Preliminary discussion of the logical design of an electronic computing instrument¹

Arthur W. Burks / Herman H. Goldstine / John von Neumann

PART I

1. Principal components of the machine

1.1. Inasmuch as the completed device will be a general-purpose computing machine it should contain certain main organs relating to arithmetic, memory-storage, control and connection with the human operator. It is intended that the machine be fully automatic in character, i.e. independent of the human operator after the computation starts. A fuller discussion of the implications of this remark will be given in Sec. 3 below.

1.2. It is evident that the machine must be capable of storing in some manner not only the digital information needed in a given computation such as boundary values, tables of functions (such as the equation of state of a fluid) and also the intermediate results of the computation (which may be wanted for varying lengths of time), but also the instructions which govern the actual routine to be performed on the numerical data. In a special-purpose machine these instructions are an integral part of the device and constitute a part of its design structure. For an all-purpose machine it must be possible to instruct the device to carry out any computation that can be formulated in numerical terms. Hence there must be some organ capable of storing these program orders. There must, moreover, be a unit which can understand these instructions and order their execution.

1.3. Conceptually we have discussed above two different forms of memory: storage of numbers and storage of orders. If, however, the orders to the machine are reduced to a numerical code and if the machine can in some fashion distinguish a number from an order, the memory organ can be used to store both numbers and orders. The coding of orders into numeric form is discussed in 6.3 below.

1.4. If the memory for orders is merely a storage organ there must exist an organ which can automatically execute the orders stored in the memory. We shall call this organ the Control.

1.5. Inasmuch as the device is to be a computing machine there must be an arithmetic organ in it which can perform certain of the elementary arithmetic operations. There will be, therefore, a unit capable of adding, subtracting, multiplying and dividing. It will be seen in 6.6 below that it can also perform additional operations that occur quite frequently.

The operations that the machine will view as elementary are clearly those which are wired into the machine. To illustrate, the operation of multiplication could be eliminated from the device as an elementary process if one were willing to view it as a properly ordered series of additions. Similar remarks apply to division. In general, the inner economy of the arithmetic unit is determined by a compromise between the desire for speed of operation (a non-elementary operation will generally take a long time to perform since it is constituted of a series of orders given by the control) and the desire for simplicity, or cheapness, of the machine.
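As a minimal sketch of the trade-off just described, the following Python function forms a product purely from additions and counts how many elementary additions it spends. The function name and the restriction to non-negative integers are assumptions made for illustration; they are not part of the report.

```python
def multiply_by_additions(multiplicand: int, multiplier: int) -> tuple[int, int]:
    """Form a product as an ordered series of additions.

    Returns (product, additions_used). Hypothetical helper for illustration;
    it handles non-negative operands only.
    """
    if multiplicand < 0 or multiplier < 0:
        raise ValueError("this sketch handles non-negative operands only")
    product = 0
    additions = 0
    for _ in range(multiplier):
        product += multiplicand  # one elementary addition order
        additions += 1
    return product, additions


product, additions = multiply_by_additions(12, 5)
print(product, additions)
```

The count of additions grows with the multiplier, which is the sense in which a non-elementary operation costs time that a wired-in multiplier would save.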

1.6. Lastly there must exist devices, the input and output organ, whereby the human operator and the machine can communicate with each other. This organ will be seen below in 4.5, where it is discussed, to constitute a secondary form of automatic memory.

2. First remarks on the memory

2.1. It is clear that the size of the memory is a critical consideration in the design of a satisfactory general-purpose computing machine. We proceed to discuss what quantities the memory should store for various types of computations.

¹ From A. H. Taub (ed.), "Collected Works of John von Neumann," vol. 5, pp. 34-79, The Macmillan Company, New York, 1963. Taken from report to U. S. Army Ordnance Department, 1946. See also Bibliography Burks, Goldstine and von Neumann, 1962a, 1962b, 1963; and Goldstine and von Neumann 1963a, 1963b, 1963c, 1963d.

2.2. In the solution of partial differential equations the storage requirements are likely to be quite extensive. In general, one must remember not only the initial and boundary conditions and any arbitrary functions that enter the problem but also an extensive number of intermediate results.

(a) For equations of parabolic or hyperbolic type in two independent variables the integration process is essentially a double induction. To find the values of the dependent variables at time $t + \Delta t$ one integrates with respect to $x$ from one boundary to the other by utilizing the data at time $t$ as if they were coefficients which contribute to defining the problem of this integration.

Not only must the memory have sufficient room to store these intermediate data but there must be provisions whereby these data can later be removed, i.e. at the end of the $(t + \Delta t)$ cycle, and replaced by the corresponding data for the $(t + 2\Delta t)$ cycle. This process of removing data from the memory and of replacing them with new information must, of course, be done quite automatically under the direction of the control.

(b) For total differential equations the memory requirements are clearly similar to, but smaller than, those discussed in (a) above.

(c) Problems that are solved by iterative procedures such as systems of linear equations or elliptic partial differential equations, treated by relaxation techniques, may be expected to require quite extensive memory capacity. The memory requirement for such problems is apparently much greater than for those problems in (a) above in which one needs only to store information corresponding to the instantaneous value of one variable [$t$ in (a) above], while now entire solutions (covering all values of all variables) must be stored. This apparent discrepancy in magnitudes can, however, be somewhat overcome by the use of techniques which permit the use of much coarser integration meshes in this case, than in the cases under (a).

2.3. It is reasonable at this time to build a machine that can conveniently handle problems several orders of magnitude more complex than are now handled by existing machines, electronic or electro-mechanical. We consequently plan on a fully automatic electronic storage facility of about 4,000 numbers of 40 binary digits each. This corresponds to a precision of $2^{-40} \approx 0.9 \times 10^{-12}$, i.e. of about 12 decimals. We believe that this memory capacity exceeds the capacities required for most problems that one deals with at present by a factor of about 10. The precision is also safely higher than what is required for the great majority of present day problems. In addition, we propose that we have a subsidiary memory of much larger capacity, which is also fully automatic, on some medium such as magnetic wire or tape.
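The figures quoted in 2.3 can be checked with a few lines of arithmetic. The constant names below are chosen for this sketch only.

```python
import math

WORDS = 4_000          # planned number of stored numbers (from 2.3)
BITS_PER_WORD = 40     # binary digits per number (from 2.3)

total_bits = WORDS * BITS_PER_WORD                # binary digits of fast storage
precision = 2.0 ** -BITS_PER_WORD                 # smallest step of a 40-digit fraction
decimal_digits = BITS_PER_WORD * math.log10(2)    # equivalent number of decimals

print(total_bits)
print(f"{precision:.3e}")
print(f"{decimal_digits:.2f}")
```

The last value comes out slightly above 12, in line with the statement that 40 binary digits correspond to about 12 decimals.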

3. First remarks on the control and code

3.1. It is easy to see by formal-logical methods that there exist codes that are in abstracto adequate to control and cause the execution of any sequence of operations which are individually available in the machine and which are, in their entirety, conceivable by the problem planner. The really decisive considerations from the present point of view, in selecting a code, are more of a practical nature: simplicity of the equipment demanded by the code, and the clarity of its application to the actually important problems together with the speed of its handling of those problems. It would take us much too far afield to discuss these questions at all generally or from first principles. We will therefore restrict ourselves to analyzing only the type of code which we now envisage for our machine.

3.2. There must certainly be instructions for performing the fundamental arithmetic operations. The specifications for these orders will not be completely given until the arithmetic unit is described in a little more detail.

3.3. It must be possible to transfer data from the memory to the arithmetic organ and back again. In transferring information from the arithmetic organ back into the memory there are two types we must distinguish: Transfers of numbers as such and transfers of numbers which are parts of orders. The first case is quite obvious and needs no further explication. The second case is more subtle and serves to illustrate the generality and simplicity of the system. Consider, by way of illustration, the problem of interpolation in the system. Let us suppose that we have formulated the necessary instructions for performing an interpolation of order n in a sequence of data. The exact location in the memory of the (n + 1) quantities that bracket the desired functional value is, of course, a function of the argument. This argument probably is found as the result of a computation in the machine. We thus need an order which can substitute a number into a given order; in the case of interpolation, this number is the location of the argument or the group of arguments that is nearest in our table to the desired value. By means of such an order the results of a computation can be introduced into the instructions governing that or a different computation. This makes it possible for a sequence of instructions to be used with different sets of numbers located in different parts of the memory.

Section 1 Processors with one address per instruction

To summarize, transfers into the memory will be of two sorts:

Total substitutions, whereby the quantity previously stored is cleared out and replaced by a new number.

Partial substitutions, in which that part of an order containing a memory location-number (we assume the various positions in the memory are enumerated serially by memory location-numbers) is replaced by a new memory location-number.
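The two kinds of transfer can be sketched as follows. The layout used here, with the memory location-number occupying the low 12 binary digits of an order and an operation code above it, is an assumption made for illustration; this part of the report does not yet fix the format of an order. The function names are likewise hypothetical.

```python
ADDRESS_BITS = 12                        # assumed: 2**12 = 4096 locations
ADDRESS_MASK = (1 << ADDRESS_BITS) - 1   # selects the location-number field


def total_substitution(memory: list[int], location: int, number: int) -> None:
    """Clear out the stored quantity and replace it by a new number."""
    memory[location] = number


def partial_substitution(memory: list[int], location: int, new_address: int) -> None:
    """Replace only the location-number part of the order stored at `location`."""
    if not 0 <= new_address <= ADDRESS_MASK:
        raise ValueError("location-number does not fit in the address field")
    order = memory[location]
    # Keep everything above the address field, overwrite the field itself.
    memory[location] = (order & ~ADDRESS_MASK) | new_address


memory = [0] * 16
memory[3] = (5 << ADDRESS_BITS) | 100    # hypothetical order: operation 5, address 100
partial_substitution(memory, 3, 2047)    # same operation, now aimed at location 2047
print(memory[3] >> ADDRESS_BITS, memory[3] & ADDRESS_MASK)
```

A computed table index can in this way be planted into an order before that order is executed, which is the interpolation use described in 3.3.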

3.4. It is clear that one must be able to get numbers from any part of the memory at any time. The treatment in the case of orders can, however, be more methodical since one can at least partially arrange the control instructions in a linear sequence. Consequently the control will be so constructed that it will normally proceed from place n in the memory to place (n + 1) for its next instruction.

3.5. The utility of an automatic computer lies in the possibility of using a given sequence of instructions repeatedly, the number of times it is iterated being either preassigned or dependent upon the results of the computation. When the iteration is completed a different sequence of orders is to be followed, so we must, in most cases, give two parallel trains of orders preceded by an instruction as to which routine is to be followed. This choice can be made to depend upon the sign of a number (zero being reckoned as plus for machine purposes). Consequently, we introduce an order (the conditional transfer order) which will, depending on the sign of a given number, cause the proper one of two routines to be executed.

Frequently two parallel trains of orders terminate in a common routine. It is desirable, therefore, to order the control in either case to proceed to the beginning point of the common routine. This unconditional transfer can be achieved either by the artificial use of a conditional transfer or by the introduction of an explicit order for such a transfer.
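The sequencing rules of 3.4 and 3.5 can be illustrated with a very small interpreter. Everything about its order code (the operation names, the representation of an order as a Python tuple, the single accumulator) is a hypothetical choice for this sketch and not the code of the report. Only three behaviours are taken from the text: the control normally passes from place n to place n + 1, a conditional transfer depends on the sign of a number with zero reckoned as plus, and an unconditional transfer leads to a common routine.

```python
def run(memory: list, start: int = 0, max_steps: int = 10_000) -> list:
    """Execute orders stored in `memory` until a HALT order is reached."""
    acc = 0          # single accumulator (assumed for this sketch)
    pc = start       # place of the next order
    for _ in range(max_steps):
        op, addr = memory[pc]
        pc += 1      # normal sequencing: from place n to place n + 1
        if op == "LOAD":
            acc = memory[addr]
        elif op == "ADD":
            acc += memory[addr]
        elif op == "SUB":
            acc -= memory[addr]
        elif op == "STORE":
            memory[addr] = acc
        elif op == "JUMP":               # unconditional transfer
            pc = addr
        elif op == "JUMP_IF_NONNEG":     # conditional transfer, zero counts as plus
            if acc >= 0:
                pc = addr
        elif op == "HALT":
            return memory
        else:
            raise ValueError(f"unknown operation {op!r}")
    raise RuntimeError("step limit exceeded")


def build_demo_memory() -> list:
    """Orders in places 0..9, numbers in places 20..23."""
    memory = [0] * 24
    program = [
        ("LOAD", 21), ("ADD", 22), ("STORE", 21),    # total += step
        ("LOAD", 20), ("SUB", 23), ("STORE", 20),    # counter -= 1
        ("JUMP_IF_NONNEG", 0),                        # repeat while counter >= 0
        ("JUMP", 9),                                  # skip the order in place 8
        ("STORE", 21),                                # never executed in this run
        ("HALT", 0),
    ]
    memory[:len(program)] = program
    memory[20], memory[21], memory[22], memory[23] = 3, 0, 5, 1
    return memory


result = run(build_demo_memory())
print(result[21])
```

With the counter starting at 3 the loop body is executed four times, since the transfer is still taken when the counter reaches zero.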

3.6. Finally we need orders which will integrate the input-output devices with the machine. These are discussed briefly in 6.8.

3.7. We proceed now to a more detailed discussion of the machine. Inasmuch as our experience has shown that the moment one chooses a given component as the elementary memory unit, one has also more or less determined upon much of the balance of the machine, we start by a consideration of the memory organ. In attempting an exposition of a highly integrated device like a computing machine we do not find it possible, however, to give an exhaustive discussion of each organ before completing its description. It is only in the final block diagrams that anything approaching a complete unit can be achieved.

The time units to be used in what follows will be:

1 μsec = 1 microsecond = $10^{-6}$ seconds

1 msec = 1 millisecond = $10^{-3}$ seconds

4. The memory organ

4.1. Ideally one would desire an indefinitely large memory capacity such that any particular aggregate of 40 binary digits, or word (cf. 2.3), would be immediately available, i.e. in a time which is somewhat or considerably shorter than the operation time of a fast electronic multiplier. This may be assumed to be practical at the level of about 100 μsec. Hence the availability time for a word in the memory should be 5 to 50 μsec. It is equally desirable that words may be replaced with new words at about the same rate. It does not seem possible physically to achieve such a capacity. We are therefore forced to recognize the possibility of constructing a hierarchy of memories, each of which has greater capacity than the preceding but which is less quickly accessible.

The most common forms of storage in electrical circuits are the flip-flop or trigger circuit, the gas tube, and the electromechanical relay. To achieve a memory of n words would, of course, require about 40n such elements, exclusive of the switching elements. We saw earlier (cf. 2.2) that a fast memory of several thousand words is not at all unreasonable for an all-purpose instrument. Hence, about $10^5$ flip-flops or analogous elements would be required! This would, of course, be entirely impractical.

We must therefore seek out some more fundamental method of storing electrical information than has been suggested above. One criterion for such a storage medium is that the individual storage organs, which accommodate only one binary digit each, should not be macroscopic components, but rather microscopic elements of some suitable organ. They would then, of course, not be identified and switched to by the usual macroscopic wire connections, but by some functional procedure in manipulating that organ.

One device which displays this property to a marked degree is the iconoscope tube. In its conventional form it possesses a linear resolution of about one part in 500. This would correspond to a (two-dimensional) memory capacity of $500 \times 500 = 2.5 \times 10^5$. One is accordingly led to consider the possibility of storing electrical charges on a dielectric plate inside a cathode-ray tube. Effectively such a tube is nothing more than a myriad of electrical capacitors which can be connected into the circuit by means of an electron beam.

Actually the above mentioned high resolution and concomitant memory capacity are only realistic under the conditions of television-image storage, which are much less exigent in respect to the reliability of individual markings than what one can accept in the storage for a computer. In this latter case resolutions of one part in 20 to 100, i.e. memory capacities of 400 to 10,000, would seem to be more reasonable in terms of equipment built essentially along familiar lines.

At the present time the Princeton Laboratories of the Radio Corporation of America are engaged in the development of a storage tube, the Selectron, of the type we have mentioned above. This tube is also planned to have a non-amplitude-sensitive switching system whereby the electron beam can be directed to a given spot on the plate within a quite small fraction of a millisecond. Inasmuch as the storage tube is the key component of the machine envisaged in this report we are extremely fortunate in having secured the cooperation of the RCA group in this as well as in various other developments.

An alternate form of rapid memory organ is the acoustic feedback delay line described in various reports on the EDVAC. (This is an electronic computing machine being developed for the Ordnance Department, U.S. Army, by the University of Pennsylvania, Moore School of Electrical Engineering.) Inasmuch as that device has been so clearly reported in those papers we give no further discussion. There are still other physical and chemical properties of matter in the presence of electrons or photons that might be considered, but since none is yet beyond the early discussion stage we shall not make further mention of them.

4.2. We shall accordingly assume throughout the balance of this report that the Selectron is the modus for storage of words at electronic speeds. As now planned, this tube will have a capacity of $2^{12} = 4{,}096 \approx 4{,}000$ binary digits. To achieve a total electronic storage of about 4,000 words we propose to use 40 Selectrons, thereby achieving a memory of $2^{12}$ words of 40 binary digits each. (Cf. again 2.3.)

4.3. There are two possible means for storing a particular word in the Selectron memory (or, in fact, in either a delay line memory or in a storage tube with amplitude-sensitive deflection). One method is to store the entire word in a given tube and then to get the word out by picking out its respective digits in a serial fashion. The other method is to store in corresponding places in each of the 40 tubes one digit of the word. To get a word from the memory in this scheme requires, then, one switching mechanism to which all 40 tubes are connected in parallel. Such a switching scheme seems to us to be simpler than the technique needed in the serial system and is, of course, 40 times faster. We accordingly adopt the parallel procedure and thus are led to consider a so-called parallel machine, as contrasted with the serial principles being considered for the EDVAC. (In the EDVAC the peculiar characteristics of the acoustic delay line, as well as various other considerations, seem to justify a serial procedure. For more details, cf. the reports referred to in 4.1.) The essential difference between these two systems lies in the method of performing an addition; in a parallel machine all corresponding pairs of digits are added simultaneously, whereas in a serial one these pairs are added serially in time.

4.4. To summarize, we assume that the fast electronic memory consists of 40 Selectrons which are switched in parallel by a common switching arrangement. The inputs of the switch are controlled by the control.
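The parallel arrangement summarized in 4.4 can be modelled as 40 separate digit stores that share one address. The class below is a sketch under that reading; its name, its methods and the use of Python lists to stand for tubes are all assumptions for illustration.

```python
WORD_BITS = 40      # one tube per binary digit of the word
LOCATIONS = 4096    # 2**12 storage places per tube


class ParallelMemory:
    """Digit i of the word at address a is kept in tube i, place a."""

    def __init__(self) -> None:
        self.tubes = [[0] * LOCATIONS for _ in range(WORD_BITS)]

    def write(self, address: int, word: int) -> None:
        if not 0 <= word < (1 << WORD_BITS):
            raise ValueError("word does not fit in 40 binary digits")
        # The same address is applied to every tube (common switching).
        for i, tube in enumerate(self.tubes):
            tube[address] = (word >> i) & 1

    def read(self, address: int) -> int:
        # All 40 digits are available at once; here they are simply reassembled.
        return sum(tube[address] << i for i, tube in enumerate(self.tubes))


memory = ParallelMemory()
memory.write(6, 0b1011)
print(memory.read(6), [memory.tubes[i][6] for i in range(4)])
```

In a serial scheme the same word would instead occupy 40 successive places of a single tube and would have to be read one digit at a time.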

4.5. Inasmuch as a great many highly important classes of problems require a far greater total memory than $2^{12}$ words, we now consider the next stage in our storage hierarchy. Although the solution of partial differential equations frequently involves the manipulation of many thousands of words, these data are generally required only in blocks which are well within the $2^{12}$ capacity of the electronic memory. Our second form of storage must therefore be a medium which feeds these blocks of words to the electronic memory. It should be controlled by the control of the computer and is thus an integral part of the system, not requiring human intervention.

There are evidently two distinct problems raised above. One can choose a given medium for storage such as teletype tapes, magnetic wire or tapes, movie film or similar media. There still remains the problem of automatic integration of this storage medium with the machine. This integration is achieved logically by introducing appropriate orders into the code which can instruct the machine to read or write on the medium, or to move it by a given amount or to a place with given characteristics. We discuss this question a little more fully in 6.8.

Let us return now to the question of what properties the secondary storage medium should have. It clearly should be able to store information for periods of time long enough so that only a few per cent of the total computing time is spent in re-registering information that is "fading off." It is certainly desirable, although not imperative, that information can be erased and replaced by new data. The medium should be such that it can be controlled, i.e. moved forward and backward, automatically. This consideration makes certain media, such as punched cards, undesirable. While cards can, of course, be printed or read by appropriate orders from some machine, they are not well adapted to problems in which the output data are fed directly back into the machine, and are required in a sequence which is non-monotone with respect to the order of the cards. The medium should be capable of remembering very large numbers of data at a much smaller price than electronic devices. It must be fast enough so that, even when it has to be used frequently in a problem, a large percentage of the total solution time is not spent in getting data into and out of this medium and achieving the desired positioning on it. If this condition is not reasonably well met, the advantages of the high electronic speeds of the machine will be largely lost.

Both light- or electron-sensitive film and magnetic wires or tapes, whose motions are controlled by servo-mechanisms integrated with the control, would seem to fulfil our needs reasonably well. We have tentatively decided to use magnetic wires since we have achieved reliable performance with them at pulse rates of the order of 25,000/sec and beyond.

4.6. Lastly our memory hierarchy requires a vast quantity of dead storage, i.e. storage not integrated with the machine. This storage requirement may be satisfied by a library of wires that can be introduced into the machine when desired and at that time become automatically controlled. Thus our dead storage is really nothing but an extension of our secondary storage medium. It differs from the latter only in its availability to the machine.

4.7. We impose one additional requirement on our secondary memory. It must be possible for a human to put words on to the wire or other substance used and to read the words put on by the machine. In this manner the human can control the machine's functions. It is now clear that the secondary storage medium is really nothing other than a part of our input-output system, cf. 6.8.4 for a description of a mechanism for achieving this.

4.8. There is another highly important part of the input-output which we merely mention at this time, namely, some mechanism for viewing graphically the results of a given computation. This can, of course, be achieved by a Selectron-like tube which causes its screen to fluoresce when data are put on it by an electron beam.

4.9. For definiteness in the subsequent discussions we assume that associated with the output of each Selectron is a flip-flop. This assemblage of 40 flip-flops we term the Selectron Register.

5. The arithmetic organ

5.1. In this section we discuss the features we now consider desirable for the arithmetic part of our machine. We give our tentative conclusions as to which of the arithmetic operations should be built into the machine and which should be programmed. Finally, a schematic of the arithmetic unit is described.

5.2. In a discussion of the arithmetical organs of a computing machine one is naturally led to a consideration of the number system to be adopted. In spite of the longstanding tradition of building digital machines in the decimal system, we feel strongly in favor of the binary system for our device. Our fundamental unit of memory is naturally adapted to the binary system since we do not attempt to measure gradations of charge at a particular point in the Selectron but are content to distinguish two states. The flip-flop again is truly a binary device. On magnetic wires or tapes and in acoustic delay line memories one is also content to recognize the presence or absence of a pulse or (if a carrier frequency is used) of a pulse train, or of the sign of a pulse. (We will not discuss here the ternary possibilities of a positive-or-negative-or-no-pulse system and their relationship to questions of reliability and checking, nor the very interesting possibilities of carrier frequency modulation.) Hence if one contemplates using a decimal system with either the iconoscope or delay-line memory one is forced into a binary coding of the decimal system, each decimal digit being represented by at least a tetrad of binary digits. Thus an accuracy of ten decimal digits requires at least 40 binary digits. In a true binary representation of numbers, however, about 33 digits suffice to achieve a precision of $10^{10}$. The use of the binary system is therefore somewhat more economical of equipment than is the decimal.
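The comparison of digit counts can be reproduced with a short calculation. The helper names are chosen for this sketch. Note that $\log_2 10^{10} \approx 33.2$, so the exact count needed to hold every ten-digit decimal integer is 34, which agrees with "about 33" only after rounding.

```python
import math


def coded_decimal_bits(decimal_digits: int) -> int:
    """Binary digits needed when each decimal digit takes a tetrad (4 digits)."""
    return 4 * decimal_digits


def true_binary_bits(decimal_digits: int) -> int:
    """Binary digits needed to hold the largest integer of that many decimals."""
    return (10 ** decimal_digits - 1).bit_length()


print(coded_decimal_bits(10))      # tetrad coding of ten decimal digits
print(true_binary_bits(10))        # true binary representation
print(math.log2(10 ** 10))         # the unrounded figure behind "about 33"
```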

The main virtue of the binary system as against the decimal is, however, the greater simplicity and speed with which the elementary operations can be performed. To illustrate, consider multiplication by repeated addition. In binary multiplication the product of a particular digit of the multiplier by the multiplicand is either the multiplicand or null according as the multiplier digit is 1 or 0. In the decimal system, however, this product has ten possible values between null and nine times the multiplicand, inclusive. Of course, a decimal number has only $\log_{10} 2 \approx 0.3$ times as many digits as a binary number of the same accuracy, but even so multiplication in the decimal system is considerably longer than in the binary system. One can accelerate decimal multiplication by complicating the circuits, but this fact is irrelevant to the point just made since binary multiplication can likewise be accelerated by adding to the equipment. Similar remarks may be made about the other operations.
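A minimal sketch of the binary case follows: for each digit of the multiplier the partial product is either the multiplicand or null, and it is added in after a shift. The function name and the restriction to non-negative integers are assumptions for illustration, and the sketch says nothing about how the circuits of the machine would actually be arranged.

```python
def binary_multiply(multiplicand: int, multiplier: int, bits: int = 40) -> int:
    """Multiply by examining the multiplier one binary digit at a time."""
    if multiplicand < 0 or not 0 <= multiplier < (1 << bits):
        raise ValueError("operands out of range for this sketch")
    product = 0
    for i in range(bits):
        digit = (multiplier >> i) & 1
        partial = multiplicand if digit else 0   # only two possible values
        product += partial << i                  # shift into place, then add
    return product


print(binary_multiply(13, 11))
```

In a decimal version of the same loop the line computing `partial` would have to select among ten multiples of the multiplicand, which is the added complication the paragraph refers to.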

An additional point that deserves emphasis is this: An important part of the machine is not arithmetical, but logical in nature. Now logics, being a yes-no system, is fundamentally binary. Therefore a binary arrangement of the arithmetical organs contributes very significantly towards producing a more homogeneous machine, which can be better integrated and is more efficient.

The one disadvantage of the binary system from the human point of view is the conversion problem. Since, however, it is completely known how to convert numbers from one base to another and since this conversion can be effected solely by the use of the usual arithmetic processes there is no reason why the computer itself cannot carry out this conversion. It might be argued that this is a time consuming operation. This, however, is not the case. (Cf. 9.6 and 9.7 of Part II. Part II is a report issued under the title Planning and Coding of Problems for an Electronic Computing Instrument.¹) Indeed a general-purpose computer, used as a scientific research tool, is called upon to do a very great number of multiplications upon a relatively small amount of input data, and hence the time consumed in the decimal to binary conversion is only a trivial percentage of the total computing time. A similar remark is applicable to the output data.

In the preceding discussion we have tacitly assumed the desirability of introducing and withdrawing data in the decimal system. We feel, however, that the base 10 may not even be a permanent feature in a scientific instrument and consequently will probably attempt to train ourselves to use numbers base 2 or 8 or 16. The reason for the bases 8 or 16 is this: Since 8 and 16 are powers of 2 the conversion to binary is trivial; since both are about the size of 10, they violate many of our habits less badly than base 2. (Cf. Part II, 9.4.)
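The remark that conversion between binary and base 8 or 16 is trivial can be made concrete: each octal digit is simply a group of three binary digits, and each base-16 digit a group of four. The function below is an illustrative sketch with a hypothetical name.

```python
def regroup_binary(value: int, bits_per_digit: int, width: int = 40) -> str:
    """Write a `width`-digit binary number in base 2**bits_per_digit by grouping."""
    symbols = "0123456789ABCDEF"
    if not 1 <= bits_per_digit <= 4 or not 0 <= value < (1 << width):
        raise ValueError("arguments out of range for this sketch")
    mask = (1 << bits_per_digit) - 1
    n_digits = -(-width // bits_per_digit)   # ceiling division
    digits = []
    for k in reversed(range(n_digits)):
        # No arithmetic beyond shifting and masking is required.
        digits.append(symbols[(value >> (k * bits_per_digit)) & mask])
    return "".join(digits)


print(regroup_binary(0b101_110_011, 3, width=9))   # octal grouping
print(regroup_binary(0b1010_1111, 4, width=8))     # base-16 grouping
```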

5.3. Several of the digital computers being built or planned in this country and England are to contain a so-called "floating decimal point". This is a mechanism for expressing each word as a characteristic and a mantissa; e.g. 123.45 would be carried in the machine as (0.12345,03), where the 3 is the exponent of 10 associated with the number. There appear to be two major purposes in a "floating" decimal point system both of which arise from the fact that the number of digits in a word is a constant, fixed by design considerations for each particular machine. The first of these purposes is to retain in a sum or product as many significant digits as possible and the second of these is to free the human operator from the burden of estimating and inserting into a problem "scale factors", i.e. multiplicative constants which serve to keep numbers within the limits of the machine.

There is, of course, no denying the fact that human time is consumed in arranging for the introduction of suitable scale factors. We only argue that the time so consumed is a very small percentage of the total time we will spend in preparing an interesting problem for our machine. The first advantage of the floating point is, we feel, somewhat illusory. In order to have such a floating point one must waste memory capacity which could otherwise be used for carrying more digits per word. It would therefore seem to us not at all clear whether the modest advantages of a floating binary point offset the loss of memory capacity and the increased complexity of the arithmetic and control circuits.

There are certainly some problems within the scope of our device which really require more than $2^{-40}$ precision. To handle such problems we wish to plan in terms of words whose lengths are some fixed integral multiple of 40, and program the machine in such a manner as to give the corresponding aggregates of 40 digit words the proper treatment. We must then consider an addition or multiplication as a complex operation programmed from a number of primitive additions or multiplications (cf. § 9, Part II). There would seem to be considerable extra difficulties in the way of such a procedure in an instrument with a floating binary point.
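A programmed addition of longer numbers out of primitive 40-digit additions might look like the following sketch. It treats each word as an unsigned 40-digit quantity and stores the least significant word first; both conventions, and the function name, are assumptions for illustration and ignore the sign conventions of the actual machine.

```python
WORD_BITS = 40
WORD_MASK = (1 << WORD_BITS) - 1


def add_multiword(a_words: list[int], b_words: list[int]) -> tuple[list[int], int]:
    """Add two numbers held as equal-length lists of 40-digit words.

    Words are listed least significant first. Returns (sum_words, carry_out).
    """
    if len(a_words) != len(b_words):
        raise ValueError("operands must have the same number of words")
    result = []
    carry = 0
    for a, b in zip(a_words, b_words):
        total = a + b + carry              # one primitive addition plus the carry
        result.append(total & WORD_MASK)   # keep the low 40 digits in this word
        carry = total >> WORD_BITS         # pass the overflow to the next word
    return result, carry


print(add_multiword([WORD_MASK, 0], [1, 0]))
```

With a fixed binary point the only link between successive words is this single carry, which is one way to see why the procedure is simpler than it would be with a floating point.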

The reader may remark upon our alternate spells of radicalism and conservatism in deciding upon various possible features for our mechanism. We hope, however, that he will agree, on closer inspection, that we are guided by a consistent and sound principle in judging the merits of any idea. We wish to incorporate into the machine (in the form of circuits) only such logical concepts as are either necessary to have a complete system or highly convenient because of the frequency with which they occur and the influence they exert in the relevant mathematical situations.

5.4. On the basis of this criterion we definitely wish to build into the machine circuits which will enable it to form the binary sum of two 40 digit numbers. We make this decision not because addition is a logically basic notion but rather because it would slow the mechanism as well as the operator down enormously if each addition were programmed out of the more simple operations of "and", "or", and "not". The same is true for the subtraction. Similarly we reject the desire to form products by programming them out of additions, the detailed motivation being very much the same as in the case of addition and subtraction. The cases for division and square-rooting are much less clear.

It is well known that the reciprocal of a number $a$ can be formed to any desired accuracy by iterative schemes. One such scheme consists of improving an estimate $X$ by forming $X' = 2X - aX^2$. Thus the new error $1 - aX'$ is $(1 - aX)^2$, which is the square of the error in the preceding estimate. We notice that in the formation of $X'$, there are two bona fide multiplications (we do not consider multiplication by 2 as a true product since we will have a facility for shifting right or left in one or two pulse times). If then we somehow could guess $1/a$ to a precision of $2^{-5}$, 6 multiplications (3 iterations) would suffice to give a final result good to $2^{-40}$. Accordingly a small table of $2^4$ entries could be used to get the initial estimate of $1/a$. In this way a reciprocal $1/a$ could be formed in 6 multiplication times, and hence a quotient $b/a$ in 7 multiplication times. Accordingly we see that the question of building a divider is really a function of how fast it can be made to operate compared to the iterative method sketched above: In order to justify its existence, a divider must perform a division in a good deal less than 7 multiplication times. We have, however, conceived a divider which is much faster than these 7 multiplication times and therefore feel justified in building it, especially since the amount of equipment needed above the requirements of the multiplier is not important.

¹ See Bibliography [Goldstine and von Neumann, 1963b, 1963c, 1963d]. References in this chapter are all to this report.
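The squaring of the error can be checked exactly with rational arithmetic. The sketch below uses Python's `fractions.Fraction`; the function name and the sample values of $a$ and of the initial estimate are chosen for illustration, the estimate being picked so that its error $1 - aX$ is exactly $2^{-5}$.

```python
from fractions import Fraction


def reciprocal_iterations(a: Fraction, x0: Fraction, steps: int) -> list[tuple[Fraction, Fraction]]:
    """Apply X' = 2X - a*X**2 and record (estimate, error 1 - a*X) at each stage."""
    x = Fraction(x0)
    a = Fraction(a)
    history = [(x, 1 - a * x)]
    for _ in range(steps):
        x = 2 * x - a * x * x      # two multiplications: a*X, then (a*X)*X
        history.append((x, 1 - a * x))
    return history


a = Fraction(3, 4)
x0 = Fraction(31, 24)              # chosen so that 1 - a*x0 == 2**-5
for estimate, error in reciprocal_iterations(a, x0, 3):
    print(float(estimate), error)
```

Three iterations carry the error from $2^{-5}$ through $2^{-10}$ and $2^{-20}$ to $2^{-40}$, at a cost of two multiplications each, which gives the 6 multiplication times quoted above.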

It is, of course, also possible to handle square roots by iterative techniques. In fact, if $X$ is our estimate of $a^{1/2}$, then $X' = \frac{1}{2}(X + a/X)$ is a better estimate. We see that this scheme involves one division per iteration. As will be seen below in our more detailed examination of the arithmetic organ, we do not include a square-rooter in our plans because such a device would involve more equipment than we feel is desirable in a first model. (Concerning the iterative method of square-rooting, cf. 8.10 in Part II.)
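A minimal sketch of the square-root iteration, again in exact rationals and for illustration only. The name `sqrt_step`, the value $a = 1/2$ and the starting estimate $3/4$ are assumptions made for the example.

```python
from fractions import Fraction

def sqrt_step(a, x):
    """One step X' = (X + a/X) / 2; note the single division per iteration."""
    return (x + a / x) / 2

a = Fraction(1, 2)
x = Fraction(3, 4)               # assumed starting estimate of a^(1/2)
errors = []
for _ in range(4):
    x = sqrt_step(a, x)
    errors.append(abs(x * x - a))   # how far X^2 is from a
print([float(e) for e in errors])
```

The recorded errors shrink roughly quadratically from one step to the next.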

5.5. The first part of our arithmetic organ requires little discussion at this point. It should be a parallel storage organ which can receive a number and add it to the one already in it, which is also able to clear its contents and which can transmit what it contains. We will call such an organ an Accumulator. It is quite conventional in principle in past and present computing machines of the most varied types, e.g. desk multipliers, standard IBM counters, more modern relay machines, the ENIAC. There are, of course, numerous ways to build such a binary accumulator. We distinguish two broad types of such devices: static, and dynamic or pulse-type accumulators. These will be discussed in 5.11, but it is first necessary to make a few remarks concerning the arithmetic of binary addition. In a parallel accumulator, the first step in an addition is to add each digit of the addend to the corresponding digit of the augend. The second step is to perform the carries, and this must be done in sequence since a carry may produce a carry. In the worst case, 39 carries will occur. Clearly it is inefficient to allow 39 times as much time for the second step (performing the carries) as for the first step (adding the digits). Hence either the carries must be accelerated, or use must be made of the average number of carries, or both.

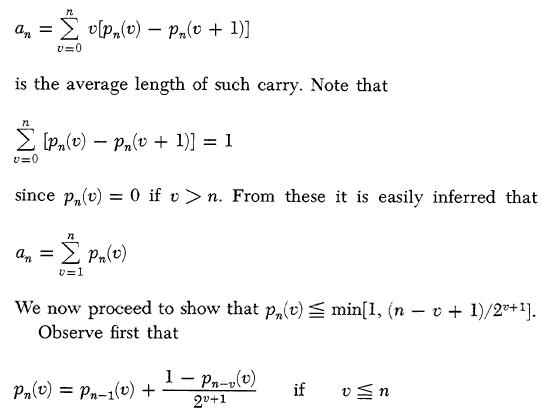

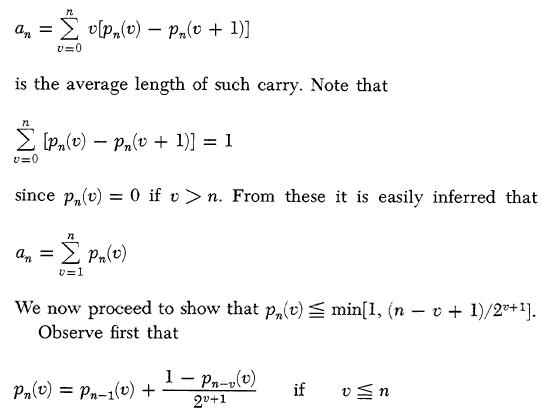

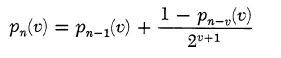

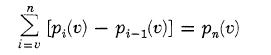

5.6. We shall show that for a sum of binary words, each of length $n$, the length of the largest carry sequence is on the average not in excess of $\log_2 n$. Let $p_n(v)$ designate the probability that a carry sequence is of length $v$ or greater in the sum of two binary words of length $n$. Then clearly $p_n(v) - p_n(v + 1)$ is the probability that the largest carry sequence is of length exactly $v$, and the weighted average

$$a_n = \sum_{v=0}^{n} v\,[p_n(v) - p_n(v + 1)]$$

is the average length of the largest carry sequence.

We first relate $p_n(v)$ to the corresponding probabilities for shorter words. Indeed, $p_n(v)$ is the probability that the sum of two $n$-digit numbers contains a carry sequence of length $\ge v$. This probability obtains by adding the probabilities of two mutually exclusive alternatives: First: Either the $n - 1$ first digits of the two numbers by themselves contain a carry sequence of length $\ge v$. This has the probability $p_{n-1}(v)$. Second: The $n - 1$ first digits of the two numbers by themselves do not contain a carry sequence of length $\ge v$. In this case any carry sequence of length $\ge v$ in the total numbers (of length $n$) must end with the last digits of the total sequence. Hence these must form the combination 1, 1. The next $v - 1$ digits must propagate the carry, hence each of these must form the combination 1, 0 or 0, 1. (The combinations 1, 1 and 0, 0 do not propagate a carry.) The probability of the combination 1, 1 is 1/4, that of one of the alternative combinations 1, 0 or 0, 1 is 1/2. The total probability of this sequence is therefore $\frac{1}{4}\left(\frac{1}{2}\right)^{v-1} = \left(\frac{1}{2}\right)^{v+1}$. The remaining $n - v$ digits must not contain a carry sequence of length $\ge v$. This has the probability $1 - p_{n-v}(v)$. Thus the probability of the second case is $[1 - p_{n-v}(v)]/2^{v+1}$. Combining these two cases, the desired relation

$$p_n(v) = p_{n-1}(v) + \frac{1 - p_{n-v}(v)}{2^{v+1}}$$

obtains. The observation that $p_n(v) = 0$ if $v > n$ is trivial.

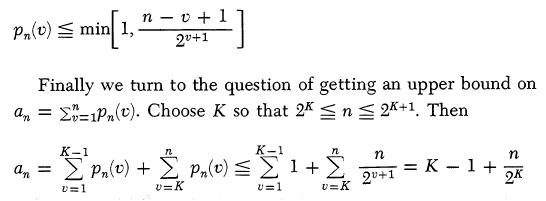

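With the help of the formulas proved above we see that $p_n(v) - p_{n-1}(v)$ is always $\le 1/2^{v+1}$, and hence that the sum

$$p_n(v) = \sum_{i=v}^{n} [p_i(v) - p_{i-1}(v)]$$

is not in excess of $(n - v + 1)/2^{v+1}$, since there are $n - v + 1$ terms in the sum; since, moreover, each $p_n(v)$ is a probability, it is not greater than 1. Hence we have

$$p_n(v) \le \min\left[1, \frac{n - v + 1}{2^{v+1}}\right].$$

To bound the average length $a_n = \sum_{v=1}^{n} p_n(v)$ of the largest carry sequence, choose $K$ so that $2^K \le n \le 2^{K+1}$, and bound the terms with $v < K$ by 1 and the remaining ones by $n/2^{v+1}$:

$$a_n \le (K - 1) + \sum_{v=K}^{\infty} \frac{n}{2^{v+1}} = K - 1 + \frac{n}{2^K}.$$

This last expression is clearly linear in $n$ in the interval $2^K \le n \le 2^{K+1}$, and it is $= K$ for $n = 2^K$ and $= K + 1$ for $n = 2^{K+1}$, i.e. it is $= \log_2 n$ at both ends of this interval. Since the function $\log_2 n$ is everywhere concave from below, it follows that our expression is $\le \log_2 n$ throughout this interval. Thus $a_n \le \log_2 n$. This holds for all $K$, i.e. for all $n$, and it is the inequality which we wanted to prove.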

For our case $n = 40$ we have $a_n \le \log_2 40 \approx 5.3$, i.e. an average length of about 5 for the longest carry sequence. (The actual value of $a_{40}$ is 4.62.)
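The bound can be compared with a direct numerical evaluation. The sketch below assumes the recursion $p_n(v) = p_{n-1}(v) + [1 - p_{n-v}(v)]/2^{v+1}$ with $p_n(v) = 0$ for $v > n$, and the average $a_n = \sum_{v=1}^{n} p_n(v)$, as derived in this section. The function names are hypothetical, and floating point is used, so the result is only approximate.

```python
from functools import lru_cache
from math import log2

@lru_cache(maxsize=None)
def p(n, v):
    """Probability of a carry sequence of length >= v in an n-digit sum."""
    if v > n:
        return 0.0
    return p(n - 1, v) + (1.0 - p(n - v, v)) / 2 ** (v + 1)

def average_longest_carry(n):
    """a_n, the average length of the largest carry sequence."""
    return sum(p(n, v) for v in range(1, n + 1))

a40 = average_longest_carry(40)
print(round(a40, 2), "<=", round(log2(40), 2))
```

The computed value lies below $\log_2 40$ and should be close to the figure quoted above for $a_{40}$.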

5.7. Having discussed the addition, we can now go on to the subtraction. It is convenient to discuss at this point our treatment of negative numbers, and in order to do that right, it is desirable to make some observations about the treatment of numbers in general.

Our numbers are 40 digit aggregates, the left-most digit being the sign digit, and the other digits genuine binary digits, with positional values $2^{-1}, 2^{-2}, \ldots, 2^{-39}$ (going from left to right). Our accumulator will, however, treat the sign digit, too, as a binary digit with the positional value $2^0$, at least when it functions as an adder. For numbers between 0 and 1 this is clearly all right: The left-most digit will then be 0, and if 0 at this place is taken to represent a + sign, then the number is correctly expressed with its sign and 39 binary digits.

Let us now consider one or more unrestricted 40 binary digit numbers. The accumulator will add them, with the digit-adding and the carrying mechanisms functioning normally and identically in all 40 positions. There is one reservation, however: If a carry originates in the left-most position, then it has nowhere to go from there (there being no further positions to the left) and is "lost". This means, of course, that the addend and the augend, both numbers between 0 and 2, produced a sum exceeding 2, and the accumulator, being unable to express a digit with a positional value $2^1$, which would now be necessary, omitted 2. That is, the sum was formed correctly, excepting a possible error 2. If several such additions are performed in succession, then the ultimate error may be any integer multiple of 2. That is, the accumulator is an adder which allows errors that are integer multiples of 2: it is an adder modulo 2.
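The behaviour of such an adder modulo 2 can be sketched as follows. This is an illustrative model only: a 40 digit word is held as a Python integer in units of $2^{-39}$, and the names `encode`, `decode` and `ac_add` are assumptions made for the example.

```python
from fractions import Fraction

BITS = 40
SCALE = 1 << (BITS - 1)      # binary point right of the left-most digit
MASK = (1 << BITS) - 1

def encode(x):
    """40 digit word for a number in [0, 2) that is a multiple of 2^-39."""
    scaled = Fraction(x) * SCALE
    assert scaled.denominator == 1 and 0 <= scaled <= MASK
    return int(scaled)

def decode(word):
    """Value in [0, 2) represented by a 40 digit word."""
    return Fraction(word, SCALE)

def ac_add(u, v):
    """Add two words; a carry out of the left-most position is lost."""
    return (u + v) & MASK

total = decode(ac_add(encode(Fraction(3, 2)), encode(Fraction(5, 4))))
print(total)   # 3/2 + 5/4 = 11/4, which the adder reduces modulo 2
```

Here the true sum exceeds 2, so the accumulator omits 2 and holds $3/4$.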

It should be noted that our convention of placing the binary point immediately to the right of the left-most digit has nothing to do with the structure of the adder. In order to make this point clearer we proceed to discuss the possibilities of positioning the binary point in somewhat more detail.

We begin by enumerating the 40 digits of our numbers (words) from left to right. In doing this we use an index $h = 1, \ldots, 40$. Now we might have placed the binary point just as well between digits $j$ and $j + 1$, $j = 0, \ldots, 40$. Note that $j = 0$ corresponds to the position at the extreme left (there is no digit $h = j = 0$); $j = 40$ corresponds to the position at the extreme right (there is no position $h = j + 1 = 41$); and $j = 1$ corresponds to our above choice. Whatever our choice of $j$, it does not affect the correctness of the accumulator's addition. (This is equally true for subtraction, cf. below, but not for multiplication and division, cf. 5.8.) Indeed, we have merely multiplied all numbers by $2^{j-1}$ (as against our previous convention), and such a "change of scale" has no effect on addition (and subtraction). However, now the accumulator is an adder which allows errors that are integer multiples of $2^j$: it is an adder modulo $2^j$. We mention this because it is occasionally convenient to think in terms of a convention which places the binary point at the right end of the digital aggregate. Then $j = 40$, our numbers are integers, and the accumulator is an adder modulo $2^{40}$. We must emphasize, however, that all of this, i.e. all attributions of values to $j$, are purely convention, i.e. it is solely the mathematician's interpretation of the functioning of the machine and not a physical feature of the machine. This convention will necessitate measures that have to be made effective by actual physical features of the machine, i.e. the convention will become a physical and engineering reality, only when we come to the organs of multiplication.

We will use the convention $j = 1$, i.e. our numbers lie between 0 and 2 and the accumulator adds modulo 2.

This being so, these numbers between 0 and 2 can be used to represent all numbers modulo 2. Any real number $x$ agrees modulo 2 with one and only one number $\bar{x}$ between 0 and 2, or, to be quite precise: $0 \le \bar{x} < 2$. Since our addition functions modulo 2, we see that the accumulator may be used to represent and to add numbers modulo 2.

This determines the representation of negative numbers: If $x < 0$, then we have to find the unique integer multiple of 2, $2s$ ($s = 1, 2, \ldots$), such that $0 \le \bar{x} < 2$ for $\bar{x} = x + 2s$ (i.e. $-2s \le x < 2(1 - s)$), and represent $x$ by the digitalization of $\bar{x}$.

In this way, however, the sign digit character of the left-most digit is lost: It can be 0 or 1 for both $x \ge 0$ and $x < 0$, hence 0 in the left-most position can no longer be associated with the + sign of $x$. This may seem a bad deficiency of the system, but it is easy to remedy, at least to an extent which suffices for our purposes. This is done as follows:

We usually work with numbers $x$ between $-1$ and 1, or, to be quite precise: $-1 \le x < 1$. Now the $\bar{x}$ with $0 \le \bar{x} < 2$, which differs from $x$ by an integer multiple of 2, behaves as follows: If $x \ge 0$, then $0 \le x < 1$, hence $\bar{x} = x$, and so $0 \le \bar{x} < 1$: the left-most digit of $\bar{x}$ is 0. If $x < 0$, then $-1 \le x < 0$, hence $\bar{x} = x + 2$, and so $1 \le \bar{x} < 2$: the left-most digit of $\bar{x}$ is 1. Thus the left-most digit (of $\bar{x}$) is now a precise equivalent of the sign (of $x$): 0 corresponds to + and 1 to -.

Summing up:

The accumulator may be taken to represent all real numbers modulo 2, and it adds them modulo 2. If $x$ lies between $-1$ and 1 (precisely: $-1 \le x < 1$), as it will in almost all of our uses of the machine, then the left-most digit represents the sign: 0 is + and 1 is -.

Consider now a negative number $x$ with $-1 \le x < 0$. Put $x = -y$, $0 < y \le 1$. Then we digitalize $x$ by representing it as $x + 2 = 2 - y = 1 + (1 - y)$. That is, the left-most (sign) digit of $x = -y$ is, as it should be, 1; and the remaining 39 digits are those of the complement of $y = -x = |x|$, i.e. those of $1 - y$. Thus we have been led to the familiar representation of negative numbers by complementation.

The connection between the digits of $x$ and those of $-x$ is now easily formulated, for any $x \ge 0$. Indeed, writing $x_i$ for the digit of $x$ with positional value $2^{-i}$, $-x$ is equivalent to

$$2 - x = (2 - 2^{-39}) - x + 2^{-39} = \sum_{i=0}^{39} 2^{-i} - \sum_{i=0}^{39} x_i\,2^{-i} + 2^{-39} = \sum_{i=0}^{39} (1 - x_i)\,2^{-i} + 2^{-39}.$$

(This digit index $i = 1, \ldots, 39$ is related to our previous digit index $h = 1, \ldots, 40$ by $i = h - 1$. Actually it is best to treat $i$ as if its domain included the additional value $i = 0$; indeed $i = 0$ then corresponds to $h = 1$, i.e. to the sign digit. In any case $i$ expresses the positional value of the digit to which it refers more simply than $h$ does: This positional value is $2^{-i} = 2^{-(h-1)}$. Note that if we had positioned the binary point more generally between $j$ and $j + 1$, as discussed further above, this positional value would have been $2^{-(h-j)}$. We now have, as pointed out previously, $j = 1$.) Hence its digits obtain by subtracting every digit of $x$ from 1 (by complementing each digit, i.e. by replacing 0 by 1 and 1 by 0) and then adding 1 in the right-most position (and effecting all the carries that this may cause). (Note how the left-most digit, interpreted as a sign digit, gets inverted by this procedure, as it should be.)

A subtraction $x - y$ is therefore performed by the accumulator, Ac, as follows: Form $x + y'$, where $y'$ has a digit 0 or 1 where $y$ has a digit 1 or 0, respectively, and then add 1 in the right-most position. The last operation can be performed by injecting a carry into the right-most stage of Ac, since this stage can never receive a carry from any other source (there being no further positions to the right).
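The representation of negative numbers and the subtraction rule just described can be sketched as follows. This is an illustrative model only: words are Python integers in units of $2^{-39}$, and the names `encode`, `decode` and `ac_subtract` are assumptions made for the example.

```python
from fractions import Fraction

BITS = 40
SCALE = 1 << (BITS - 1)
MASK = (1 << BITS) - 1

def encode(x):
    """Word for x in [-1, 1): the digitalization of x modulo 2."""
    scaled = Fraction(x) * SCALE
    assert scaled.denominator == 1 and -SCALE <= scaled < SCALE
    return int(scaled) & MASK

def decode(word):
    """Read the left-most digit as the sign: 0 is +, 1 is -."""
    value = Fraction(word, SCALE)
    return value - 2 if word >> (BITS - 1) else value

def ac_subtract(x_word, y_word):
    """x - y: add the digitwise complement of y, then inject a carry at the right."""
    y_complement = y_word ^ MASK
    return (x_word + y_complement + 1) & MASK

minus_quarter = encode(Fraction(-1, 4))
difference = decode(ac_subtract(encode(Fraction(1, 4)), encode(Fraction(1, 2))))
print(minus_quarter >> (BITS - 1), difference)
```

The left-most digit of the word for $-1/4$ is 1, and $1/4 - 1/2$ decodes to $-1/4$.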

5.8. In the light of 5.7 multiplication requires special care, because here the entire modulo 2 procedure breaks down. Indeed, assume that we want to compute a product xy, and that we had to change one of the factors, say x, by an integer multiple of 2, say by 2. Then the product (x + 2)y obtains, and this differs from the desired xy by 2y. 2y, however, will not in general be an integer multiple of 2, since y is not in general an integer.

We will therefore begin our discussion of the multiplication by eliminating all such difficulties, and assume that both factors $x$, $y$ lie between 0 and 1. Or, to be quite precise: $0 \le x < 1$, $0 \le y < 1$.

To effect such a multiplication we first send the multiplier $x$ into a register AR, the Arithmetic Register, which is essentially just a set of 40 flip-flops whose characteristics will be discussed below. We place the multiplicand $y$ in the Selectron Register, SR (cf. 4.9) and use the accumulator, Ac, to form and store the partial products. We propose to multiply the entire multiplicand by the successive digits of the multiplier in a serial fashion. There are, of course, two possible ways this can be done: We can either start with the digit in the lowest position (position $2^{-39}$) or in the highest position (position $2^{-1}$) and proceed successively to the left or right, respectively. There are a few advantages from our point of view in starting with the right-most digit of the multiplier. We therefore describe that scheme.

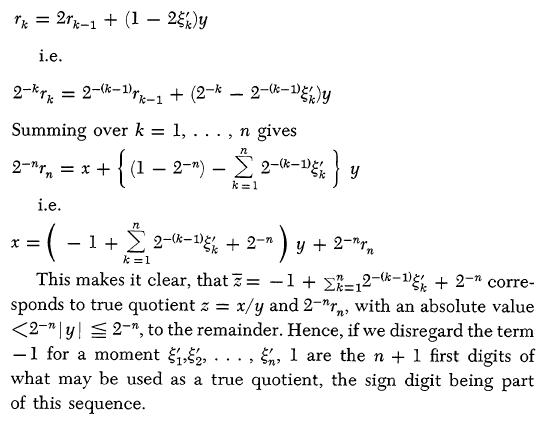

The multiplication takes place in 39 steps, which correspond to the 39

(non-sign) digits of the multiplier x = 0, x

1,x 2, . . . , x 39 = (0. x 1x 2, . . . ,x 39),

enumerated backwards: x 39, . . . ,x 2,x 1.

Assume that the k - 1 first steps (k = 1,..., 39) have already

taken place, involving multiplication of the multiplicand y with the k -

1 last digits of the multiplier: x

39, . . . , x 41-k ; and that we are now at the kth step, involving

multiplication with the kth last digit: x 40-k . Assume furthermore, that Ac now contains the

quantity Pk-1, the result of the k -

1 first steps. [This is the (k - 1)st partial product. For k

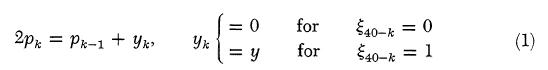

= 1 clearly P0 = 0.1 We now form

2pk = Pk-1

+ x 40-ky,

i.e.

That is, we do nothing or add y, according to whether x 40-k = 0 or 1. We can then form pk by halving 2pk.

Note that the addition of (1) produces no carry beyond the $2^0$ position, i.e. the sign digit: $0 \le p_h < 1$ is true for $h = 0$, and if it is true for $h = k - 1$, then (1) extends it to $h = k$ also, since $0 \le y_k < 1$. Hence the sum in (1) is $\ge 0$ and $< 2$, and no carries beyond the $2^0$ position arise.

Hence pk obtains from 2pk by a simple right shift, which is combined with filling in the sign digit (that is freed by this shift) with a 0. This right shift is effected by an electronic shifter that is part of Ac.

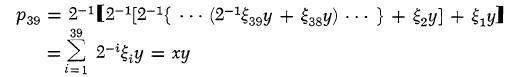

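Now, unrolling (1) over the 39 steps,

$$p_{39} = \sum_{k=1}^{39} 2^{k-40}\,x_{40-k}\,y = \sum_{i=1}^{39} 2^{-i}\,x_i\,y = xy.$$

Thus this process produces the product $xy$, as desired. Note that this $xy$ is the exact product of $x$ and $y$.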
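The 39 steps can be followed in a short sketch that keeps every partial product exactly (so no digits are dropped, unlike in Ac). The helper names `to_digits` and `multiply_positive` and the sample factors are assumptions made for the example.

```python
from fractions import Fraction

N = 39  # number of non-sign digits

def to_digits(x, n=N):
    """Digits [x_1, ..., x_n] of x in [0, 1), where x_i has value 2^-i."""
    scaled = Fraction(x) * 2**n
    assert scaled.denominator == 1 and 0 <= scaled < 2**n
    return [(int(scaled) >> (n - i)) & 1 for i in range(1, n + 1)]

def multiply_positive(x, y):
    """Form p_39 by adding 0 or y and halving, starting with the right-most digit."""
    digits = to_digits(x)
    y = Fraction(y)
    p = Fraction(0)                       # p_0 = 0
    for k in range(1, N + 1):
        digit = digits[N - k]             # x_{40-k}; the list is 0-based
        two_p = p + (y if digit else 0)   # do nothing or add y
        assert 0 <= two_p < 2             # no carry beyond the 2^0 position
        p = two_p / 2                     # right shift
    return p

x, y = Fraction(5, 8), Fraction(3, 4)
product = multiply_positive(x, y)
print(product)
```

For these factors the final partial product equals the exact product $15/32$.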

Since x and y are 39 digit binaries, their exact product xy is a 78 digit binary (we disregard the sign digit throughout). However, Ac will only hold 39 of these. These are clearly the left 39 digits of xy. The right 39 digits of xy are dropped from Ac one by one in the course of the 39 steps, or to be more specific, of the 39 right shifts. We will see later that these right 39 digits of xy should and will also be conserved (cf. the end of this section and the end of 5.12, as well as 6.6.3). The left 39 digits, which remain in Ac, should also be rounded off, but we will not discuss this matter here. (cf. loc. cit. above and 9.9, Part II).

To complete the general picture of our multiplication technique we must consider how we sense the respective digits of our multiplier. There are two schemes which come to one's mind in this connection. One is to have a gate tube associated with each flip-flop of AR in such a fashion that this gate is open if a digit is 1 and closed if it is null. We would then need a 39-stage counter to act as a switch which would successively stimulate these gate tubes to react. A more efficient scheme is to build into AR a shifter circuit which enables AR to be shifted one stage to the right each time Ac is shifted and to sense the value of the digit in the right-most flip-flop of AR. The shifter itself requires one gate tube per stage. We need in addition a counter to count out the 39 steps of the multiplication, but this can be achieved by a six stage binary counter. Thus the latter is more economical of tubes and has one additional virtue from our point of view which we discuss in the next paragraph.

The choice of 40 digits to a word (including the sign) is probably adequate for most computational problems but situations certainly might arise when we desire higher precision, i.e. words of greater length. A trivial illustration of this would be the computation of $\pi$ to more places than are now known (about 700 decimals, i.e. about 2,300 binaries). More important instances are the solutions of N linear equations in N variables for large values of N. The extra precision becomes probably necessary when N exceeds a limit somewhere between 20 and 40. A justification of this estimate has to be based on a detailed theory of numerical matrix inversion which will be given in a subsequent report. It is therefore desirable to be able to handle numbers of 39k digits and signs by means of program instructions. One way to achieve this end is to use k words to represent a 39k digit number with signs. (In this way 39 digits in each 40 digit word are used, but all sign digits excepting the first one, are apparently wasted; cf. however the treatment of double precision numbers in Chapter 9, Part II.) It is, of course, necessary in this case to instruct the machine to perform the elementary operations of arithmetic in a manner that conforms with this interpretation of k-word complexes as single numbers. (Cf. 9.8-9.10, Part II.) In order to be able to treat numbers in this manner, it is desirable to keep not 39 digits in a product, but 78; this is discussed in more detail in 6.6.3 below. To accomplish this end (conserving 78 product digits) we connect, via our shifter circuit, the right-most digit of Ac with the left-most non-sign digit of AR. Thus, when in the process of multiplication a shift is ordered, the last digit of Ac is transferred into the place in AR made vacant when the multiplier was shifted.
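The joint shifting of Ac and AR can be modelled with two integer registers. This is a sketch under stated assumptions: factors are given as non-negative 39 digit integers (the scale factor $2^{-39}$ is left implicit), and the function name `multiply_78` is hypothetical.

```python
LOW = 39   # non-sign digits per word

def multiply_78(x, y):
    """Return (Ac, AR) holding the left and right 39 digits of the product."""
    assert 0 <= x < (1 << LOW) and 0 <= y < (1 << LOW)
    ac, ar = 0, x                       # multiplier starts in AR
    for _ in range(LOW):
        if ar & 1:                      # sense the right-most flip-flop of AR
            ac += y                     # add the multiplicand; the sum stays below 2^40
        # Joint right shift: the right-most digit of Ac enters the
        # left-most non-sign digit of AR, vacated by the multiplier.
        ar = (ar >> 1) | ((ac & 1) << (LOW - 1))
        ac >>= 1
    return ac, ar

x = (1 << LOW) - 1
y = (1 << LOW) - 3
ac, ar = multiply_78(x, y)
print(((ac << LOW) | ar) == x * y)
```

Concatenating the two registers recovers all 78 digits of the product, while the multiplier itself is no longer present in AR.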

5.9. To conclude our discussion of the multiplication of positive numbers, we note this:

As described thus far, the multiplier forms the 78 digit product, $xy$, for a 39 digit multiplier $x$ and a 39 digit multiplicand $y$. We assumed $x \ge 0$, $y \ge 0$ and therefore had $xy \ge 0$, and we will only depart from these assumptions in 5.10. In addition to these, however, we also assumed $x < 1$, $y < 1$, i.e. that $x$, $y$ have their binary points both immediately right of the sign digit, which implied the same for $xy$. One might question the necessity of these additional assumptions.

Prima facie they may seem mere conventions, which affect only the mathematician's interpretation of the functioning of the machine, and not a physical feature of the machine. (Cf. the corresponding situation in addition and subtraction, in 5.7.) Indeed, if $x$ had its binary point between digits $j$ and $j + 1$ from the left (cf. the discussion of 5.7 dealing with this $j$; it also applies to $k$ below), and $y$ between $k$ and $k + 1$, then our above method of multiplication would still give the correct result $xy$, provided that the position of the binary point in $xy$ is appropriately assigned. Specifically: Let the binary point of $xy$ be between digits $l$ and $l + 1$. $x$ has the binary point between digits $j$ and $j + 1$, and its sign digit is 0, hence its range is $0 \le x < 2^{j-1}$. Similarly $y$ has the range $0 \le y < 2^{k-1}$, and $xy$ has the range $0 \le xy < 2^{l-1}$. Now the ranges of $x$ and $y$ imply that the range of $xy$ is necessarily $0 \le xy < 2^{j-1}\,2^{k-1} = 2^{j+k-2}$. Hence $l = j + k - 1$. Thus it might seem that our actual positioning of the binary point (immediately right of the sign digit, i.e. $j = k = 1$) is still a mere convention.

It is therefore important to realize that this is not so: The choices of $j$ and $k$ actually correspond to very real, physical, engineering decisions. The reason for this is as follows: It is desirable to base the running of the machine on a sole, consistent mathematical interpretation. It is therefore desirable that all arithmetical operations be performed with an identically conceived positioning of the binary point in Ac. Applying this principle to $x$ and $y$ gives $j = k$. Hence the position of the binary point for $xy$ is given by $j + k - 1 = 2j - 1$. If this is to be the same as for $x$ and $y$, then $2j - 1 = j$, i.e. $j = 1$ ensues, that is, our above positioning of the binary point immediately right of the sign digit.

There is one possible escape: To place into Ac not the left 39 digits of xy (not counting the sign digit 0), but the digits j to j + 38 from the left. Indeed, in this way the position of the binary point of xy will be (2j - 1) - (j - 1) = j, the same as for x and y.

This procedure means that we drop the left $j - 1$ and right $40 - j$ digits of $xy$ and hold the middle 39 in Ac. Note that this positioning of the binary point means that $x < 2^{j-1}$, $y < 2^{j-1}$, and that $xy$ can only be used if $xy < 2^{j-1}$. Now the assumptions secure only $xy < 2^{2j-2}$. Hence $xy$ must be $2^{j-1}$ times smaller than it might be. This is just the thing which would be secured by the vanishing of the left $j - 1$ digits that we had to drop from Ac, as shown above.

If we wanted to use such a procedure, with those dropped left $j - 1$ digits really existing, i.e. with $j \ne 1$, then we would have to make physical arrangements for their conservation elsewhere. Also the general mathematical planning for the machine would be definitely complicated, due to the physical fact that Ac now holds a rather arbitrarily picked middle stretch of 39 digits from among the 78 digits of $xy$. Alternatively, we might fail to make such arrangements, but this would make it necessary to see to it, in the mathematical planning of each problem, that all products turn out to be $2^{j-1}$ times smaller than their a priori maxima. Such an observance is not at all impossible; indeed similar things are unavoidable for the other operations. [For example, with a factor 2 in addition (of positives) or subtraction (of opposite sign quantities). Cf. also the remarks in the first part of 5.12, dealing with keeping "within range".] However, it involves a loss of significant digits, and the choice $j = 1$ makes it unnecessary in multiplication.

We will therefore make our choice j = 1, i.e. the positioning of the binary point immediately right of the sign digit, binding for all that follows.

5.10. We now pass to the case where the multiplier x and the multiplicand y may have either sign + or -, i.e. any combination of these signs.

It would not do simply to extend the method of 5.8 to include the sign digits of $x$ and $y$ also. Indeed, we assume $-1 \le x < 1$, $-1 \le y < 1$, and the multiplication procedure in question is definitely based on the $\ge 0$ interpretations of $x$ and $y$. Hence if $x < 0$, then it is really using $x + 2$, and if $y < 0$, then it is really using $y + 2$. Hence for $x < 0$, $y \ge 0$ it forms

(x + 2)y = xy + 2y

for $x \ge 0$, $y < 0$ it forms

x(y + 2) = xy + 2x

for $x < 0$, $y < 0$ it forms

(x + 2)(y + 2) = xy + 2x + 2y + 4

or since things may be taken modulo 2, xy + 2x + 2y. Hence correction terms -2y, -2x would be needed for x < 0, y < 0, respectively (either or both).

This would be a possible procedure, but there is one difficulty: As $xy$ is formed, the 39 digits of the multiplier $x$ are gradually lost from AR, to be replaced by the right 39 digits of $xy$. (Cf. the discussion at the end of 5.8.) Unless we are willing to build an additional 40 stage register to hold $x$, therefore, $x$ will not be available at the end of the multiplication. Hence we cannot use it in the correction $-2x$ of $xy$, which becomes necessary for $y < 0$.

Thus the case x < 0 can be handled along the above lines, but not the case y <0.

It is nevertheless possible to develop an adequate procedure, and we now proceed to do this. Throughout this procedure we will maintain the assumptions $-1 \le x < 1$, $-1 \le y < 1$. We proceed in several successive steps.

First: Assume that the corrections necessitated by the possibility of $y < 0$ have been taken care of. We permit therefore $y \ge 0$. We will consider the corrections necessitated by the possibility of $x < 0$.

Let us disregard the sign digit of $x$, which is 1, i.e. replace it by 0. Then $x$ goes over into $x' = x - 1$, and as $-1 \le x < 0$, this $x'$ will actually behave like $(x - 1) + 2 = x + 1$. Hence our multiplication procedure will produce $x'y = (x + 1)y = xy + y$,

and therefore a correction - y is needed at the end. (Note that we did not use the sign digit of x in the conventional way. Had we done so, then a correction -2y would have been necessary, as seen above.)

We see therefore: Consider $x \gtrless 0$. Perform first all necessary steps for forming $x'y$ ($y \gtrless 0$), without yet reaching the sign digit of $x$ (i.e. treating $x$ as if it were $\ge 0$). When the time arrives at which the digit $x_0$ of $x$ has to become effective, i.e. immediately after $x_1$ became effective, after 39 shifts (cf. the discussion near the end of 5.8), at which time Ac contains, say, $\bar{p}$ (this corresponds to the $p_{39}$ of 5.8), then form

$$\bar{\bar{p}} = \begin{cases} \bar{p} & \text{for } x_0 = 0, \\ \bar{p} - y & \text{for } x_0 = 1. \end{cases}$$

This $\bar{\bar{p}}$ is $xy$. (Note the difference between this last step, forming $\bar{\bar{p}}$, and the 39 preceding steps in 5.8, forming $p_1, p_2, \ldots, p_{39}$.)

Second: Having disposed of the possibility $x < 0$, we may now assume $x \ge 0$. With this assumption we have to treat all $y \gtrless 0$. Since $y \ge 0$ brings us back entirely to the familiar case of 5.8, we need to consider the case $y < 0$ only.

Let $y'$ be the number that obtains by disregarding the sign digit of $y$, which is 1, i.e. by replacing it by 0. Again $y'$ acts not like $y - 1$, but like $(y - 1) + 2 = y + 1$. Hence the multiplication procedure of 5.8 will produce $xy' = x(y + 1) = xy + x$, and therefore a correction $-x$ is needed. (Note that, quite similarly to what we saw in the first case above, the suppression of the sign digit of $y$ replaced the previously recognized correction $-2x$ by the present one $-x$.) As we observed earlier, this correction $-x$ cannot be applied at the end to the completed $xy'$, since at that time $x$ is no longer available. Hence we must apply the correction $-x$ digitwise, subtracting every digit at the time when it is last found in AR, and in a way that makes it effective with the proper positional value.

Third: Consider then $x = 0, x_1, x_2, \ldots, x_{39} = (0.x_1 x_2 \ldots x_{39})$. The 39 digits $x_1 \ldots x_{39}$ of $x$ are lost in the course of the 39 shifts of the multiplication procedure of 5.8, going from right to left. Thus the operation No. $k + 1$ ($k = 0, 1, \ldots, 38$; cf. 5.8) finds $x_{39-k}$ in the right-most stage of AR, uses it, and then loses it through its concluding right shift (of both Ac and AR). After this step $39 - (k + 1) = 38 - k$ further steps, i.e. shifts, follow, hence before its own concluding shift there are still $39 - k$ shifts to come. Hence the positional values are $2^{39-k}$ times higher than they will be at the end. $x_{39-k}$ should appear at the end, in the correcting term $-x$, with the sign $-$ and the positional value $2^{-(39-k)}$. Hence we may inject it during the step $k + 1$ (before its shift) with the sign $-$ and the positional value 1. That is to say, $-x_{39-k}$ in the sign digit.

This, however, is inadmissible. Indeed, $x_{39-k}$ might cause carries (if $x_{39-k} = 1$), which would have nowhere to go from the sign digit (there being no further positions to the left). This error is at its origin an integer multiple of 2, but the $39 - k$ subsequent shifts reduce its positional value $2^{39-k}$ times. Hence it might contribute to the end result any integer multiple of $2^{-(38-k)}$, and this is a genuine error.

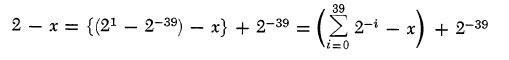

Let us therefore add $1 - x_{39-k}$ to the sign digit, i.e. 0 or 1 if $x_{39-k}$ is 1 or 0, respectively. We will show further below that with this procedure there arise no carries of the inadmissible kind. Taking this momentarily for granted, let us see what the total effect is. We are correcting not by $-x$ but by $\sum_{i=1}^{39} 2^{-i} - x = 1 - 2^{-39} - x$. Hence a final correction by $-1 + 2^{-39}$ is needed. Since this is done at the end (after all shifts), it may be taken modulo 2. That is to say, we must add $1 + 2^{-39}$, i.e. 1 in each of the two extreme positions. Adding 1 in the right-most position has the same effect as in the discussion at the end of 5.7 (dealing with the subtraction). It is equivalent to injecting a carry into the right-most stage of Ac. Adding 1 in the left-most position, i.e. to the sign digit, produces a 1, since that digit was necessarily 0. (Indeed, the last operation ended in a shift, thus freeing the sign digit, cf. below.)

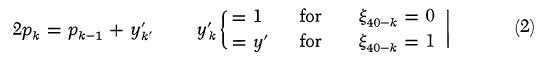

Fourth: Let us now consider the question of the carries that may arise in the 39 steps of the process described above. In order to do this, let us describe the $k$th step ($k = 1, \ldots, 39$), which is a variant of the $k$th step described for a positive multiplication in 5.8, in the same way in which we described the original $k$th step loc. cit. That is to say, let us see what the formula (1) of 5.8 has become. It is clearly $2p_k = p_{k-1} + (1 - x_{40-k}) + x_{40-k}\,y'$, i.e.

$$2p_k = p_{k-1} + y'_k, \qquad y'_k = \begin{cases} 1 & \text{for } x_{40-k} = 0, \\ y' & \text{for } x_{40-k} = 1. \end{cases} \tag{2}$$

That is, we add 1 (y's sign digit) or y' (y without its sign digit), according to whether x 40-k = 0 or 1. Then pk should obtain from 2pk again by halving.

Now the addition of (2) produces no carries beyond the $2^0$ position, as we asserted earlier, for the same reason as the addition of (1) in 5.8. We can argue in the same way as there: $0 \le p_h < 1$ is true for $h = 0$, and if it is true for $h = k - 1$, then (2) extends it to $h = k$ also, since $0 \le y'_k \le 1$. Hence the sum in (2) is $\ge 0$ and $< 2$, and no carries beyond the $2^0$ position arise.

Fifth: In the three last observations we assumed y < 0. Let us now restore the full generality of y ~ 0. We can then describe

Section 1 Processors with one address per instruction

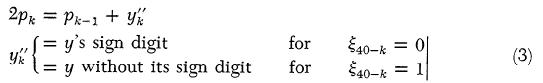

the equations (1) of 5.8 (valid for y ³ 0) and (2) above (valid for y <0) by a single formula,

Thus our verbal formulation of (2) applies here, too: We add $y$'s sign digit or $y$ without its sign, according to whether $x_{40-k} = 0$ or 1. All $p_k$ are $\ge 0$ and $< 1$, and the addition of (3) never originates a carry beyond the $2^0$ position. $p_k$ obtains from $2p_k$ by a right shift, filling the sign digit with a 0. (Cf. however, Part II, Table 2 for another sort of right shift that is desirable in explicit form, i.e. as an order.)

For $y \ge 0$, $xy$ is $p_{39}$; for $y < 0$, $xy$ obtains from $p_{39}$ by injecting a carry into the right-most stage of Ac and by placing a 1 into the sign digit in Ac.

Sixth: This procedure applies for $x \ge 0$. For $x < 0$ it should also be applied, since it makes use of $x$'s non-sign digits only, but at the end $y$ must be subtracted from the result.

This method of binary multiplication will be illustrated in some examples in 5.15.
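Pending those examples, the six steps can be summarized in a short sketch. It is an illustration under stated assumptions, not the circuit itself: partial products are kept exactly as rationals (no digits are dropped), the two end corrections for $y < 0$ are applied as additions modulo 2, and the names `split` and `multiply_signed` are hypothetical.

```python
from fractions import Fraction

N = 39  # number of non-sign digits

def split(value):
    """Return (sign digit, value with the sign digit replaced by 0)."""
    value = Fraction(value)
    assert -1 <= value < 1
    return (1, value + 1) if value < 0 else (0, value)

def multiply_signed(x, y):
    """Product of x, y in [-1, 1), formed from the non-sign digits of x."""
    y = Fraction(y)
    x_sign, x_rest = split(x)
    y_sign, y_rest = split(y)
    scaled = x_rest * 2**N
    assert scaled.denominator == 1        # x must be a 39 digit binary
    x_bits = int(scaled)
    p = Fraction(0)
    for k in range(1, N + 1):
        digit = (x_bits >> (k - 1)) & 1   # x_{40-k}, taken from the right
        # Add y's sign digit (positional value 1) or y without its sign digit.
        two_p = p + (y_rest if digit else y_sign)
        assert 0 <= two_p < 2             # no carry beyond the 2^0 position
        p = two_p / 2                     # right shift, sign digit filled with 0
    if y_sign:
        p += Fraction(1, 2**N) + 1        # carry into right-most stage, 1 into sign digit
    if x_sign:
        p -= y                            # final correction for x < 0
    p %= 2                                # the accumulator works modulo 2
    return p - 2 if p >= 1 else p         # read the left-most digit as the sign

cases = [(Fraction(5, 8), Fraction(3, 4)), (Fraction(-5, 8), Fraction(3, 4)),
         (Fraction(1, 2), Fraction(-3, 4)), (Fraction(-5, 8), Fraction(-3, 4))]
results = [multiply_signed(x, y) for x, y in cases]
print(results)
```

In each of the four sign combinations the result agrees with the ordinary product of the two factors.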

5.11. To complete our discussion of the multiplicative organs of our machine we must return to a consideration of the types of accumulators mentioned in 5.5. The static accumulator operates as an adder by simultaneously applying static voltages to its two inputs-one for each of the two numbers being added. When steady-state operation is reached the total sum is formed complete with all carries. For such an accumulator the above discussion is substantially complete, except that it should be remarked that such a circuit requires at most 39 rise times to complete a carry. Actually it is possible that the duration of these successive rises is proportional to a lower power of 39 than the first one.

Each stage of a dynamic accumulator consists of a binary counter for registering the digit and a flip-flop for temporary storage of the carry. The counter receives a pulse if a 1 is to be added in at that place; if this causes the counter to go from 1 to 0 a carry has occurred and hence the carry flip-flop will be set. It then remains to perform the carries. Each flip-flop has associated with it a gate, the output of which is connected to the next binary counter to the left. The carry is begun by pulsing all carry gates. Now a carry may produce a carry, so that the process needs to be repeated until all carry flip-flops register 0. This can be detected by means of a circuit involving a sensing tube connected to each carry flip-flop. It was shown in 5.6 that, on the average, five pulse times (flip-flop reaction times) are required for the complete carry. An alternative scheme is to connect a gate tube to each binary counter which will detect whether an incoming carry pulse would produce a carry and will, under this circumstance, pass the incoming carry pulse directly to the next stage. This circuit would require at most 39 rise times for the completion of the carry. (Actually less, cf. above.)
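The first kind of dynamic accumulator can be modelled as follows. This is a simplified sketch: all 40 counters and carry flip-flops are packed into Python integers, and the name `dynamic_add` is an assumption made for the example.

```python
N = 40                       # number of stages
MASK = (1 << N) - 1

def dynamic_add(counters, addend):
    """Return (sum modulo 2^N, number of pulsings of the carry gates)."""
    # A counter holding 1 that receives a pulse goes to 0 and sets its carry flip-flop.
    carry_ff = counters & addend
    counters ^= addend
    pulsings = 0
    while carry_ff:                            # sensing circuit: some flip-flop still holds 1
        incoming = (carry_ff << 1) & MASK      # each gate feeds the next counter to the left
        carry_ff = counters & incoming         # counters going from 1 to 0 set their flip-flop
        counters ^= incoming
        pulsings += 1
    return counters, pulsings

worst = dynamic_add((1 << 39) - 1, 1)          # 0.11...1 plus one unit in the last place
small = dynamic_add(5, 3)
print(worst[1], small)
```

The first example is the worst case of 5.5, in which the carry has to travel through 39 stages.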

At the present time the development of a static accumulator is being concluded. From preliminary tests it seems that it will add two numbers in about 5 µsec and will shift right or left in about 1 µsec.

We return now to the multiplication operation. In a static accumulator we order simultaneously an addition of the multiplicand with sign deleted or the sign of the multiplicand (cf. 5.10) and a complete carry and then a shift for each of the 39 steps. In a dynamic accumulator of the second kind just described we order in succession an addition of the multiplicand with sign deleted or the sign of the multiplicand, a complete carry, and a shift for each of the 39 steps. In a dynamic accumulator of the first kind we can avoid losing the time required for completing the carry (in this case an average of 5 pulse times, cf. above) at each of the 39 steps. We order an addition by the multiplicand with sign deleted or the sign of the multiplicand, then order one pulsing of the carry gates, and finally shift the contents of both the digit counters and the carry flip-flops. This process is repeated 39 times. A simple arithmetical analysis, which may be carried out in a later report, shows that at each one of these intermediate stages a single carry is adequate, and that a complete set of carries is needed at the end only. We then carry out the complement corrections, still without ever ordering a complete set of carry operations. When all these corrections are completed and after round-off, described below, we then order the complete carry mentioned above.

5.12. It is desirable at this point in the discussion to consider rules for rounding-off to n digits. In order to assess the characteristics of alternative possibilities for such rules properly, and in particular the role of the concept of "unbiasedness", it is necessary to visualize the conditions under which rounding-off is needed.

Every number x that appears in the computing machine is an approximation of another number x', which would have appeared if the calculation had been performed absolutely rigorously. The approximations to which we refer here are not those that are caused by the explicitly introduced approximations of the numerical-mathematical set-up, e.g. the replacement of a (continuous) differential equation by a (discrete) difference equation. The effect of such approximations should be evaluated mathematically by the person who plans the problem for the machine, and should not be a direct concern of the machine. Indeed, it has to be handled by a mathematician and cannot be handled by the machine, since its nature, complexity, and difficulty may be of any kind, depending upon the problem under consideration. The approximations which concern us here are these: Even the elementary operations of arithmetic, to which the mathematical approximation-formulation for the machine has to reduce the true (possibly transcendental) problem, are not rigorously executed by the machine. The machine deals with numbers of n digits, where n, no matter how large, has to be a fixed quantity. (We assumed for our machine 40 digits, including the sign, i.e. n = 39.) Now the sum and difference of two n-digit numbers are again n-digit numbers, but their product and quotient (in general) are not. (They have, in general, 2n or ∞ digits, respectively.) Consequently, multiplication and division must unavoidably be replaced by the machine by two different operations which must produce n digits under all conditions, and which, subject to this limitation, should lie as close as possible to the results of the true multiplication and division. One might call them pseudo-multiplication and pseudo-division; however, the accepted nomenclature terms them as multiplication and division with round-off. (We are now creating the impression that addition and subtraction are entirely free of such shortcomings. This is only true inasmuch as they do not create new digits to the right, as multiplication and division do. However, they can create new digits to the left, i.e. cause the numbers to "grow out of range". This complication, which is, of course, well known, is normally met by the planner, by mathematical arrangements and estimates to keep the numbers "within range". Since we propose to have our machine deal with numbers between -1 and 1, multiplication can never cause them to "grow out of range". Division, of course, might cause this complication, too. The planner must therefore see to it that in every division the absolute value of the divisor exceeds that of the dividend.)

Thus the round-off is intended to produce satisfactory n-digit approximations for the product xy and the quotient x/y of two n-digit numbers. Two things are wanted of the round-off: (1) The approximation should be good, i.e. its variance from the "true" xy or x/y should be as small as practicable; (2) The approximation should be unbiased, i.e. its mean should be equal to the "true" xy or x/y.

These desiderata must, however, be considered in conjunction with some further comments. Specifically: (a) x and y themselves are likely to be the results of similar round-offs, directly or indirectly inherent, i.e. x and y themselves should be viewed as unbiased n-digit approximations of "true" x' and y' values; (b) by talking of "variances" and "means" we are introducing statistical concepts. Now the approximations which we are here considering are not really of a statistical nature, but are due to the peculiarities (from our point of view, inadequacies) of arithmetic and of digital representation, and are therefore actually rigorously and uniquely determined. It seems, however, in the present state of mathematical science, rather hopeless to try to deal with these matters rigorously. Furthermore, a certain statistical approach, while not truly justified, has always given adequate practical results. This consists of treating those digits which one does not wish to use individually in subsequent calculations as random variables with equiprobable digital values, and of treating any two such digits as statistically independent (unless this is patently false).

These things being understood, we can now undertake to discuss round-off procedures, realizing that we will have to apply them to the multiplication and to the division.

Let $x = (.\xi_1 \ldots \xi_n)$ and $y = (.\eta_1 \ldots \eta_n)$ be unbiased approximations of $x'$ and $y'$. Then the "true" $xy = (.\xi_1 \ldots \xi_n \xi_{n+1} \ldots \xi_{2n})$ and the "true" $x/y = (.\omega_1 \ldots \omega_n \omega_{n+1} \omega_{n+2} \ldots)$ (this goes on ad infinitum!) are approximations of $x'y'$ and $x'/y'$. Before we discuss how to round them off, we must know whether the "true" $xy$ and $x/y$ are themselves unbiased approximations of $x'y'$ and $x'/y'$. $xy$ is indeed an unbiased approximation of $x'y'$, i.e. the mean of $xy$ is the mean of $x$ ($= x'$) times the mean of $y$ ($= y'$), owing to the independence assumption which we made above. However, if $x$ and $y$ are closely correlated, e.g. for $x = y$, i.e. for squaring, there is a bias. It is of the order of the mean square of $x - x'$, i.e. of the variance of $x$. Since $x$ has $n$ digits, this variance is about $1/2^{2n}$. (If the digits of $x'$ beyond $n$ are entirely unknown, then our original assumptions give the variance $1/(12 \cdot 2^{2n})$.) Next, $x/y$ can be written as $x \cdot y^{-1}$, and since we have already discussed the bias of the product, it suffices now to consider the reciprocal $y^{-1}$. Now if $y$ is an unbiased estimate of $y'$, then $y^{-1}$ is not an unbiased estimate of $y'^{-1}$, i.e. the mean of $y$'s reciprocal is not the reciprocal of $y$'s mean. The difference is $\sim y^{-3}$ times the variance of $y$, i.e. it is of essentially the same order as the bias found above in the case of squaring.
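The two bias statements above can be made explicit by a short second-order expansion. This is a sketch and not part of the original argument: the symbols $\varepsilon$, $\delta$, $\sigma_x^2$ and $\sigma_y^2$ are introduced here for illustration, and the errors are assumed to have zero mean and to be small compared with $x'$ and $y'$.

```latex
% Assumed notation: x = x' + \varepsilon, y = y' + \delta,
% with E[\varepsilon] = E[\delta] = 0, Var(\varepsilon) = \sigma_x^2, Var(\delta) = \sigma_y^2.
\begin{align*}
E[x^2] &= E[(x' + \varepsilon)^2] = x'^2 + 2x'\,E[\varepsilon] + E[\varepsilon^2] = x'^2 + \sigma_x^2, \\
\frac{1}{y} &= \frac{1}{y' + \delta} = \frac{1}{y'} - \frac{\delta}{y'^2} + \frac{\delta^2}{y'^3} - \cdots, \\
E\!\left[\frac{1}{y}\right] &\approx \frac{1}{y'} + \frac{\sigma_y^2}{y'^3}.
\end{align*}
% For an error uniformly distributed over an interval of length 2^{-n}:
% \sigma^2 = (2^{-n})^2 / 12 = 1/(12 \cdot 2^{2n}).
```

Thus the bias in squaring is the variance itself, and the bias in the reciprocal is the variance times $y'^{-3}$ to this order, in agreement with the orders of magnitude stated in the text.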

It follows from all this that it is futile to attempt to avoid biases of the order of magnitude $1/2^{2n}$ or less. (The factor 1/12 above may seem to be changing the order of magnitude in question. However, it is really the square root of the variance which matters and $\sqrt{1/12} \approx 0.3$ is a moderate factor.) Since we propose to use $n = 39$, therefore $1/2^{78}$ ($\approx 3 \times 10^{-24}$) is the critical case. Note that this possible bias level is $1/2^{39}$ ($\approx 2 \times 10^{-12}$) times our last significant digit. Hence we will look for round-off rules to $n$ digits for the "true" $xy = (.\xi_1 \ldots \xi_n \xi_{n+1} \ldots \xi_{2n})$ and $x/y = (.\omega_1 \ldots \omega_n \omega_{n+1} \omega_{n+2} \ldots)$. The desideratum (1) which we formulated previously, that the variance should be small, is still valid. The desideratum (2), however, that the bias should be zero, need, according to the above, only be enforced up to terms of the order $1/2^{2n}$.

The round-off procedures, which we can use in this connection, fall into two broad classes. The first class is characterized by its ignoring all digits beyond the nth, and even the nth digit itself, which it replaces by a 1. The second class is characterized by the procedure of adding one unit in the (n + 1)st digit, performing the carries which this may induce, and then keeping only the n first digits.

When applied to a number of the form $(.v_1 \ldots v_n v_{n+1} v_{n+2} \ldots)$ (ad infinitum!), the effects of either procedure are easily estimated. In the first case we may say we are dealing with $(.v_1 \ldots v_{n-1})$ plus a random number of the form $(.0 \ldots 0 v_n v_{n+1} v_{n+2} \ldots)$, i.e. random in the interval $(0, 1/2^{n-1})$. Comparing with the rounded-off $(.v_1 v_2 \ldots v_{n-1} 1)$, we therefore have a difference random in the interval $(-1/2^n, 1/2^n)$. Hence its mean is 0 and its variance $1/(3 \cdot 2^{2n})$. In the second case we are dealing with $(.v_1 \ldots v_n)$ plus a random number of the form $(.0 \ldots 0 0 v_{n+1} v_{n+2} \ldots)$, i.e. random in the interval $(0, 1/2^n)$. The "rounded-off" value will be $(.v_1 \ldots v_n)$ increased by 0 or by $1/2^n$, according to whether the random number in question lies in the interval $(0, 1/2^{n+1})$ or in the interval $(1/2^{n+1}, 1/2^n)$. Hence comparing with the "rounded-off" value, we have a difference random in the intervals $(0, 1/2^{n+1})$ and $(0, -1/2^{n+1})$, i.e. in the interval $(-1/2^{n+1}, 1/2^{n+1})$. Hence its mean is 0 and its variance $1/(12 \cdot 2^{2n})$.
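Both variances follow from the variance of a difference distributed uniformly over a symmetric interval. The following sketch spells this out; the symbols $\varepsilon$ and $a$ are introduced here for illustration only.

```latex
% Assumed notation: \varepsilon is uniformly distributed on (-a, a).
\begin{align*}
E[\varepsilon] &= \frac{1}{2a}\int_{-a}^{a} t\,dt = 0, \qquad
\mathrm{Var}(\varepsilon) = \frac{1}{2a}\int_{-a}^{a} t^2\,dt = \frac{a^2}{3}, \\
\text{first class: } a = \frac{1}{2^{n}} &\;\Rightarrow\; \mathrm{Var}(\varepsilon) = \frac{1}{3 \cdot 2^{2n}}, \\
\text{second class: } a = \frac{1}{2^{n+1}} &\;\Rightarrow\; \mathrm{Var}(\varepsilon) = \frac{1}{3 \cdot 2^{2n+2}} = \frac{1}{12 \cdot 2^{2n}}.
\end{align*}
```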

If the number to be rounded-off has the form $(.v_1 \ldots v_n v_{n+1} v_{n+2} \ldots v_{n+p})$ ($p$ finite), then these results are somewhat affected. The order of magnitude of the variance remains the same; indeed for large $p$ even its relative change is negligible. The mean difference may deviate from 0 by amounts which are easily estimated to be of the order $1/2^n \cdot 1/2^p = 1/2^{n+p}$.
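The effect of a finite tail can be checked by exhaustive enumeration for a small p. The sketch below is illustrative only: the function names are hypothetical, the discarded digits are treated as equiprobable as assumed above, the difference is taken as rounded value minus true value, and all results are expressed in units of the last kept digit, i.e. $1/2^n$.

```python
from fractions import Fraction

def force_last_digit_to_one(low, p):
    # First class: ignore everything beyond digit n and set digit n itself to 1.
    # `low` is digit n followed by the p tail digits, read as an integer.
    return 1 << p

def add_half_and_truncate(low, p):
    # Second class: add one unit in digit n+1, let the carry propagate, drop the tail.
    # `low` is the p tail digits only; a result of 1 << p is a carry into digit n.
    return ((low + (1 << (p - 1))) >> p) << p

def error_stats(round_fn, width, p):
    # Exact mean and variance of (rounded - true) over every `width`-digit input,
    # measured in units of the last kept digit (2**-n).
    unit = 1 << p
    errors = [Fraction(round_fn(v, p) - v, unit) for v in range(1 << width)]
    mean = sum(errors) / len(errors)
    variance = sum((e - mean) ** 2 for e in errors) / len(errors)
    return mean, variance

p = 8
mean1, var1 = error_stats(force_last_digit_to_one, p + 1, p)
mean2, var2 = error_stats(add_half_and_truncate, p, p)
print(mean1, float(var1))
print(mean2, float(var2))
```

Under these assumptions both means come out as $1/2^{p+1}$ of the last digit, i.e. of the order $1/2^{n+p}$, while the variances differ from 1/3 and 1/12 only by a relative amount that vanishes as p grows.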

In division we have the first situation, $x/y = (.\omega_1 \ldots \omega_n \omega_{n+1} \omega_{n+2} \ldots)$, i.e. $p$ is infinite. In multiplication we have the second one, $xy = (.\xi_1 \ldots \xi_n \xi_{n+1} \ldots \xi_{2n})$, i.e. $p = n$. Hence for the division both methods are applicable without modification. In multiplication a bias of the order of $1/2^{2n}$ may be introduced. We have seen that it is pointless to insist on removing biases of this size. We will therefore use the unmodified methods in this case, too.

It should be noted that the bias in the case of multiplication can be removed in various ways. However, for the reasons set forth above, we shall not complicate the machine by introducing such corrections.

Thus we have two standard "round-off" methods, both unbiased to the extent to which we need this, and with the variances $1/(3 \cdot 2^{2n})$ and $1/(12 \cdot 2^{2n})$, that is, with the dispersions $(1/\sqrt{3})(1/2^n) = 0.58$ times the last digit and $(1/(2\sqrt{3}))(1/2^n) = 0.29$ times the last digit. The first one requires no carry facilities, the second one requires them.

Inasmuch as we propose to form the product x'y' in the accumulator, which has carry facilities, there is no reason why we should not adopt the rounding scheme described above which has the smaller dispersion, i.e. the one which may induce carries. In the case, however, of division we wish to avoid schemes leading to carries since we expect to form the quotient in the arithmetic register, which does not permit of carry operations. The scheme which we accordingly adopt is the one in which $\omega_n$ is replaced by 1. This method has the decided advantage that it enables us to write down the approximate quotient as soon as we know its first (n - 1) digits. It will be seen in 5.14 and 6.6.4 below that our procedure for forming the quotient of two numbers will always lead to a result that is correctly rounded in accordance with the decisions just made. We do not consider as serious the fact that our rounding scheme in the case of division has a dispersion twice as large as that in multiplication since division is a far less frequent operation.

A final remark should be made in connection with the possible, occasional need of carrying more than n = 39 digits. Our logical control is sufficiently flexible to permit treating k (= 2, 3, . . .) words as one number, and thus effecting n = 39k. In this case the round-off has to be handled differently, cf. Chapter 9, Part II. The multiplier produces all 78 digits of the basic 39 by 39 digit multiplication: The first 39 in the Ac, the last 39 in the AR. These must then be manipulated in an appropriate manner. (For details, cf. 6.6.3 and 9.9-9.10, Part II.) The divider works for 39 digits only: In forming x/y, it is necessary, even if x and y are available to 39k digits, to use only 39 digits of each, and a 39 digit result will appear. It seems most convenient to use this result as the first step of a series of successive approximations. The successive improvements can then be obtained by various means. One way consists of using the well known iteration formula (cf. 5.4). For k = 2 one such step will be needed, for k = 3, 4, two steps, for k = 5, 6, 7, 8 three steps, etc. An alternative procedure is this: Calculate the remainder, using the approximate, 39 digit, quotient and the complete, 39k digit, divisor and dividend. Divide this again by the approximate, 39 digit, divisor, thus obtaining essentially the next 39 digits of the quotient. Repeat this procedure until the full 39k desired digits of the quotient have been obtained.
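The alternative procedure based on remainders can be sketched with exact rational arithmetic. This is an illustration under stated assumptions, not the machine's actual routine: the names `truncate` and `divide_by_remainders` are hypothetical, the operands are taken to be positive with the divisor at least 1/2 and larger than the dividend, each partial quotient is simply truncated rather than rounded as in 5.12, and the first pass already uses the full dividend.

```python
from fractions import Fraction
from math import floor

N = 39  # binary digits per word, as in the text

def truncate(value, bits):
    # Keep `bits` binary digits after the point (rounding toward minus infinity).
    scale = 1 << bits
    return Fraction(floor(value * scale), scale)

def divide_by_remainders(x, y, k):
    """Approximate x / y to about 39k digits using only a 39-digit divisor."""
    y_short = truncate(y, N)           # the approximate, 39 digit, divisor
    q = Fraction(0)
    for step in range(1, k + 1):
        remainder = x - q * y          # uses the complete divisor and dividend
        # Dividing the remainder by the short divisor supplies roughly
        # the next 39 digits of the quotient.
        q += truncate(remainder / y_short, N * step)
    return q

k = 3
x = truncate(Fraction(1, 3), N * k)
y = truncate(Fraction(5, 7), N * k)
q = divide_by_remainders(x, y, k)
print(float(abs(q - x / y)))
```

Because the short divisor differs from the full one by a relative amount of about $1/2^{38}$ under these assumptions, each pass shrinks the error of the quotient by roughly that factor, which is why one pass per word suffices.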

5.13. We might mention at this time a complication which arises when a floating binary point is introduced into the machine. The operation of addition which usually takes at most 1/10 of a