One of Forty Source Images

Imagine taking a virtual tour of a place such as a beautiful estate or a historic district. Many methods have been used to represent the scene and give the tour: a set of photographs, a single video recording, nonlinear video segments, and panoramic images. All of these techniques record and play back imagery acquired from the actual scene, but they prevent the viewer from moving freely and viewing the scene from any position. Another approach, a walkthrough of a hand-modeled geometric representation of the environment, allows free exploration of the scene but lacks the realism of the recording-based methods, for two reasons. First, every detail in a hand-built model must be noticed and then modeled by a person, which is an inherently limited endeavor. Second, renderings of the model are limited by the current state of the art in interactive computer graphics.

One great hope for image-based rendering is that it can combine the best aspects of these two approaches to virtual scene exploration, yielding a compelling, detailed recreation of a scene that the viewer can explore freely. Imagine, then, another video tour of a beautiful place. The imagery is as good as a video recording of the scene (hopefully better), but you can steer the view anywhere you want to explore.

Our basic approach is to acquire images with depth from several places around the environment, process these images into an image-based model of the scene, and then render from this model on 3D graphics hardware using appropriate techniques.
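To make "images with depth" concrete, here is a minimal Python sketch of one possible representation: a color image plus a per-pixel depth and the pose of the sensor that captured it, with every pixel back-projected into a shared world frame so that views from several places can be pooled. The `DepthImage` class, the pinhole camera model, the principal point at the image center, and `merge_views` are all illustrative assumptions rather than the project's actual data structures; the real range scans are panoramic, not pinhole.

```python
from dataclasses import dataclass


@dataclass
class DepthImage:
    """Hypothetical 'image with depth': color and depth per pixel plus sensor pose."""
    width: int
    height: int
    focal: float      # focal length in pixels (assumed pinhole model)
    rotation: list    # 3x3 camera-to-world rotation, nested lists, row-major
    position: tuple   # camera center in world coordinates (meters)
    rgb: list         # rgb[v][u] -> (r, g, b)
    depth: list       # depth[v][u] -> depth along the optical axis (m); 0 = no sample

    def pixel_to_world(self, u, v):
        """Back-project pixel (u, v) to a 3D point in the shared world frame."""
        z = self.depth[v][u]
        # Camera-space coordinates; principal point assumed at the image center.
        x = (u - self.width / 2) * z / self.focal
        y = (v - self.height / 2) * z / self.focal
        cam = (x, y, z)
        R, c = self.rotation, self.position
        # Rotate into the world frame, then translate by the camera center.
        return tuple(sum(R[i][j] * cam[j] for j in range(3)) + c[i] for i in range(3))


def merge_views(views):
    """Pool colored world-space points from every view: a crude image-based model."""
    points = []
    for view in views:
        for v in range(view.height):
            for u in range(view.width):
                if view.depth[v][u] > 0:  # skip pixels with no depth sample
                    points.append((view.pixel_to_world(u, v), view.rgb[v][u]))
    return points
```

A point cloud like this is only a starting point; the processing step described on this page goes further and decides which image fragments should represent each surface.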


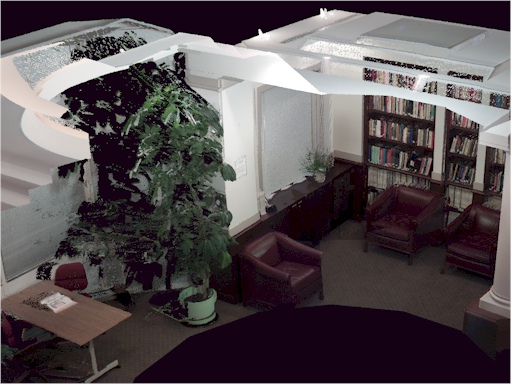

A View Created Using Our Method

The range data is acquired with a scanning laser rangefinder built by Lars Nyland. Each scan contains approximately 10 million range samples, taken at an angular resolution of 25 samples per degree with an accuracy of about 6 mm. We then take color images with a high-resolution digital camera (1728 × 1152 pixels) and register them with the laser scans. We repeat this acquisition process from several locations in the scene and register the scans from these locations with one another. The acquired scans total roughly one gigabyte per room, and this data must be processed before it can be rendered.
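At 25 samples per degree, neighboring samples are 0.04° apart, so on a surface facing the scanner at distance d they land about d × 0.04 × π/180 ≈ 0.000698 d apart: roughly 3.5 mm at 5 m, which is finer than the quoted 6 mm accuracy. The Python sketch below works through that arithmetic, converts one scan sample to a 3D point, and projects such a point into a 1728 × 1152 color image, which is one way to look up a color for it after registration. The row/column-to-angle layout, the starting elevation `elev_start_deg`, the pinhole camera model, and the rotation/translation parameters are hypothetical assumptions, not details of the actual scanner or registration procedure.

```python
import math

SAMPLES_PER_DEGREE = 25                              # from the text
STEP_RAD = math.radians(1.0 / SAMPLES_PER_DEGREE)    # 0.04 degrees, in radians
IMAGE_W, IMAGE_H = 1728, 1152                        # camera resolution from the text


def sample_spacing(distance_m):
    """Approximate gap (m) between neighboring samples on a surface facing the scanner."""
    return distance_m * STEP_RAD  # small-angle arc length


def scan_sample_to_point(col, row, range_m, elev_start_deg=-45.0):
    """Convert one range sample to Cartesian scanner coordinates.

    Hypothetical layout: columns sweep azimuth starting at 0 degrees,
    rows sweep elevation upward starting at elev_start_deg.
    """
    az = col * STEP_RAD
    el = math.radians(elev_start_deg) + row * STEP_RAD
    x = range_m * math.cos(el) * math.cos(az)
    y = range_m * math.cos(el) * math.sin(az)
    z = range_m * math.sin(el)
    return (x, y, z)


def project_to_camera(p, rotation, translation, focal_px):
    """Project a scanner-space point into the color image (assumed pinhole model).

    rotation (3x3) and translation (3-vector) map scanner coordinates into
    camera coordinates, with the camera looking down +z.
    Returns the (u, v) pixel position, or None if the point is not visible.
    """
    pc = [sum(rotation[i][j] * p[j] for j in range(3)) + translation[i] for i in range(3)]
    if pc[2] <= 0:
        return None  # point is behind the camera
    u = focal_px * pc[0] / pc[2] + IMAGE_W / 2
    v = focal_px * pc[1] / pc[2] + IMAGE_H / 2
    if 0 <= u < IMAGE_W and 0 <= v < IMAGE_H:
        return (u, v)
    return None  # projects outside the image
```

For example, `sample_spacing(5.0)` is about 0.0035 m. Occlusion is ignored here; a real coloring step would also need to check that the camera actually sees the point.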

Once we have processed the images to choose which image fragments represent each surface in the environment, we are ready to render the scene. We render on high-end 3D graphics hardware: normally either PixelFlow, a graphics supercomputer designed here in our department, or Evans, a 32-processor SGI Origin 2000 RealityMonster. A lower-resolution version of the scene can also be rendered on a PC.
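The page does not say how fragments are chosen, so the following Python sketch shows only one plausible heuristic and should not be read as the method used here: for each surface patch, prefer the source view that sampled it most densely. A patch at distance d, seen at angle θ from its normal, covers a solid angle proportional to cos θ / d², so that ratio serves as the score. The function names are hypothetical, the normal is assumed to be unit length, and occlusion is ignored.

```python
import math


def sampling_score(surface_point, surface_normal, view_position):
    """Hypothetical quality score, proportional to sample density: cos(theta) / d^2.

    surface_normal is assumed to be unit length. Returns 0 if the surface
    faces away from the view. Occlusion is not tested.
    """
    to_view = [view_position[i] - surface_point[i] for i in range(3)]
    d = math.sqrt(sum(c * c for c in to_view))
    if d == 0:
        return 0.0
    cos_theta = sum(surface_normal[i] * to_view[i] for i in range(3)) / d
    if cos_theta <= 0:
        return 0.0  # surface faces away from this view
    return cos_theta / (d * d)


def choose_source_view(surface_point, surface_normal, view_positions):
    """Index of the best-scoring view for this patch, or None if no view sees it."""
    best, best_score = None, 0.0
    for i, pos in enumerate(view_positions):
        s = sampling_score(surface_point, surface_normal, pos)
        if s > best_score:
            best, best_score = i, s
    return best
```

For a patch at the origin facing +z, a view 2 m straight above it beats one 4 m above it (scores 1/4 versus 1/16), and a view below the surface is never chosen.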

PixelFlow and Evans (our SGI RealityMonster)

See my home page: David K. McAllister

Fellowship from

Maintained by David K. McAllister

Last Modified 21 Feb 1999