Ideas for Independent Research Projects (COMP 991)

This web page is intended for first- and second-year graduate students who are looking for ideas or advisors for independent research projects. Here, I describe the type of research we conduct and provide a sample list of well-defined ideas for independent research projects (COMP 991). Most of these sample projects are scoped to be completed within 2-3 semesters, but are likely to lead to other interesting issues that can also be pursued as a PhD dissertation.

The list below is merely intended to help prospective students who do not already have research ideas they would like to pursue. If you have other interests in the general area of Networks and/or Distributed Systems, feel free to email me or talk to me.

Research Theme:

TCP is the dominant transport protocol used in the Internet. The most useful service semantics provided by TCP are "reliability" and flow control. To avoid congestion collapse in the Internet, TCP also incorporates mechanisms for "congestion control". While this mix of mechanisms seems to work well for traditional applications such as the web, which is characterized by short TCP transfers, it is not clear how well these mechanisms work for newer applications (such as large file transfers) and high-speed networks. The goal of our research is to understand how well traditional TCP mechanisms scale to these newer requirements, and to explore the design of alternate mechanisms where the traditional ones fail.

Over the past few years, we've been involved in several projects related to the design of scalable monitoring infrastructures, accurate probing techniques, and passive analysis of traces of real Internet sessions. Details about these projects can be found here.
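To make the scalability concern concrete, here is a commonly cited back-of-the-envelope calculation, written as a small sketch. It assumes Reno-style behavior (the window halves on a single loss, then grows by one segment per RTT), a window equal to the bandwidth-delay product, and 1500-byte segments; the 10 Gbit/s, 100 ms path is only an illustrative choice.

```python
def aimd_recovery_time(capacity_bps: float, rtt_s: float, segment_bytes: int = 1500) -> tuple[float, float]:
    """Return (window_in_segments, seconds_to_recover) for Reno-style AIMD.

    Assumptions: the window needed to fill the pipe equals the bandwidth-delay
    product, a single loss halves the window, and congestion avoidance then
    adds one segment per RTT (no slow start, no further losses).
    """
    window_segments = capacity_bps * rtt_s / (segment_bytes * 8)
    rtts_to_recover = window_segments / 2  # climb from W/2 back to W at +1 segment per RTT
    return window_segments, rtts_to_recover * rtt_s


if __name__ == "__main__":
    # Illustrative path (assumption): 10 Gbit/s bottleneck, 100 ms RTT.
    window, seconds = aimd_recovery_time(10e9, 0.100)
    print(f"window ~ {window:,.0f} segments; recovery ~ {seconds / 60:.1f} minutes")
```

For this path the window is about 83,333 segments, and recovering from one loss takes roughly 41,667 RTTs, i.e. about 69 minutes. Because recovery time grows linearly with the bandwidth-delay product, standard additive increase scales poorly to high-speed paths.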

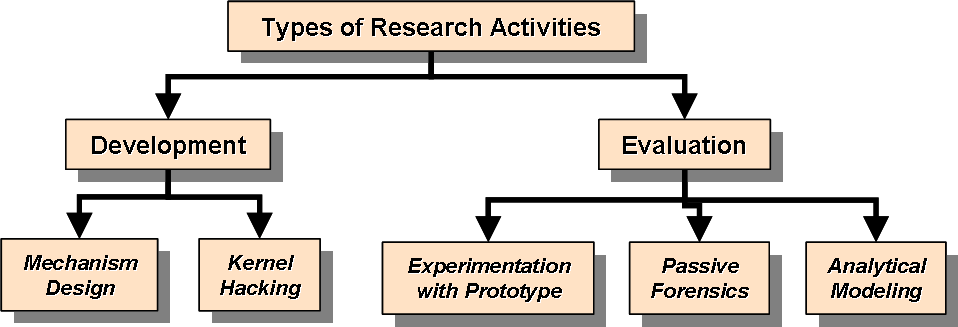

Most of these projects involve several research skills, as illustrated in the figure: analytical modeling, mechanism design, passive analysis, prototype implementation, and experimentation.


Suggested Topics for Independent Research Projects (COMP 991):

With the above-described research theme in mind, the following research problems are of immediate interest (and build on our recent work in this area). Some of these projects are smaller in scope than others and would be pursued in collaboration with existing PhD students. All of these require good experimental and analytical skills.

A study of the impact of application-layer, transport-layer, and network-layer factors on Internet traffic burstiness:

The issue of provisioning Internet routers with small buffers is receiving considerable attention in the networking research community. Most existing work, though, studies only the interaction between the TCP transport protocol and router queues. We believe that several other factors, including the application/user data-generation behavior, the size of data transferred, the network topology, and the level of traffic aggregation, can significantly influence traffic burstiness as well. In this project, we plan to experimentally study the impact of each of these factors.

Specifically, we will use an extensive lab testbed to recreate Internet-derived traffic. We will then vary several application-layer, transport-layer, and network-layer factors in a controlled manner and study their impact on router queues.
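Wavelet-based analysis is expected to play a key role in quantifying burstiness in this project. As a hedged illustration only (not the group's actual tooling), the sketch below computes a Haar-wavelet energy spectrum of a time series of per-bin packet or byte counts; the input format and function name are assumptions. For each dyadic scale it averages the squared detail coefficients. Because the transform is orthonormal, independent counts give a roughly flat curve of log2(energy) versus scale, while positively correlated (e.g. long-range-dependent) traffic makes the energy grow with scale, which is one way to see at which timescales a trace is bursty.

```python
import math
import random
from typing import Optional


def haar_energy_spectrum(counts: list[float], max_scale: Optional[int] = None) -> list[float]:
    """Mean squared Haar detail coefficient at dyadic scales j = 1, 2, ...

    Scale j compares adjacent blocks of 2**(j-1) bins. The transform is
    orthonormal, so i.i.d. counts give roughly equal energy at every scale.
    A trailing sample without a partner is dropped at each level.
    """
    approx = [float(c) for c in counts]
    energies: list[float] = []
    while len(approx) >= 2 and (max_scale is None or len(energies) < max_scale):
        n_pairs = len(approx) // 2
        details = [(approx[2 * k] - approx[2 * k + 1]) / math.sqrt(2) for k in range(n_pairs)]
        approx = [(approx[2 * k] + approx[2 * k + 1]) / math.sqrt(2) for k in range(n_pairs)]
        energies.append(sum(d * d for d in details) / n_pairs)
    return energies


if __name__ == "__main__":
    # Synthetic i.i.d. counts, used only to show the flat reference curve.
    random.seed(1)
    iid_counts = [random.gauss(100.0, 10.0) for _ in range(4096)]
    for j, energy in enumerate(haar_energy_spectrum(iid_counts, max_scale=8), start=1):
        print(f"scale {j}: log2(energy) = {math.log2(energy):.2f}")
```

Comparing such curves for traces collected while a single factor is varied (e.g. the level of aggregation) is one possible way to attribute changes in burstiness to that factor.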

Research-components/open-issues in this project:

- The primary issue to be addressed in this project is that of designing an empirical methodology to isolate the impact of each of the factors we are interested in. The primary constraint would be to do so for each factor while retaining realism in the way the other factors are incorporated.

- A second issue would be to design metrics and analysis techniques that can effectively quantify the impact of each factor. Based on our past work, we expect wavelet-based analysis to play a crucial role in this.


Study the interaction between different congestion-control protocols and design mechanisms that facilitate their co-existence:

The design of congestion control mechanisms for high-speed networks is a very active area of research. While several new protocols have been proposed in the recent literature, each of them relies on a different combination of congestion-detection and congestion-avoidance mechanisms. This raises the thorny question of how well these protocols would co-exist if deployed simultaneously on a network (which is likely to be the case in the future). Unfortunately, this question has not been adequately addressed by the research community.

In this research project, we address this question by studying the interaction between different congestion-detection and congestion-avoidance mechanisms.
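A toy model can hint at why co-existence is a concern even before any testbed experiments. The sketch below, in the spirit of the classic Chiu-Jain AIMD analysis, lets flows with different additive-increase and multiplicative-decrease parameters share one bottleneck. The synchronized-loss rule, equal RTTs, the absence of queueing, and all parameter values are assumptions; the model also ignores differences in how protocols *detect* congestion (loss vs. delay), which is central to this project.

```python
from dataclasses import dataclass


@dataclass
class AimdFlow:
    increase: float  # segments added per RTT when no loss is seen
    decrease: float  # multiplicative factor applied on loss (0.5 is Reno-like)
    window: float = 1.0


def simulate(flows: list[AimdFlow], capacity: float, rounds: int) -> list[float]:
    """Average window of each flow over `rounds` RTTs in a toy synchronized-loss model.

    Assumptions: one bottleneck of `capacity` segments per RTT, equal RTTs,
    and every flow sees a loss in any round where the aggregate window
    exceeds capacity.
    """
    totals = [0.0] * len(flows)
    for _ in range(rounds):
        if sum(f.window for f in flows) > capacity:
            for f in flows:
                f.window *= f.decrease
        else:
            for f in flows:
                f.window += f.increase
        for i, f in enumerate(flows):
            totals[i] += f.window
    return [t / rounds for t in totals]


def jain_index(xs: list[float]) -> float:
    """Jain's fairness index: 1.0 means perfectly equal shares, 1/n is the worst case."""
    return sum(xs) ** 2 / (len(xs) * sum(x * x for x in xs))


if __name__ == "__main__":
    reno_like = AimdFlow(increase=1.0, decrease=0.5)
    aggressive = AimdFlow(increase=8.0, decrease=0.875)  # hypothetical "high-speed" parameters
    shares = simulate([reno_like, aggressive], capacity=1000.0, rounds=20000)
    print("average windows:", [round(s, 1) for s in shares])
    print("Jain's index:", round(jain_index(shares), 3))
```

With identical parameters the flows share the link equally, whereas the more aggressive parameter set captures most of the capacity; an experimental study would check whether, and under which conditions, real protocols behave similarly.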

Research-components/open-issues in this project:

- The first step of this project is likely to be an experimental study of the interaction between different congestion-control protocols/mechanisms. This would help us understand the network and traffic conditions under which the protocols can or cannot peacefully co-exist.

- The next step would be to characterize analytically the set of conditions observed above. The hope is that this effort may also lead to new principles for designing congestion-control mechanisms that can co-exist peacefully with others under a wide range of conditions.


Study the feasibility of using FPGAs for implementing critical functions of high-speed protocols in network cards:

Several high-speed transport protocols (including one being designed in our group) rely on fine-grained delay and inter-packet spacing information to estimate quantities like the spare bandwidth on a path. Unfortunately, for end-to-end capacities in the gigabit range, current PC platforms can hardly provide accurate, predictable, and fine-grained timestamping.

In this project, we will study the feasibility of relying on specially designed FPGA-based network interface cards to achieve timestamping accuracy on the order of a few microseconds.
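A short calculation shows why microsecond-level accuracy matters at gigabit speeds. The sketch below assumes 1500-byte packets and a simple packet-pair estimate (rate = packet size / inter-arrival gap), then asks how much a 1 µs timestamping error distorts that estimate. The function names are illustrative, not taken from any existing tool.

```python
def serialization_time_us(packet_bytes: int, link_bps: float) -> float:
    """Time, in microseconds, to transmit one packet on a link of the given speed."""
    return packet_bytes * 8 / link_bps * 1e6


def rate_from_gap_bps(packet_bytes: int, gap_us: float) -> float:
    """Rate implied by two back-to-back packets whose arrivals are `gap_us` apart."""
    return packet_bytes * 8 / (gap_us * 1e-6)


if __name__ == "__main__":
    size = 1500  # bytes; a full Ethernet-sized packet (assumption)
    for link in (1e9, 10e9):
        gap = serialization_time_us(size, link)
        # Suppose the receiver's timestamp for the second packet is 1 microsecond late.
        estimate = rate_from_gap_bps(size, gap + 1.0)
        error_pct = abs(estimate - link) / link * 100
        print(f"{link / 1e9:.0f} Gbit/s: gap {gap:.1f} us, 1 us error -> {error_pct:.0f}% rate error")
```

Under these assumptions, the gap between packets is 12 µs at 1 Gbit/s and 1.2 µs at 10 Gbit/s, so a 1 µs error distorts the estimate by roughly 8% and roughly 45%, respectively.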

Research-components/open-issues in this project:

- This project would investigate data structures and streamlined code that facilitate accurate timestamping. It is also likely to yield guidelines on the memory and computation limits that future high-speed protocols ought to satisfy. This project would be done in collaboration with researchers at RENCI.

- A second issue that would need to be addressed is the redesign of a traditional software-only protocol stack so that it can efficiently exploit the above-mentioned NIC-based accurate timestamping. This would be explored in the context of the RAPID congestion-control protocol being designed in our group.


Real-time passive TCP performance analysis tool:

We have recently developed a passive tool for analyzing traces of real-world TCP connections. The tool is currently capable of accurately estimating TCP RTTs and losses. In addition, it reports advertised-window limits and packets in flight. We have used this tool extensively on traces of millions of TCP connections in order to: (i) study the variability in TCP RTT, (ii) study the efficacy of TCP's loss detection/recovery mechanisms, and (iii) study the efficacy of delay-based congestion predictors.

The above tool currently works offline: it first extracts a per-connection trace from an aggregate trace and then analyzes it. We are now interested in developing an online version of this tool that can observe an ongoing TCP transfer and help identify its performance-limiting factors in real time. Such a tool would be immensely useful in helping Internet applications enhance their performance. Specifically, as soon as a connection starts (or requests monitoring), the tool observes its packets and estimates TCP state, such as the advertised window and congestion window, on the fly. The tool then infers whether the network, the end-system configuration, or the protocol version is limiting the connection's transfer rate. If it is the network, the tool sends out active probes to locate the problem. Otherwise, it reports how the end system can be reconfigured.
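As a rough illustration of the kind of per-connection state an online version might keep, the sketch below tracks, for each flow, the highest sequence number sent, the highest cumulative ACK, the latest advertised window, and RTT samples obtained by matching ACKs to earlier data segments. It is a hypothetical sketch, not the group's tool: the class names, the 4-tuple key, and the simplifications (monitor near the sender, both directions visible, no sequence wraparound, no window scaling, SACK, or timestamp options) are all assumptions. In the spirit of Karn's algorithm, samples that a retransmission could make ambiguous are discarded.

```python
from dataclasses import dataclass, field

FlowKey = tuple[str, int, str, int]  # (sender IP, sender port, receiver IP, receiver port)


@dataclass
class FlowState:
    highest_seq_end: int           # highest seq + payload length sent so far
    highest_ack: int               # highest cumulative ACK seen from the receiver
    advertised_window: int = 0     # receiver window from the latest ACK (scaling ignored)
    pending: dict[int, float] = field(default_factory=dict)  # seq_end -> send time
    rtt_samples: list[float] = field(default_factory=list)

    @property
    def bytes_in_flight(self) -> int:
        return max(0, self.highest_seq_end - self.highest_ack)


class PassiveTcpMonitor:
    """Toy online monitor; ACKs must be reported under the data direction's key."""

    def __init__(self) -> None:
        self.flows: dict[FlowKey, FlowState] = {}

    def on_data(self, key: FlowKey, seq: int, length: int, ts: float) -> None:
        flow = self.flows.get(key)
        if flow is None:
            # Treat the first observed sequence number as already acknowledged.
            flow = self.flows[key] = FlowState(highest_seq_end=seq, highest_ack=seq)
        seq_end = seq + length
        if seq_end > flow.highest_seq_end:
            flow.highest_seq_end = seq_end
            flow.pending[seq_end] = ts
        else:
            # Retransmission: drop pending samples at or beyond the resent range.
            for end in [e for e in flow.pending if e > seq]:
                del flow.pending[end]

    def on_ack(self, key: FlowKey, ack: int, window: int, ts: float) -> None:
        flow = self.flows.get(key)
        if flow is None:
            return
        flow.advertised_window = window
        if ack > flow.highest_ack:
            sent = flow.pending.get(ack)
            if sent is not None:
                flow.rtt_samples.append(ts - sent)
            flow.highest_ack = ack
            for end in [e for e in flow.pending if e <= ack]:
                del flow.pending[end]
```

A real online tool would also need to expire idle flows and bound the size of `pending`, since it may track a very large number of connections at once.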

Research-components/open-issues in this project:

- The primary open issue in this project is designing analysis techniques to identify the performance-limiting factor for a TCP session simply by observing its packet trace.

- Also, an online tool would need to maintain in memory the state of potentially hundreds of thousands of connections. Hence, per-flow data structures and lookups must be designed to be efficient. This project is likely to build upon an initial codebase developed by Don Smith for an online version of TMIX.


How is the available-bandwidth (AB) of an Internet path related to the end-to-end delay and loss rate?

Internet applications vary widely in the support they require from the network. While some applications, such as online games, have stringent requirements for low response times but do not need much bandwidth, other applications, such as scientific computing and HDTV streaming, require large bandwidth for transferring massive amounts of data. In this project, we evaluate the applicability of traditional performance metrics, such as end-to-end delay and loss rates, for emerging high-bandwidth applications. Specifically, we are interested in answering the question: do high-bandwidth paths always have low delays and loss rates?

Research-components/open-issues in this project:

- The primary issue in this project is that of collecting Internet operational data and studying the correlation between AB, delay, and losses, as a function of several factors---including the type of Internet application, the type of network topology traversed, the time of day, as well as the user population.

- Research would also need to be conducted on how the above-mentioned correlation changes with increases in link capacity, traffic aggregation, and traffic load. This issue will be pursued using both simulations and a lab testbed.

Previous COMP 991 Offerings:


Design congestion-control mechanisms that rely on recently-developed AB estimation techniques:

This project was started as COMP 991 research in Fall 2007. It has been fairly successful: it has produced a very interesting new congestion-control protocol, which is currently being submitted to a leading conference.

The design of congestion control mechanisms for TCP-like transport protocols is an active area of research, especially for the evolving high-speed networks of tomorrow. A key challenge faced by researchers is balancing the tradeoff between scalability (to high network speeds) and "friendliness" to existing Internet traffic. Several window-based protocols have been developed and implemented that attempt to quickly converge on the correct window while being "nice" to regular network traffic. The result often retains traces of legacy TCP mechanisms, which have been hacked, twisted, and tuned to achieve better scalability while preserving the friendliness of regular TCP.

In this research project, we take the alternate view that a traditional window-based transport protocol is particularly unsuitable for high-speed regimes, largely due to its bursty behavior. Instead, we believe that recently developed lightweight mechanisms for quickly and reliably probing the end-to-end available bandwidth can be leveraged to design rate-based protocols that quickly converge on the right sending rate.
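The contrast between window-based and rate-based transmission can be sketched in a few lines. The hypothetical pacer below (its class and method names are ours, not those of the protocol developed in this project) releases packets at evenly spaced times so that the long-run sending rate matches a target rate, such as an AB estimate supplied by some other component. A window-based sender would instead release up to a full window of packets back-to-back as ACKs arrive, which is the burstiness described above.

```python
class RatePacer:
    """Spaces packets evenly so the long-run sending rate equals `rate_bps`.

    Hypothetical helper for illustration: the target rate (e.g. an
    available-bandwidth estimate) is assumed to be supplied externally.
    """

    def __init__(self, rate_bps: float, start: float = 0.0) -> None:
        self.set_rate(rate_bps)
        self.next_time = start  # earliest time the next packet may leave

    def set_rate(self, rate_bps: float) -> None:
        if rate_bps <= 0:
            raise ValueError("rate must be positive")
        self.rate_bps = rate_bps

    def schedule(self, packet_bytes: int, now: float) -> float:
        """Return the send time for a packet that is ready at time `now`."""
        send_at = max(now, self.next_time)
        # The next packet may leave once this one's share of the rate has elapsed.
        self.next_time = send_at + packet_bytes * 8 / self.rate_bps
        return send_at


if __name__ == "__main__":
    pacer = RatePacer(rate_bps=120e6)  # illustrative 120 Mbit/s target
    send_times = [pacer.schedule(1500, now=0.0) for _ in range(4)]
    print([f"{t * 1e6:.0f} us" for t in send_times])
```

At 120 Mbit/s, 1500-byte packets are spaced 100 µs apart even when all four are ready at once. Calling `set_rate` whenever a new AB estimate arrives would let the sending rate track the estimate without emitting bursts.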

Research-components/open-issues in this project:

- The primary issue to be addressed in this project is figuring out how to design in-band AB estimation logic and mechanisms for a transport protocol; the primary criteria are that the estimation be lightweight, quick, and non-intrusive.

- A second issue that needs to be resolved is how data transmission should be implemented once the AB is estimated. Specifically, should a window-based transmission be used (which could be bursty and cause short-timescale congestion), should a paced-window transmission be used (which could be somewhat tricky to implement), or should a rate-based transmission be used instead?

- Another key open issue that is faced by any new transport protocol, though, is that of "survival": given the aggressive probing nature of TCP, can such mechanisms fare well? Wouldn't a (high-speed) TCP connection keep pushing such connections out of the bottleneck link? In such a case, how do you design congestion-avoidance mechanisms that ensure that such connections survive, yet do not push the "other" TCP connections out?
