Proposal slides - September 29, 2003

This project is an extension of the work done by Max Garber and Ming Lin in [1,2]. In those papers, they present a framework for motion planning of rigid and articulated bodies using a constrained dynamics [3] approach. I would like to extend this idea for use with multiple agents, particularly in conjunction with a higher-level behavioral model.

In [1,2], the motion of each rigid robot is influenced by virtual forces induced by geometric and other constraints. For articulated robots, joint connectivity and angle limits can be enforced by these constraints, and spatial relationships can be enforced between multiple robots. Constraints are divided into two major categories: hard constraints and soft constraints. Hard constraints must be satisfied at every time step in the simulation. Examples of hard constraints are non-penetration, articulated robot joint connectivity, and articulated robot joint angle limits. Soft constraints are used to encourage robots to behave in a certain way or proceed in certain directions. Examples of soft constraints are goal attraction, surface repulsion, and path following. The technique presented is used in dynamic environments with moving obstacles and is applicable to complex scenarios.
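To make the hard/soft distinction concrete, here is a minimal 2D sketch for a single point robot. It is not the solver from [1,2]: it assumes circular obstacles, moves the robot with velocity proportional to the summed soft forces rather than integrating full constrained dynamics, and enforces non-penetration by simple projection. All function names, gains, and the `influence` distance are hypothetical.

```python
import math

def goal_attraction(pos, goal, gain=1.0):
    """Soft constraint: a virtual force pulling the robot toward the goal."""
    return (gain * (goal[0] - pos[0]), gain * (goal[1] - pos[1]))

def surface_repulsion(pos, center, radius, influence=1.0, gain=1.0):
    """Soft constraint: push away from a circular obstacle (assumed shape)
    when the robot is within `influence` of its surface."""
    dx, dy = pos[0] - center[0], pos[1] - center[1]
    dist = math.hypot(dx, dy)
    gap = dist - radius
    if gap >= influence or dist == 0.0:
        return (0.0, 0.0)
    strength = gain * (influence - gap) / influence
    return (strength * dx / dist, strength * dy / dist)

def step(pos, goal, obstacles, dt=0.1):
    """Sum the soft forces, take one first-order step, then enforce the
    hard non-penetration constraint by projecting out of any obstacle."""
    fx, fy = goal_attraction(pos, goal)
    for center, radius in obstacles:
        rx, ry = surface_repulsion(pos, center, radius)
        fx, fy = fx + rx, fy + ry
    x, y = pos[0] + dt * fx, pos[1] + dt * fy
    for center, radius in obstacles:
        dx, dy = x - center[0], y - center[1]
        dist = math.hypot(dx, dy)
        if dist < radius:
            if dist == 0.0:  # degenerate case: pick an arbitrary direction
                dx, dy, dist = 1.0, 0.0, 1.0
            x = center[0] + radius * dx / dist
            y = center[1] + radius * dy / dist
    return (x, y)

# Example: a robot heading for a goal directly behind an obstacle.
pos = (0.0, 0.0)
for _ in range(200):
    pos = step(pos, (2.0, 0.0), [((1.0, 0.0), 0.5)])
```

In this example the soft forces may pull the robot against the obstacle, but the hard constraint is still satisfied after every step. The sequential projection is only adequate for well-separated obstacles.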

In addition to constraint-based motion planning, this project draws on ideas from behavioral modeling research (e.g., Craig Reynolds' original flocking paper [4]), in particular the generation of real-time dynamic autonomous agents. Much work has been done in this area. As an example, Siome Goldenstein and colleagues have presented a scalable methodology for agent modeling based on nonlinear dynamical systems and kinetic data structures [5,6]. They use a three-layer approach: a local layer, a global environment layer, and a global planning layer. The local layer models low-level behaviors using nonlinear dynamical systems theory. The global environment layer efficiently tracks each agent's immediate environment, providing information about nearby obstacles and agents to support behavioral decision-making. The global planning layer implements target tracking and navigation through an environment while avoiding local minima.

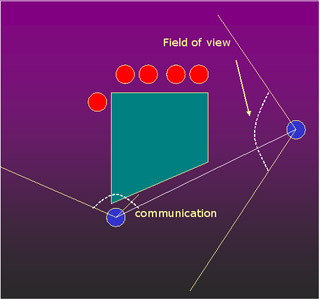

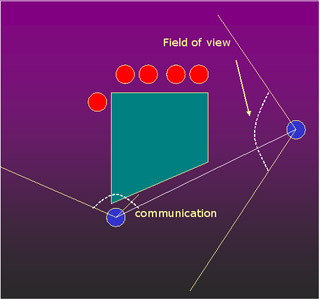

The purpose of this project is to extend the constraint-based motion planning system in [1,2] to support additional constraints that a multi-agent system might require, such as line-of-sight constraints. In addition, these multi-agent systems will be controlled by a behavioral level that incorporates information sharing between agents. One can imagine many situations in which multiple agents would have to interact with each other in order to accomplish a specified task. I am considering scenarios in which agent cooperation is not required but is of great benefit to all agents, such as a military or capture-the-flag type situation. In such cases, information shared between agents can be very beneficial. As a simple example, the figure below shows two opposing teams.

The left blue agent, when peeking around the corner, can see one red agent, but knows nothing about the other four red agents waiting around the other side of the central barrier. The right blue agent, on the other hand, knows that there are four more red agents there. If the two blue agents have line of sight and can communicate (assuming a simple case where other types of communication are not possible), then this information can be combined to give both agents an effectively larger view of the environment.
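The exchange described above can be sketched in a few lines, assuming a 2D world in which walls are line segments and each agent carries a set of enemy identifiers it has seen. The function names, the coordinates, and the single-round exchange (no relaying through a third agent) are illustrative assumptions, not part of the proposed system.

```python
def _orient(a, b, c):
    """Signed area test: > 0 if c lies to the left of the line a -> b."""
    return (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])

def segments_cross(p1, p2, q1, q2):
    """True if segment p1-p2 properly crosses q1-q2.
    Touching endpoints and collinear overlaps are ignored in this sketch."""
    d1 = _orient(q1, q2, p1)
    d2 = _orient(q1, q2, p2)
    d3 = _orient(p1, p2, q1)
    d4 = _orient(p1, p2, q2)
    return d1 * d2 < 0 and d3 * d4 < 0

def has_line_of_sight(a, b, walls):
    """Two points see each other if no wall segment crosses the line between them."""
    return not any(segments_cross(a, b, w0, w1) for w0, w1 in walls)

def share_views(agents, walls):
    """agents maps a name to (position, set of enemy ids seen).
    Returns each agent's knowledge after one round of exchange with
    every teammate in direct line of sight."""
    merged = {name: set(seen) for name, (_, seen) in agents.items()}
    for a, (pos_a, _) in agents.items():
        for b, (pos_b, seen_b) in agents.items():
            if a != b and has_line_of_sight(pos_a, pos_b, walls):
                merged[a] |= seen_b
    return merged

# Made-up layout: two blue agents on either side of a central barrier
# that does not block the straight line between them.
blue = {
    "left": ((0.0, 0.0), {"red1"}),
    "right": ((10.0, 0.0), {"red2", "red3", "red4", "red5"}),
}
barrier = [((5.0, 1.0), (5.0, 5.0))]
knowledge = share_views(blue, barrier)
```

With this layout both blue agents end up knowing about all five red agents. If the barrier is moved so that it crosses the line between them, each agent keeps only its own observations.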

In an ideal situation, a team would like to know as much about its environment as possible in order to generate a viable plan of action. This implies that the agents should spread out across the environment such that their combined view will cover as much of the environment as possible. However, there are also situations in which the agents may need to hide due to immediate danger, such as entering the line of sight of an enemy. I hope to take these high-level behaviors and map them into constraints in a situation in which motion planning must be driven by more than just reaching an end goal. I would also like to look at what can be done in the case of having incomplete information about where the obstacles (enemies) are, or information that becomes invalid over time (e.g. if an agent looks at one area and sees an enemy and then turns away, the potential location of the enemy over time becomes less and less certain).
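One possible way to represent information that goes stale is sketched below. It assumes an exponential decay of confidence with a tunable half-life and a region of possible enemy positions that grows with an assumed maximum enemy speed. Neither model is fixed by this proposal, and the class and parameter names are hypothetical.

```python
class EnemySighting:
    """A remembered observation of an enemy (hypothetical structure)."""

    def __init__(self, position, time_seen, half_life=5.0, max_speed=1.0):
        self.position = position      # where the enemy was last seen
        self.time_seen = time_seen    # simulation time of the sighting
        self.half_life = half_life    # assumed: time for confidence to halve
        self.max_speed = max_speed    # assumed: upper bound on enemy speed

    def _age(self, now):
        return max(0.0, now - self.time_seen)

    def confidence(self, now):
        """Confidence in the sighting: 1.0 when fresh, halving every half_life."""
        return 0.5 ** (self._age(now) / self.half_life)

    def uncertainty_radius(self, now):
        """Radius of the disc around the last known position that the
        enemy could have reached since it was last seen."""
        return self.max_speed * self._age(now)

# An enemy seen at t = 10 and queried again at t = 15.
sighting = EnemySighting(position=(3.0, 4.0), time_seen=10.0)
stale_confidence = sighting.confidence(15.0)
search_radius = sighting.uncertainty_radius(15.0)
```

A behavioral layer could use the confidence to weight a repulsion or hiding constraint, and the radius to decide which region needs to be observed again.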

[1] Garber, M. and Lin, M. Constraint-Based Motion Planning using Voronoi Diagrams. Proc. Fifth International Workshop on Algorithmic Foundations of Robotics (WAFR), 2002.

[2] Garber, M. and Lin, M. Constraint-Based Motion Planning for Virtual Prototyping. Proc. ACM Symposium on Solid Modeling and Applications, 2002.

[3] Witkin, A. and Baraff, D. Physically Based Modeling: Principles and Practice. ACM Press, 1997. Course Notes of ACM SIGGRAPH.

[4] Reynolds, C. W. Flocks, Herds, and Schools: A Distributed Behavioral Model. Computer Graphics, 21(4): 25-34, 1987.

[5] Goldenstein, S., Large, E., and Metaxas, D. Dynamic Autonomous Agents: Game Applications. Computer Animation, 1998.

[6] Goldenstein, S., Karavelas, M., Metaxas, D., Guibas, L., Aaron, E., and Goswami, A. Scalable nonlinear dynamical systems for agent steering and crowd simulation. Computers & Graphics, 25(6): 983-998, 2001.

[7] Stout, B. Smart move: Path-finding. Game Developer, Oct. 1996.

[8] Vincke, S. Real-time pathfinding for multiple objects. Game Developer, June 1997.

[9] Pottinger, D. Coordinated unit movement. Game Developer, Jan. 1999.