In this assignment, we had to write a program that automatically computes the fundamental matrix for a pair of images. The algorithm has several steps, which I will illustrate with a series of images showing each stage of the computation. To test my program, I used three image pairs. The street scene is from INRIA Sophia Antipolis, and the corridor and building scenes are from the Oxford Visual Geometry Group.

In all tests, I used a 15x15 feature window and a 101x101 search region for finding putative matches. The distance threshold was set to 1.5 pixels for both RANSAC and guided matching.

I will first demonstrate the procedure using the street scene shown below.

The first step is to find a set of interest points in each image using a corner detector. These interest points are shown in the images below. 456 features were found in each image.
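The write-up does not say which corner detector was used. As a rough illustration only, here is a minimal pure-Python sketch of the Harris corner response, which is one common choice. The function names, the box-shaped summation window, and the constant `k = 0.04` are my assumptions, not details taken from the assignment code.

```python
def harris_response(img, k=0.04, radius=1):
    """Harris corner response for a grayscale image given as a list of rows.

    Assumed detector for illustration; the original program may differ.
    """
    h, w = len(img), len(img[0])
    # Central-difference gradients (left at zero on the image border).
    ix = [[0.0] * w for _ in range(h)]
    iy = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            ix[y][x] = (img[y][x + 1] - img[y][x - 1]) / 2.0
            iy[y][x] = (img[y + 1][x] - img[y - 1][x]) / 2.0

    resp = [[0.0] * w for _ in range(h)]
    for y in range(radius, h - radius):
        for x in range(radius, w - radius):
            # Sum the structure tensor over a (2*radius+1)^2 box window.
            sxx = sxy = syy = 0.0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    gx, gy = ix[y + dy][x + dx], iy[y + dy][x + dx]
                    sxx += gx * gx
                    sxy += gx * gy
                    syy += gy * gy
            det = sxx * syy - sxy * sxy
            trace = sxx + syy
            # Large positive values indicate corners, negative values edges.
            resp[y][x] = det - k * trace * trace
    return resp


def strongest_corner(resp):
    """Return (x, y) of the pixel with the largest response."""
    best = max((v, x, y) for y, row in enumerate(resp) for x, v in enumerate(row))
    return best[1], best[2]
```

A real detector would also apply a response threshold and non-maximum suppression to obtain a set of interest points rather than a single maximum.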

Next, we need to find a set of putative matches between the feature points of the two images. A pair of features is a candidate match when the two points lie close together and their intensity neighborhoods are similar. The putative matches that were found are shown below in each image. 214 putative correspondences were found.
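The matching rule can be sketched as follows. This is a simplified illustration, not the assignment code: it assumes that similarity is measured by the sum of squared differences (SSD) between intensity windows, and it keeps the single best candidate inside the search region with no further checks. The defaults `half_win=7` and `half_search=50` correspond to the 15x15 feature window and 101x101 search region mentioned earlier; all function names are hypothetical.

```python
def window(img, x, y, half):
    """Flattened (2*half+1)^2 intensity window centred on (x, y), or None near the border."""
    h, w = len(img), len(img[0])
    if x - half < 0 or y - half < 0 or x + half >= w or y + half >= h:
        return None
    return [img[r][c]
            for r in range(y - half, y + half + 1)
            for c in range(x - half, x + half + 1)]


def ssd(a, b):
    """Sum of squared differences between two flattened windows (assumed similarity measure)."""
    return sum((p - q) ** 2 for p, q in zip(a, b))


def putative_matches(img1, pts1, img2, pts2, half_win=7, half_search=50):
    """Pair each feature in pts1 with the most similar nearby feature in pts2.

    Returns a list of (index into pts1, index into pts2).
    """
    matches = []
    for i, (x1, y1) in enumerate(pts1):
        w1 = window(img1, x1, y1, half_win)
        if w1 is None:
            continue
        best = None
        for j, (x2, y2) in enumerate(pts2):
            # Proximity: the candidate must lie inside the search region around (x1, y1).
            if abs(x2 - x1) > half_search or abs(y2 - y1) > half_search:
                continue
            w2 = window(img2, x2, y2, half_win)
            if w2 is None:
                continue
            score = ssd(w1, w2)
            if best is None or score < best[0]:
                best = (score, j)
        if best is not None:
            matches.append((i, best[1]))
    return matches
```

Because this sketch accepts the best candidate however poor its score, it can produce wrong matches; rejecting those is the job of the RANSAC step.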

Once we have a set of putative correspondences between feature points, we can start to compute the fundamental matrix. The first step in this process is to run the RANSAC algorithm, which repeatedly selects a random sample of 7 correspondences to compute the fundamental matrix. The result of this algorithm is the fundamental matrix F that has the largest number of inliers. The 194 inliers that resulted after 6 iterations are displayed below. At the end of this step, the Sampson error per pixel was 0.293238, and the residual error was 1.302671.
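Two pieces of the RANSAC loop are easy to show in isolation: scoring a candidate F by counting inliers, and deciding how many random samples to draw. The sketch below is an illustration under stated assumptions rather than the assignment code. It assumes the inlier test compares the squared Sampson distance against the squared 1.5-pixel threshold, and that the number of samples follows the standard formula N = log(1 - p) / log(1 - w^s) for inlier ratio w, sample size s = 7, and confidence p. The source does not confirm either choice, and the function names are hypothetical.

```python
import math


def mat_vec(m, v):
    """Multiply a 3x3 matrix (list of rows) by a 3-vector."""
    return [sum(m[r][c] * v[c] for c in range(3)) for r in range(3)]


def transpose(m):
    return [[m[c][r] for c in range(3)] for r in range(3)]


def sampson_sq(F, p1, p2):
    """Squared Sampson distance of the correspondence p1 <-> p2 (pixel coordinates)."""
    x1 = [p1[0], p1[1], 1.0]
    x2 = [p2[0], p2[1], 1.0]
    Fx1 = mat_vec(F, x1)
    Ftx2 = mat_vec(transpose(F), x2)
    num = sum(a * b for a, b in zip(x2, Fx1)) ** 2   # (x2^T F x1)^2
    den = Fx1[0] ** 2 + Fx1[1] ** 2 + Ftx2[0] ** 2 + Ftx2[1] ** 2
    return num / den if den > 0 else math.inf


def count_inliers(F, matches, threshold=1.5):
    """Number of correspondences whose Sampson distance is within the threshold (assumed test)."""
    return sum(1 for p1, p2 in matches if sampson_sq(F, p1, p2) <= threshold ** 2)


def samples_needed(inlier_ratio, sample_size=7, confidence=0.99):
    """Samples required so that, with the given confidence, one sample is outlier-free."""
    if inlier_ratio <= 0.0:
        return math.inf
    p_all_inliers = inlier_ratio ** sample_size
    if p_all_inliers >= 1.0:
        return 1
    # log1p keeps the denominator accurate when p_all_inliers is tiny.
    return math.ceil(math.log(1.0 - confidence) / math.log1p(-p_all_inliers))
```

With this formula the required number of samples drops quickly as the inlier ratio rises, so a loop that re-estimates the ratio after each improvement can stop after only a few samples when most putative matches are correct.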

Now that we have an initial estimate of the fundamental matrix F, we can refine it with a non-linear iterative algorithm and guided matching. The iterative algorithm adjusts the parameters of F to minimize the error (I am using the Sampson approximation to geometric error). Guided matching finds more matches by restricting the search window for each feature point to lie along its epipolar line. After running the results from the previous step through this part of the algorithm (2 iterations), the Sampson error per pixel was 0.140039, and the residual error was 0.028275. The following 207 matches were obtained:

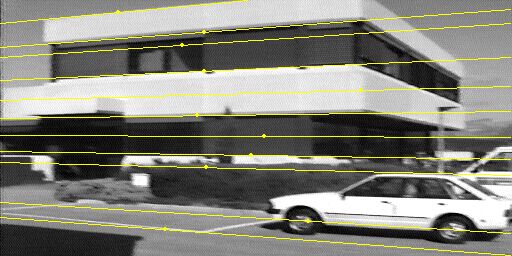

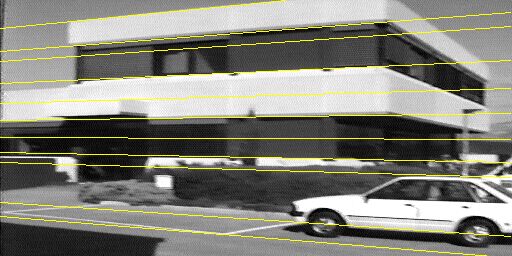
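The search restriction used by guided matching can be sketched as a point-to-line distance test. For a feature x in the first image, the epipolar line in the second image is l' = F x, and only features within the distance threshold of that line are considered as candidates. The code below is an illustration with hypothetical function names, not the assignment code. It covers only the candidate filter; choosing among the candidates by intensity similarity is left out.

```python
import math


def epipolar_line(F, p):
    """Line l' = F x in the second image for point p = (x, y) in the first image."""
    x = (p[0], p[1], 1.0)
    return tuple(sum(F[r][c] * x[c] for c in range(3)) for r in range(3))


def point_line_distance(line, p):
    """Perpendicular distance in pixels from p to the line a*x + b*y + c = 0."""
    a, b, c = line
    norm = math.hypot(a, b)
    if norm == 0.0:
        return math.inf  # degenerate line, e.g. when p is the epipole
    return abs(a * p[0] + b * p[1] + c) / norm


def candidates_near_epipolar_line(F, p1, pts2, max_dist=1.5):
    """Indices of features in pts2 lying within max_dist pixels of the epipolar line of p1."""
    line = epipolar_line(F, p1)
    return [j for j, q in enumerate(pts2) if point_line_distance(line, q) <= max_dist]
```

For example, with an F that describes a purely horizontal camera shift, the candidates for a feature at height y = 20 are exactly the features whose height is within 1.5 pixels of 20.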

Note that some of the matches are still clearly wrong (in particular the long lines that extend almost all the way across the image), but the overall error has been brought down considerably. Now that we have an F matrix, we can take a look at a few epipolar lines:
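To draw an epipolar line, it helps to know where it enters and leaves the image. The sketch below computes the line l' = F x for a point in the first image and clips it to the image rectangle. It is only an illustration: the function names and the convention that pixel coordinates run from 0 to width - 1 and 0 to height - 1 are my assumptions.

```python
def epipolar_line_in_second_image(F, p):
    """Coefficients (a, b, c) of the line a*x + b*y + c = 0 given by l' = F x."""
    x = (p[0], p[1], 1.0)
    return tuple(sum(F[r][c] * x[c] for c in range(3)) for r in range(3))


def line_endpoints(line, width, height):
    """Two points where the line crosses the image border, or [] if it misses the image."""
    a, b, c = line
    pts = []
    if b != 0:
        # Intersections with the left and right borders.
        for x in (0.0, width - 1.0):
            y = -(a * x + c) / b
            if 0 <= y <= height - 1:
                pts.append((x, y))
    if a != 0:
        # Intersections with the top and bottom borders.
        for y in (0.0, height - 1.0):
            x = -(b * y + c) / a
            if 0 <= x <= width - 1:
                pts.append((x, y))
    unique = sorted(set(pts))
    if len(unique) < 2:
        return []
    # The first and last points in sorted order are the two extremes along the line.
    return [unique[0], unique[-1]]
```

The two endpoints can then be passed to any line-drawing routine.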

Here are some more test images. This is the corridor scene.

Feature points:

Putative correspondences (299 found):

After 7 iterations of RANSAC (283 inliers, Sampson error per pixel = 0.295301, residual error = 0.206738):

After 3 iterations of non-linear estimation and guided matching (299 matches, Sampson error per pixel = 0.127922, residual error = 0.049280):

A few epipolar lines:

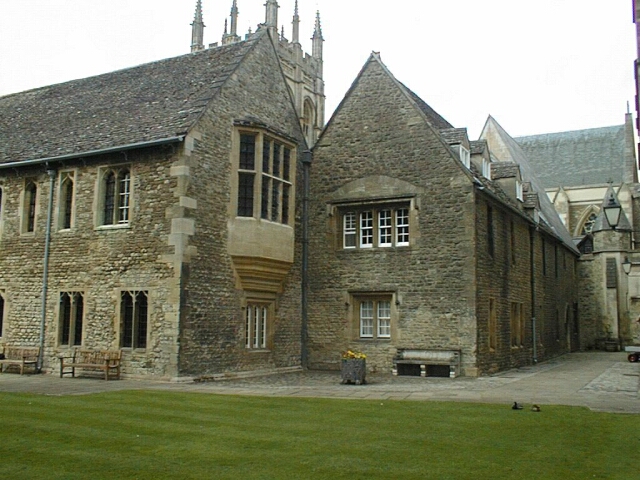

This is the building scene.

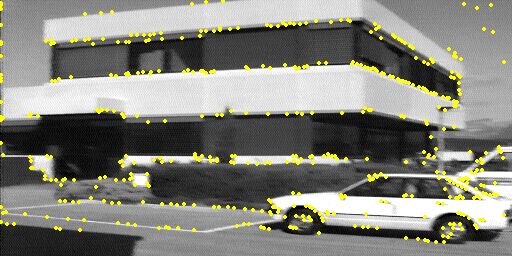

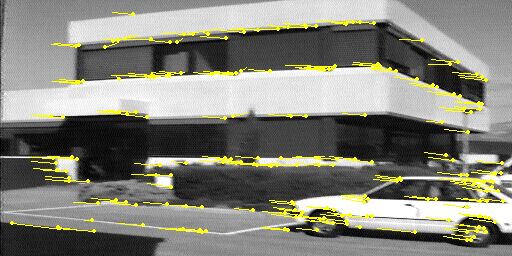

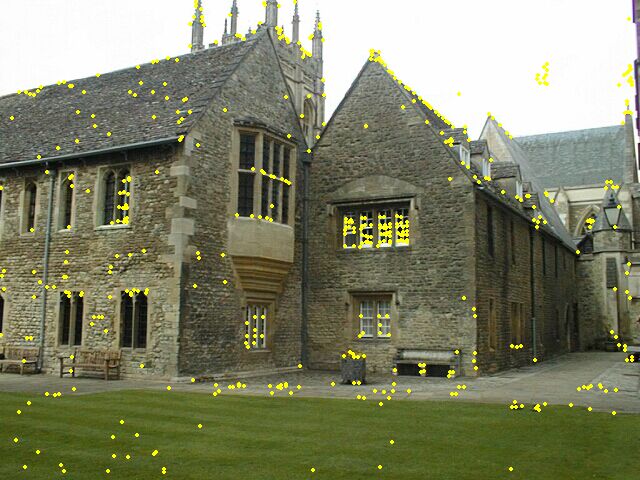

Feature points:

Putative correspondences (68 found):

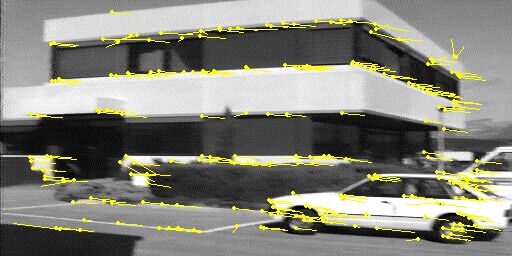

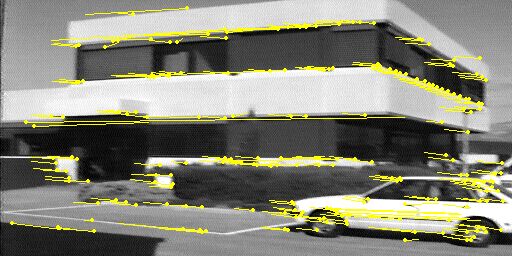

After 37 iterations of RANSAC (50 inliers, Sampson error per pixel = 0.293039, residual error = 0.326195):

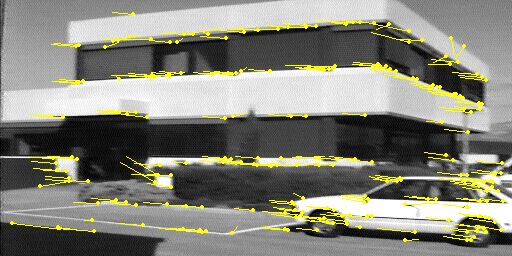

After 4 iterations of non-linear estimation and guided matching (71 matches, Sampson error per pixel = 0.210598, residual error = 0.001056):

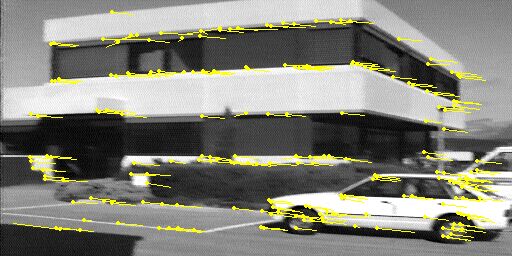

A few epipolar lines:

The code can be downloaded here.