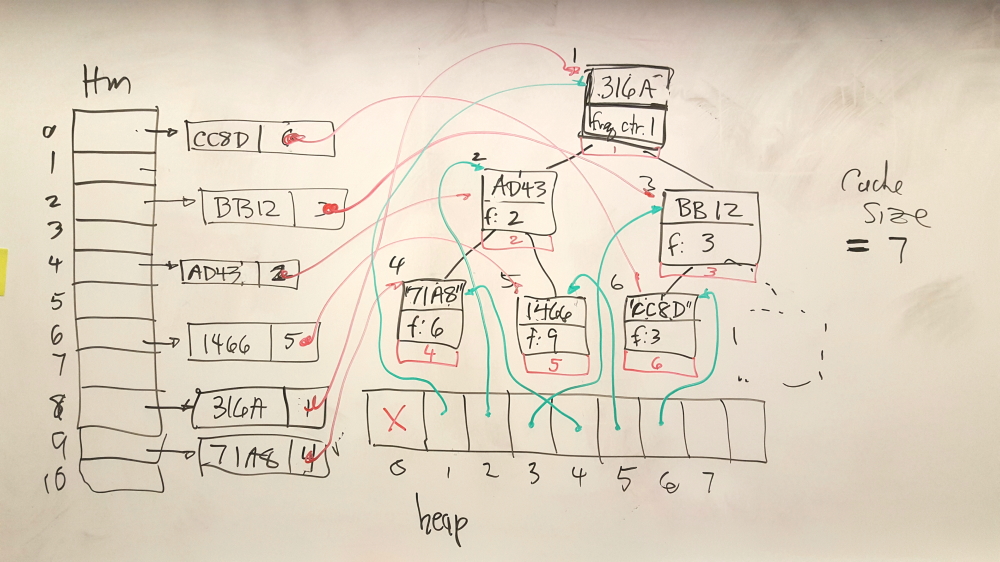

You are going to build in Java a Binary Heap (BHEAP). Specifically, you will code a minimum binary heap (BHEAP) with the numerically smallest priority number at the root of the tree. We will a couple of the heap operations efficient (faster than O(N)) with the help of a hashmap. Let's call this ADT a "HashHeap". This is a data structure that would support, for example, a Least Frequently Used caching policy in various operating system functions (like caching pages from virtual memory).

In the text (and class examples) the heaps have contained an integer (the priority). This is for clarity of the examples... and the array containing the heap elements was array of integer. In this example, on order to allow hashing access to the heap elements, we will have the heap array contain pointers to dynamically allocated cells. One of the fields in a cell will be a priority number, to be used to structure the heap. The other field(s) in a cell object will be data for the application (cache pages, for example). For this assignment, the particular data is not relevant, so we use a structure similar to the cells from the previous two assignments. See the code template for details.

Caching is a well proven strategy for speeding up many computing tasks. When data is stored in some slower stoage medium (like a disk or the cloud), we can retrieve data from that slow medium the first time we need it, and then save it into some faster memory (like on-chip RAM) to use for subsequent accesses. From time to time altered data needs to be written from the cache back to the slower storage. When the cache fills (it has limited size) and we need to retrieve more information from slow storage, we must decide what part of the cache to send back to make room for the newly retrieved data.

There are many strategies for replacing portions of a cache when we need to. Least Recently Used (LRU) is one common approace... choose the cache frame that was used the farthest back in time and replace it with the new page data. This recognizes "locality", that when we reference variables we often re-reference them in a near time frame. The reasoning behind LRU is that we find the cache frame that is inactive the longest, assuming that the timeframe in which the code using the data in that frame is most likely to have passed. A slighty altered FIFO Queue will keep track of this for us. When a cache frame is referenced, we find it in the queue, and move it to the head of the queue. When we need to replace a cache frame, we take out the one at the end of the queue.

Another replacement strategy is called Least Frequently Used (LFU). Here, we find the cache frame that has not been referenced very many times and replace that one. Cache frames that are referenced heavily will stay in the cache. To do this we have to keep a counter on each cache frame, and bump up that counter each time the program uses memory in the cache. We can use a minimum binary heap to efficiently decide which cache frame has the smallest use counter (and so has been referenced least frequently) when we need to replace a frame. This is the sort of thing we are supporting in this assignment.

You can think of your "cache" in this assignment as a collection of cells that are dynamically allocated in your program. The cells contain some representation of the memory data and some supporting information to make the cache and LFU policy work. The memory data is not important to use here, so we will simply use a hexadecimal string (representing a memory address... such as perhaps the beginning address of a memory block) as the entire data field. Then we have two other data structures containing pointers to these cells: a MinBinHeap where the cell pointers are stored in the heap array; and a HashMap that pairs up the hexadecimal "address" for a cell with a pointer to the cell for that address. As such, the HashMap will use String as key, and cell object as value. We will use the HashMap from the Java collections library, but you will write your own minimum binary heap code as part of this assignment (following the template below).

The Cache_LFU then will then be an object containing both a HashMap and a MinBinHeap (MBH). It will have an interface of operations that it responds to, the main one being "refer". The refer operation takes a String parameter, the address of a memoory block needed by the application code using the cache. For example, consider the call

cache.refer("AA88");

This indicates the page for address AA88 is needed from the cache, and several things

might be the case:

-- the frame might be in the cache, so it can be used as is and we

must bump up the frequency counter by 1 to reflect the new access to that frame

-- the frame might NOT be in the cache, so it must be added to the cache and

its frequency counter set to 1 to record this first access.

Since we need to add a frame to the cache, we have 2 posibilities:

-- if the cache is not full, there is room to just create a new frame, add

it to the cache and record the frequency counter as 1

-- if the cache IS full, then we must take something out to make room for

the new frame; we do this by asking the heap which frame is least frequently

used and remove that one; we then have room to make the new frame with its

frequency counter set to 1

What does it mean to "add a frame to the cache" ?

There are several steps. First, we make a new cache frame object and

set its fields to hold the String address and the frequency counter 1.

We then must "put" an entry for the (address, frame object) pair into the HashMap.

This gives us O(1) accress to the frame object if we know the address String.

Then we have to put that frame object into the MnBinHeap as well... and with the

frequency count field being used as the priority to guide where the cell ends

up in the heap array. Note that since the frequency counter is 1 when we add

a new frame to the heap, it will percolate up towards the root of the heap tree...

towards slot 1 in the heap array.

Other frames that have been referened more will be lower down in the heap.

Now, what happens when we "refer" to an address and the frame is already in the cache? We know a frame is in the cache, because we do a "get" on the HashMap and find an entry for the (address, frame object) there. In this case, there is no need to make any new frames, but we do need to record that we have another reference to the frame as the address. So we follow the pointer to the frame object and increase it frequency count field by 1. This means its no longer (possibly) in the proper place in the heap array. So we also mush do an heap.incElt(frame_object) operation on the heap and move the frame object to its new proper location.

So, here is how the HashMap helps. We do not have to do a O(N) search throught the heap array to find where the frame object parameter is located (at what array subscript). Rather we have the pointer to the frame object from the HashMap (a O(1) "search") and we have a field in the frame object that tells which array subscript is lives at in the heap array. So we can directly addresss the heap array at that element, and do the bubbling down required by the incElt operation.

Note that this means we must keep the "slot" field in the frame object updated properly whenever we move a cell around in the heap array.

Keeping the "slot" field of each frame object updated is a cheap (small constant) amount of work every time we swap array elements. We are happy to do that work to change the element search on incElt from O(N) to O(1). We win!

Think on the incElt operation. In order to bump up the priority of some item in the MBH we first need to find the item we are interested in. In the array representation of the MBH, this means searching every item in the array, from slot 0 up. This means in worst case, O(N) for N items in the MBH.

Using a HashMap to save pointers to all cells in the MBH means we can cut the cost of the incElt to O(log N). Here's how: we must rearrange the MBH because some cache frame is being referenced, and so the frequency counter (priority) must be bumped up. We have the address of the cache frame (or some unique ID for it, here a hex string) so we access the HashMap and get back a pointer to the cell in the MBH in O(1). Once we have that pointer we have the subscript where we can find it in the MBH array in O(1).

We go there via array access, and do the increase-key operation on the cell ( O(log N) , including increment priority field). As we move cells around in the MBH array, we must remember to update the subscript information in those cells telling where they live in the MBH array.

The payoff: We will need this sort of HashMap support to make graph manupulation algorithm perform efficiently when we build a graph data structure in Assn 5. We will need to find components of a graph in O(1) time instead of searching through a list of N elements. In this HashHeap assignment, the HashMap helps us keep a O(N) operation down to O(log N)... which is good to do, but not a killer if we have to do the O(N) search instead. In the graph applications, the HashMap will help up have O(N) and O(N log N) operations instead of O(N^2) or worse. This then becomes crucial rather than just nice.

Hand in your code as per the instructions under

Assignment 4 in Sakai.

For uniformity, to aid grading, you must write your program with these specific structures.

package assignment4_f20;

public interface Heap {

/*

Interface: The Heap will provide this collection of operations:

insert

in: a CacheFrame object, containing String key (address),

the priority (frequency counter) and the array subscript (slot).

We may have duplicate priorities being inserted

return: void

effect: the heap size goes up one

delMin

in: nothing

return: nothing

effect: the heap size goes down one, the element at the root is removed

getMin

in: nothing

return: a CacheFrame object (the one in slot 1 on the array)

effect: no change in heap state

size

in: nothing

return: integer 0 or greater, the count of the number of elements in the heap

effect: no change in heap state

incELt

in: a CacheFrame object

return: nothing

effect: frequency counter (priority) in the CacheFrame object is incremented by 1

heap state is altered by perhaps the indicated element being bubbled down

also, slot numbers in moved heap elements change to reflect new locations

decElt

in: a CacheFrame object

return: nothing

effect: frequency counter (priority) in the CacheFrame object is decreased by 1

heap state is altered by perhaps the indicated element being bubbled up

also, slot numbers in moved heap elements change to reflect new locations

error: do no frequency change is the CacheFrame object already has priority 1

(all priorities must be 1 or greater).

*/

// ADT operations

void insert(CacheFrame elt);

void delMin();

CacheFrame getMin();

int size();

void incElt( CacheFrame elt );

void decElt( CacheFrame elt );

// not really an abstract op of a heap but

// we need it for grading this assignment

CacheFrame[] getHeap();

}

package assignment4_f20;

import java.util.HashMap;

public interface Cache {

/*

Interface: The Cache will provide this collection of operations:

size:

in: nothing

return: an integer, the maximum number of frames the cache will hold

effect: no cache state change

numElts:

in: nothing

return: an integer, the number of frames currently stored in the cache

effect: no cache state change

isFull:

in: nothing

return: a boolean, telling is the number of items in the cache is the same as the max limit

effect: no cache state change

refer:

in: String, a hexadecimal value as a string

assume this op will always be sent a string the looks like hex

no need to check or verify

return: boolean, telling true (we found the hex address in the cache)

or false (we did not find the address in cache)

effect: cache state changes by possibly having a frame removed (if it was full)

and by having a new frame added if the address is not in the cache.

if the address IS in the cache, then all that changes in the frequency counter

for that frame, which goes up one.

Also the number of frames in the cache goes up one if we add a frame

*/

// ADT operations

public int size();

public int numElts();

public boolean isFull();

public boolean refer( String address );

// not really ops of an abstract cache but

// we need this for grading this assignment

public MinBinHeap getHeap();

public HashMap getHashMap();

}

package assignment4_f20;

public interface Frame {

String getValue(); // will be a hexadecimal "address" of a memory block

// there is no setValue as the address will not change once the frame is made

int getPriority(); // will be a frequency counter, starts at 1

void setPriority(int p);

int getSlot();

void setSlot(int s);

}

package assignment4_f20;

public class CacheFrame implements Frame {

public String value; // a hex string for an address block

public int priority; // a fequency counter, used as priority

private int slot; // the subscript where this element is in the heap array

public CacheFrame(String av, int ap) {

this.value = av;

this.priority = ap;

this.slot = 0; // this will change as the array is manipulated

}

public String getValue() { return this.value; }

// no setValue... value will not change once set in constructor

public int getPriority() { return this.priority; }

public void setPriority(int p) { this.priority = p; }

public int getSlot() { return this.slot; }

public void setSlot(int s) { this.slot = s; }

@Override

public String toString() {

return "(val: " + value + ", pri:" + priority + ", slot: " + slot + ")";

}

}

package assignment4_f20;

public class MinBinHeap implements Heap {

private CacheFrame[] array; // load this array

private int size; // how many items currently in the heap

private int arraySize; // Everything in the array will initially

// be null. This is ok! Just build out

// from array[1]

public MinBinHeap(int nelts) {

this.array = new CacheFrame[nelts+1]; // remember we dont use slot 0

this.arraySize = nelts+1;

this.size = 0;

this.array[0] = new CacheFrame(null, 0); // 0 not used, so this is arbitrary

}

// Please do not remove or modify this method! Used to test your entire Heap.

@Override

public CacheFrame[] getHeap() { return this.array; }

//===============================================================

//

// here down you implement the ops in the interface

//

//===============================================================

}

package assignment4_f20;

import java.util.HashMap;

public class Cache_LFU implements Cache {

HashMap<String, CacheFrame> map;

// allocate from java collections lib

// do it this way so we all start with default size and

// default lambda and default hash function for string keys

MinBinHeap heap; // your own heap code above

int limit; // max num elts the cache can hold

int size; // current number elts in the cache

Cache_LFU (int maxElts) {

this.map = new HashMap<String, CacheFrame>();

this.heap = new MinBinHeap(maxElts);

this.limit = maxElts;

this.size = 0;

}

// dont change this we need it for grading

public MinBinHeap getHeap() { return this.heap; }

public HashMap getHashMap() { return this.map; }

// =========================================================

//

// you fill in code for the other ops in the interface

//

//==========================================================

}

package assignment4_f20;

public class Playground {

public static void main(String[] args) {

// Add more tests as methods and call them here!!

RunMytests();

// etc.

}

public static void RunMyTests() {

Cache_LFU lfc = new Cache_LFU(3);

lfc.refer("AA8C");

lfc.refer("AA8C");

lfc.refer("1234");

lfc.refer("234A");

lfc.refer("AA8C");

lfc.refer("234A");

lfc.refer("ABCD");

lfc.refer("234A");

lfc.refer("ABCD");

lfc.refer("1101");

lfc.refer("2202"); lfc.refer("2202");

lfc.refer("2202"); lfc.refer("2202");

System.out.println(lfc.size());

System.out.println(lfc.numElts());

printHeap(lfc.getHeap().getHeap(), lfc.getHeap().size());

// etc.

}

public static void printHeap(CacheFrame[] e,int len) {

// this method skips over unused 0th index....

System.out.println("Printing Heap");

for(int i=1; i< len+1; i++) {

System.out.print("(p."+e[i].value+",f"+e[i].priority+",s"+e[i].getSlot()+")\t");

}

System.out.print("\n");

}

}

package assignment4_f20;

import gradingTools.comp410f20.assignment4.testcases.Assignment4Suite;

public class RunTests {

public static void main(String[] args){ //runs Assignment 4 oracle tests

Assignment4Suite.main(args);

}

}

Ordering is done based on the integers as priorities. In the test data we use, the integer priorities will not be unique -- there may well be duplicate values. Every new frame object we put in the heap has priority 1 (frequency 1 reflecting its first reference). So most inserts will percolate upwards to near the root. Remember when you move elements around in the heap array to go to the frame object and update the "slot" field.

This operation returns a frame object. It does NOT alter the heap. The heap has the same elements in the same arrangement after as it did before. If getMin is done on an empty heap, return null.

This operation removes the root element from the heap. The size of the heap goes down by 1 (if the heap was not already empty). If delMin is done on an empty heap, treat it as a no-op... i.e., do nothing other than return void. Remember when you move elements around in the heap array to go to the frame object and update the "slot" field.

The size operation simply returns the count of how many elements are in the heap. It should be 0 if the heap is empty, and always return a 0 or greater.

The incElt operation is a very active method in our assignment, as it will be called many times when the Cache has a refer operation. The parameter is a pointer to a frame object, so you must access that object and first add 1 to its priority (do a ++ on the frequency field). We are not implementing a full "change by delta" operation like in your text... we are just doing a "delta" of +1 (and -1 for decElt). Once the frequency/priority is incremented, apply the bubble down (up) activity to move the frame to the new proper place in the heap array to maintain heap order.

The thing to watch with these operations (and all operations that move elements around in the heap array) is to make sure when a heap elements moves to a different array slot, you update the slot field in the frame object, to properly reflect where that frame object now resides in the array.

In decElt, watch out for decrementing a priority that is already 1. We wont allow frequency counts to go below 1. If this is attempted, just leave the frequency at 1.

Almost all the action in the cache is from this one operation, and it is just a bit complicated. However, if you properly use the interface ops of the HashMap and the MinBinHeap you will find that when the smoke clears, the code is about 15 lines (not counting comments).

There are two main possibilities when a call like " refer( '2A4C' ); " is done. First we check to see if the frame "2A4C" is in the cache. We do this by consulting the HashMap and find it either is there, or is not.

If "2A4C" is in the cache, we dont do much other than up the frequency count for that frame by 1. We do that by finding the frame object (HashMap) and then telling the MinBinHeap to do a incElt on the object.

If "2A4C" is not in the cache, we have a couple other choices. We have to see if the cache is full or not. If the cache is not full, then there is room for one more frame so we make a frame, put the frequency counter in that frame to 1, and insert it into both HashMap and the MinBinHeap.

If the cache IS full, then we do the most work. First we have to toss out a frame to make room for the new one. This means asking the MinBinHeap to delMin(), removing the root frame (which has the lowest frequency count); we also must remove the (address,frame) pair (address from the tossed frame) from the HashMap since we tossed it out of the cache. Then we have to what we do if the cache is not full... since it is now not full... we have made room for the new frame.

There is no specific output or format required for this assignment. We assume you will use the "print" capability provided (and potentially expand on it) to test your code beyond the scope of the oracle.

Furthermore, please note that just because the Oracle gives you 100, you are not guaranteed any specific grade on the assignment. We do try to put as many things as we can in there edge-case wise, but testing your programs is your responsibility. The main goal of the Oracle is to provide some direction. However, it is not the end-all be-all determiner of correctness. Please do not use this tool as a crutch.

Follow the instructions given in the assignment on Sakai.