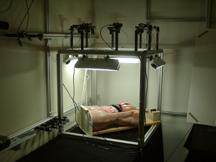

Camera Cube Acquisition System:

Under separate funding from the National Science Foundation, we developed an 8-camera acquisition rig for algorithm development and some preliminary data collection. We are working on using the same camera cube for this funded work, to collect 8 synchronized image streams from a few small-scale (1/2 meter cubed) tasks meant to imitate real procedures.

Imperceptible Structured Light (ISL):

We believe that one of the most critical goals related to 3D reconstruction is to improve the signal-to-noise ratio of the features in the 2D images used in the reconstruction. We previously developed novel ideas for doing so, using what we call “imperceptible structured light” (ISL). During his sabbatical, Professor Henry Fuchs chose ISL as one of his primary research areas. With collaborators Professor Markus Gross, Daniel Cotting, and Martin Naef at ETH (Zurich, Switzerland), he worked on a new approach that can be implemented using some commercial off-the-shelf digital projectors. Crucial to its success is our ability to control the light from the projector more precisely than a typical user can. For example, to combine the images of multiple overlapping projectors into a single seamless, large, high-resolution image, we need to know quite precisely the output light level at each projector pixel that corresponds to each input pixel value. In our many years of research with projectors, this is the first company whose projector gives us access to this precise control (through a separate serial communication port). Until now, we have had to reverse engineer this information, which is becoming increasingly impractical if we want others to be able to replicate our research results. This precise level of control is available in this projector to all customers, but much of it is not documented, since it is currently used only for engineering testing purposes.
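
To illustrate why the mapping from input pixel value to output light level matters for overlapping projectors, here is a minimal sketch in Python. It is not the ISL method or the projector's serial interface. The response table is a hypothetical stand-in (a gamma 2.2 curve) for a measured one, and the names `make_response_table`, `input_for_output`, and `blend_inputs` are assumptions made for this example.

```python
from bisect import bisect_left


def make_response_table(gamma=2.2, levels=256):
    """Hypothetical stand-in for a measured response curve.

    Entry v is the normalized light output (0..1) for input value v.
    A real table would come from measurement, not from a formula.
    """
    return [(v / (levels - 1)) ** gamma for v in range(levels)]


def input_for_output(table, target):
    """Return the input value whose light output is closest to `target`.

    Assumes the table is monotonically non-decreasing.
    """
    target = min(max(target, table[0]), table[-1])
    i = bisect_left(table, target)
    if i == 0:
        return 0
    # Choose whichever neighbouring entry is nearer to the target.
    return i if table[i] - target < target - table[i - 1] else i - 1


def blend_inputs(table, pixel_value, weight_a):
    """Split the light of one image pixel between two overlapping projectors.

    `weight_a` is the fraction of the light that projector A contributes.
    Returns the input values to send to projector A and projector B.
    """
    total_light = table[pixel_value]
    value_a = input_for_output(table, total_light * weight_a)
    value_b = input_for_output(table, total_light * (1.0 - weight_a))
    return value_a, value_b


if __name__ == "__main__":
    table = make_response_table()
    a, b = blend_inputs(table, 200, 0.5)
    print("inputs for an even split of pixel value 200:", a, b)
    print("summed light:", table[a] + table[b], "target:", table[200])
    print("naive halving of the input value gives:", 2 * table[100])
```

With a nonlinear response, halving the input value does not halve the light, so the overlap region would look too dark. Inverting the response table keeps the summed output close to the intended level, which is why precise knowledge of the response is needed.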

Transportable Capture/Compute Unit:

We recently purchased a 5-node cluster, which we plan to use as a mobile image capture and compute unit at UNC Hospitals. The system currently supports the reconstruction of 8 camera views in real time. The reconstructed data is then displayed for the user, in 3D, on an attached LCD monitor. We have also implemented software for head-tracked visualization of the 3D data.

3D Reconstruction:

We have been working to improve our previously NSF-funded 3D tele-immersion testbed in a manner that will lend itself well to this remote 3D medical consultation work. With long-time collaborators Kostas Daniilidis et al. at the University of Pennsylvania, we designed new trinocular camera mounts and have been installing them with our existing stereo display system. This is likely to be the basis for one end of our two-node “permanent” system.

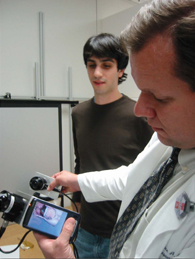

Hand-held Advisor Node:

We have put together a prototype to explore a two-handed surrogate interface idea that Dr. Welch had a few months ago. The idea is to estimate the pose of the PDA relative to a second device that serves as a “patient surrogate”. The second device could be, for example, the cover for the PDA. It could even have a person/patient drawn on it. The two-handed surrogate approach has two advantages. First, tracking a PDA relative to a second, nearby device is a much more tractable problem than tracking the general 6D pose (position and orientation) of a PDA in, for example, “world” coordinates. Second, we think that giving doctors a physical object that represents the patient will turn out to be far less confusing for them.
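
The quantity being estimated, the pose of the PDA relative to the surrogate, can be illustrated with a short Python sketch. It does not show how the prototype tracks anything. It only assumes, for the sake of illustration, that each device's pose is available as a rotation matrix and a translation in some shared frame. All function names are hypothetical.

```python
import math


def rot_z(deg):
    """3x3 rotation about the z axis by `deg` degrees."""
    a = math.radians(deg)
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def mat_mul(a, b):
    """Product of two 3x3 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def mat_vec(a, v):
    """Product of a 3x3 matrix and a 3-vector."""
    return [sum(a[i][k] * v[k] for k in range(3)) for i in range(3)]


def transpose(a):
    """Transpose, which is also the inverse for a rotation matrix."""
    return [list(row) for row in zip(*a)]


def relative_pose(pose_surrogate, pose_pda):
    """Pose of the PDA expressed in the surrogate's coordinate frame.

    Each pose is (R, t), mapping device coordinates into a shared frame.
    """
    r_s, t_s = pose_surrogate
    r_p, t_p = pose_pda
    r_s_inv = transpose(r_s)
    r_rel = mat_mul(r_s_inv, r_p)
    t_rel = mat_vec(r_s_inv, [p - s for p, s in zip(t_p, t_s)])
    return r_rel, t_rel


def move(pose, r_m, t_m):
    """Apply one rigid motion (r_m, t_m) to a pose in the shared frame."""
    r, t = pose
    moved_t = [a + b for a, b in zip(mat_vec(r_m, t), t_m)]
    return mat_mul(r_m, r), moved_t


if __name__ == "__main__":
    surrogate = (rot_z(30.0), [1.0, 2.0, 0.0])
    pda = (rot_z(75.0), [1.2, 2.1, 0.3])
    print(relative_pose(surrogate, pda))
    # Carry both devices somewhere else together: the relative pose is unchanged.
    motion = (rot_z(50.0), [5.0, -3.0, 1.0])
    print(relative_pose(move(surrogate, *motion), move(pda, *motion)))
```

The relative pose does not change when both devices are moved together, so the interface never needs to know where the user is standing, only how the two hand-held objects are arranged with respect to each other.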

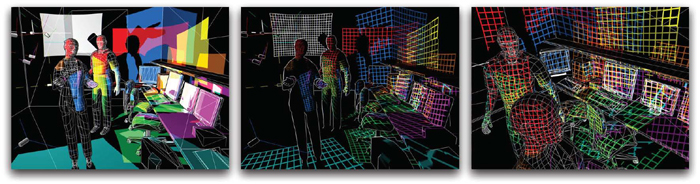

Optimal Sensor Placement:

Dr. Greg Welch and Andrei State have developed a geometric planning tool named Pandora. It is a software simulator that is “geometric” in that it only takes into account a camera’s field of view, resolution, and occlusions. The simulator is an interactive 3D graphics program that loads a set of 3D models representing the desired scene (room, furniture, people, props, etc.) and allows the user to specify a number of cameras in terms of position, orientation, and field of view. The program treats the cameras as shadow-casting light sources. Any part of the scene that is shadowed from a light source is invisible to the corresponding camera. By using a different color for each camera's light source and applying additive blending, we can visualize the areas covered by one or more cameras, as well as the areas that are completely shadowed (i.e., invisible to all cameras). This answers the first planning question: which parts of the scene each camera can see. We can also project a user-specified pattern onto the scene from each camera's light source. By using a grid pattern aligned with a camera's pixel grid, we can visualize the approximate imaging resolution for any visible element of the scene, which answers the second question: at what resolution those parts are imaged. See below for examples.
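
The idea of treating cameras as colored, shadow-casting lights can be sketched in a few lines of Python. This is a simplified 2D illustration, not Pandora itself, which is an interactive 3D graphics program. Cameras are reduced to a position, heading, and field of view, occluders are line segments, and all names are assumptions made for this example.

```python
import math


def _cross(ax, ay, bx, by):
    """z component of the 2D cross product."""
    return ax * by - ay * bx


def segments_intersect(p, q, a, b):
    """True if segment p-q properly crosses segment a-b."""
    d1 = _cross(q[0] - p[0], q[1] - p[1], a[0] - p[0], a[1] - p[1])
    d2 = _cross(q[0] - p[0], q[1] - p[1], b[0] - p[0], b[1] - p[1])
    d3 = _cross(b[0] - a[0], b[1] - a[1], p[0] - a[0], p[1] - a[1])
    d4 = _cross(b[0] - a[0], b[1] - a[1], q[0] - a[0], q[1] - a[1])
    return d1 * d2 < 0 and d3 * d4 < 0


def sees(camera, point, occluders):
    """True if `point` is inside the camera's field of view and unoccluded.

    `camera` is (position, heading in degrees, field of view in degrees).
    """
    pos, heading_deg, fov_deg = camera
    dx, dy = point[0] - pos[0], point[1] - pos[1]
    if dx == 0 and dy == 0:
        return True
    angle = math.degrees(math.atan2(dy, dx))
    # Signed angular offset from the heading, wrapped to [-180, 180).
    offset = (angle - heading_deg + 180.0) % 360.0 - 180.0
    if abs(offset) > fov_deg / 2.0:
        return False
    return not any(segments_intersect(pos, point, a, b) for a, b in occluders)


def coverage_color(cameras, colors, point, occluders):
    """Additively blend the colors of all cameras that see `point`.

    (0, 0, 0) means the point is shadowed from every camera.
    """
    r = g = b = 0
    for camera, color in zip(cameras, colors):
        if sees(camera, point, occluders):
            r, g, b = r + color[0], g + color[1], b + color[2]
    return (min(r, 255), min(g, 255), min(b, 255))


if __name__ == "__main__":
    cameras = [((0, 0), 0.0, 90.0), ((10, 0), 180.0, 90.0)]
    colors = [(255, 0, 0), (0, 255, 0)]   # red light, green light
    wall = [((5, -1), (5, 1))]            # one occluding segment
    for point in [(3, 0), (5, 3), (5, 20)]:
        print(point, coverage_color(cameras, colors, point, wall))
```

In this example a point lit only by the first camera comes out red, a point seen by both comes out yellow, and a point outside both fields of view stays black, mirroring how the blended colors reveal single coverage, overlap, and blind spots.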

Portable Camera Unit:

The Portable Camera Unit (PCU) was originally designed and constructed for use at UNC Hospital’s Burn Center. The objective was to create a mobile camera platform that could be wheeled into patient rooms and extended over the patient’s bed to record live surgeries. Initially the PCU held only two PTZ cameras and a PC for data storage. Extensive modifications were made to the PCU to retrofit it for service in the HPS lab. The PCU continues to serve as a mobile camera platform, but it can now also act as a 2D teleconferencing system. The flat-panel monitor at the base displays the camera view of the remote physician, while audio is captured by the centrally mounted microphone and played through the speakers mounted at the base.

Remote Monitoring Station:

The Remote Monitoring Station (RMS) was designed to accomplish two related tasks. The first was to provide a 2D teleconferencing system superior to current systems, and the second was to record audio and video during each session. The RMS uses high-end consumer-grade video cameras that are linked directly to the monitors via an S-video connection. This arrangement not only provides improved resolution but also eliminates the lag associated with sending images over the network, as would be present with current 2D teleconferencing equipment. The audio is also sent directly from microphone to speakers to achieve a clean, lag-free signal. To cover the recording tasks, the RMS has three DVD recorders. These record video streams from a quad split and two PTZ cameras. The audio is sent from the mixer to the DVD recorders and is then looped through them.
