Roni Sengupta

|

|

I am an Assistant Professor of Computer Science at the University of North Carolina at Chapel Hill, where I lead the Spatial & Physical Intelligence Lab (SPIN Lab). Previously, I was a Postdoctoral Research Associate in Computer Science & Engineering at the University of Washington (2019-2022), working with Prof. Steve Seitz, Prof. Brian Curless and Prof. Ira Kemelmacher-Shlizerman in the UW Reality Lab and GRAIL. I completed my Ph.D. (2013-2019) at the University of Maryland - College Park (UMD), advised by Prof. David Jacobs, and my undergraduate degree (2009-2013) in Electronics and Tele-Communication Engineering at Jadavpur University, Kolkata, India. I have also had the pleasure of working with many amazing researchers from NVIDIA Research, Snapchat Research, The Weizmann Institute of Science (Israel), and TU Dortmund (Germany). Email: ronisen at cs.unc.edu |

Research Interest

My research lies at the intersection of Computer Vision and Computer Graphics, mainly centered around 3D Vision and Computational Photography. My lab, the Spatial & Physical Intelligence Lab (SPIN Lab), is particularly interested in developing AI techniques for solving Inverse Graphics problems, where the goal is to decompose images and videos into their intrinsic components: geometry, motion, material reflectance, material properties, and lighting. We solve Inverse Graphics problems to advance various applications in visual content creation and editing, telepresence, AR/VR, robotics, and healthcare.

Spatial & Physical Intelligence Lab (SPIN Lab)

PhD students

| Luchao Qi | Jun Myeong Choi | Daniel Rho |
| --- | --- | --- |
| 4th year | 4th year | 2nd year |

| Ana Xiong | Noah Frahm | Yixing Lu |
| --- | --- | --- |
| 2nd year | 2nd year | 1st year |

MS students

|

|

| Andrea Dunn Beltran | Prakrut Patel |

Undergraduate students

|

| Amisha Wadhwa |

Alumni

Former PhD Students

- Jiaye Wu (PhD) (co-advised with David Jacobs at U. Maryland).

- Daniel Lichy (PhD) (co-advised with David Jacobs at U. Maryland), now at Kitware.

- Dongxu Zhao (PhD) (co-advised with Jan-Michael Frahm), now at Google.

Former MS/BS Students

- Annie Wang (MS), now at Databricks

- Bang Gong (BS-MS)

- Pierre-Nicolas Perrin (BS-MS), now at Capital One

- Yulu Pan (BS), now MS at UNC

- Max Christman (BS), now MS at UNC

- Andrey Ryabtsev (BS-MS) (University of Washington), now at Google

- Peter Lin (BS-MS) (University of Washington), now at ByteDance

- Jackson Stokes (BS-MS) (University of Washington), now at Google

- Peter Michael (BS-MS) (University of Washington), now PhD at Cornell University

Teaching

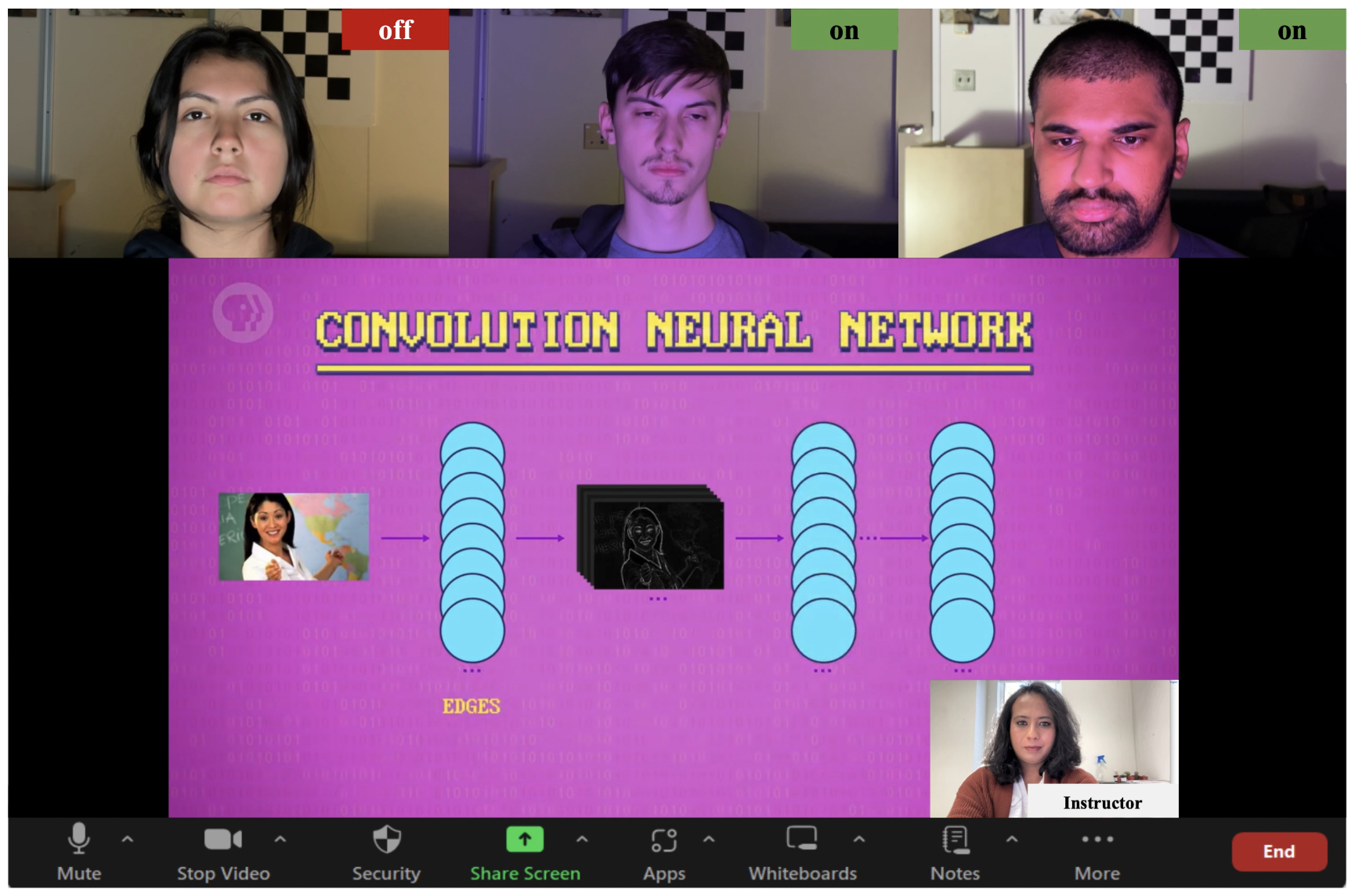

Instructor: COMP 590: Introduction to Computer Vision (Undergraduate focused): Fall 2024

Instructor: COMP 790: 3D Generative Model: Spring 2024

Instructor: COMP 776/590: Computer Vision in 3D World (Graduate focused): Spring 2023, Fall 2023, Spring 2025

Instructor: COMP 790/590: Neural Rendering: Fall 2022

Pre-prints

|

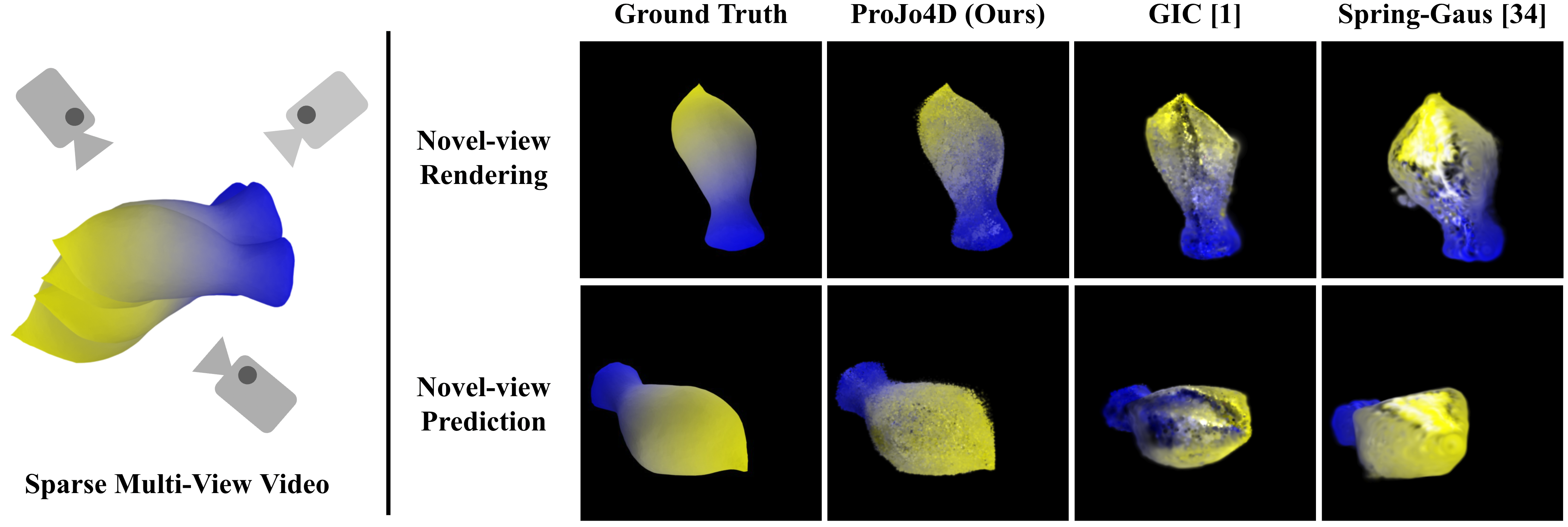

ProJo4D: Progressive Joint Optimization for Sparse-View Inverse Physics Estimation ProJo4D recovers 3D shape and physical behavior of deformable objects from sparse-view inputs using a progressive joint-optimization framework for inverse physics estimation. |

|

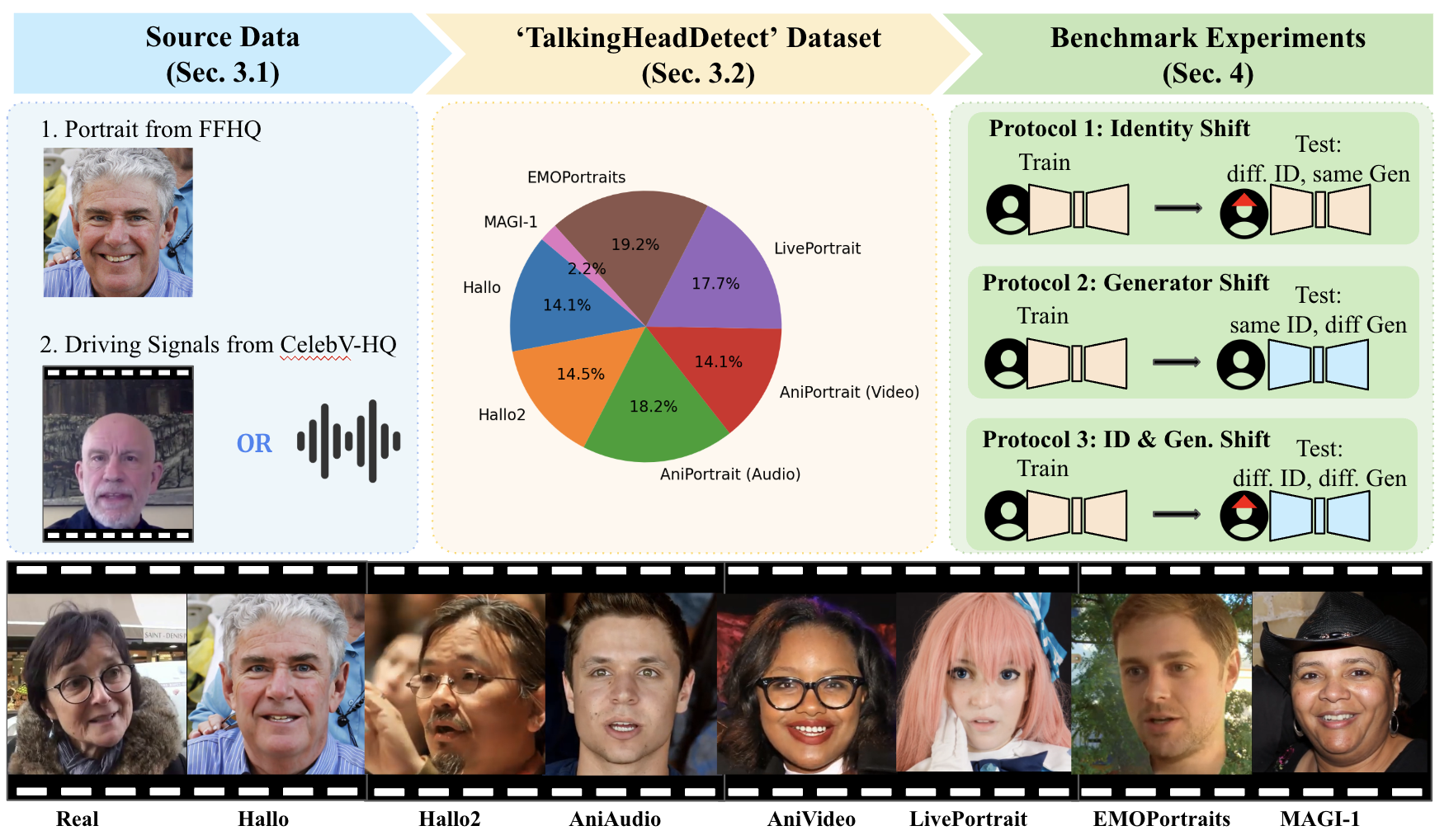

TalkingHeadBench: A Multi-Modal Benchmark & Analysis of Talking-Head DeepFake Detection |

|

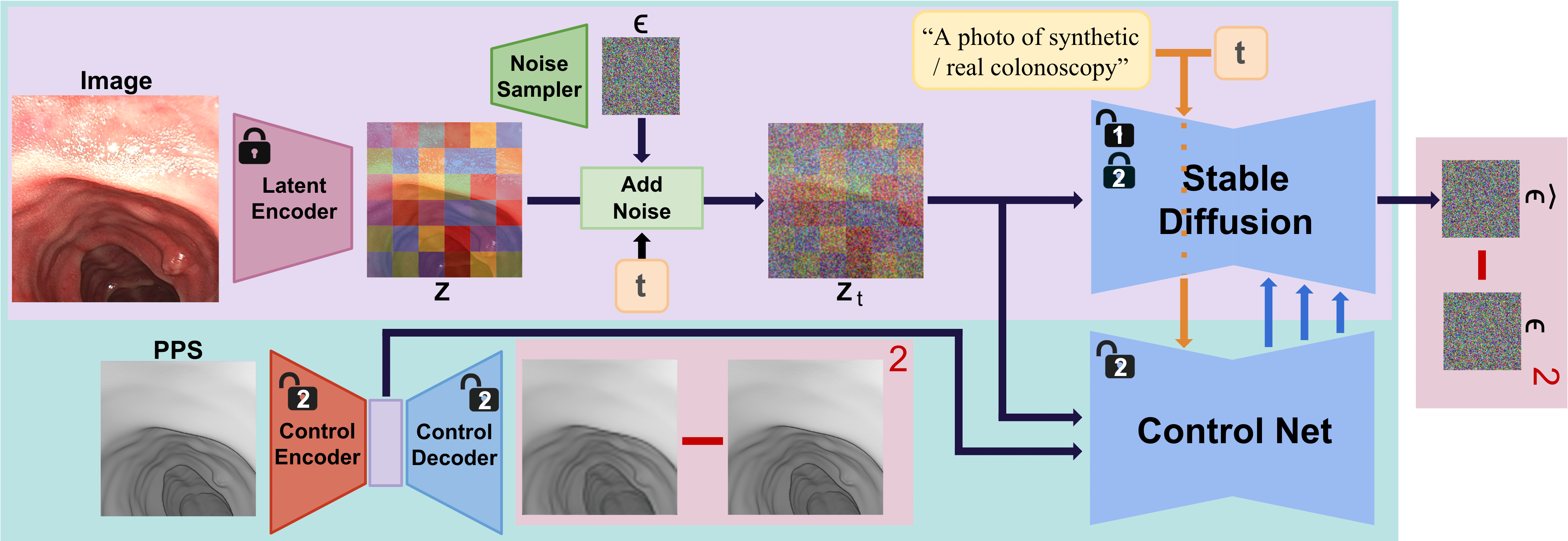

PPS-Ctrl: Controllable Sim-to-Real Translation for Colonoscopy Depth Estimation PPS-Ctrl is an image translation framework that fuses Stable Diffusion and ControlNet, guided by a physics-informed Per-Pixel Shading map for realistic and structure-preserving endoscopy image translation. |

|

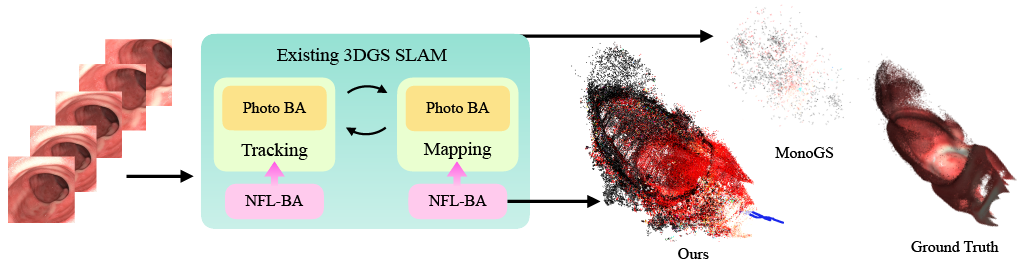

NFL-BA: Improving Endoscopic SLAM with Near-Field Light Bundle Adjustment We introduce a novel Bundle Adjustment loss that uses lighting cues (points closer to and facing the camera reflect more light) to improve pose and map estimation for dense visual SLAM algorithms in endoscopy (results on 3D Gaussian SLAMs). |

|

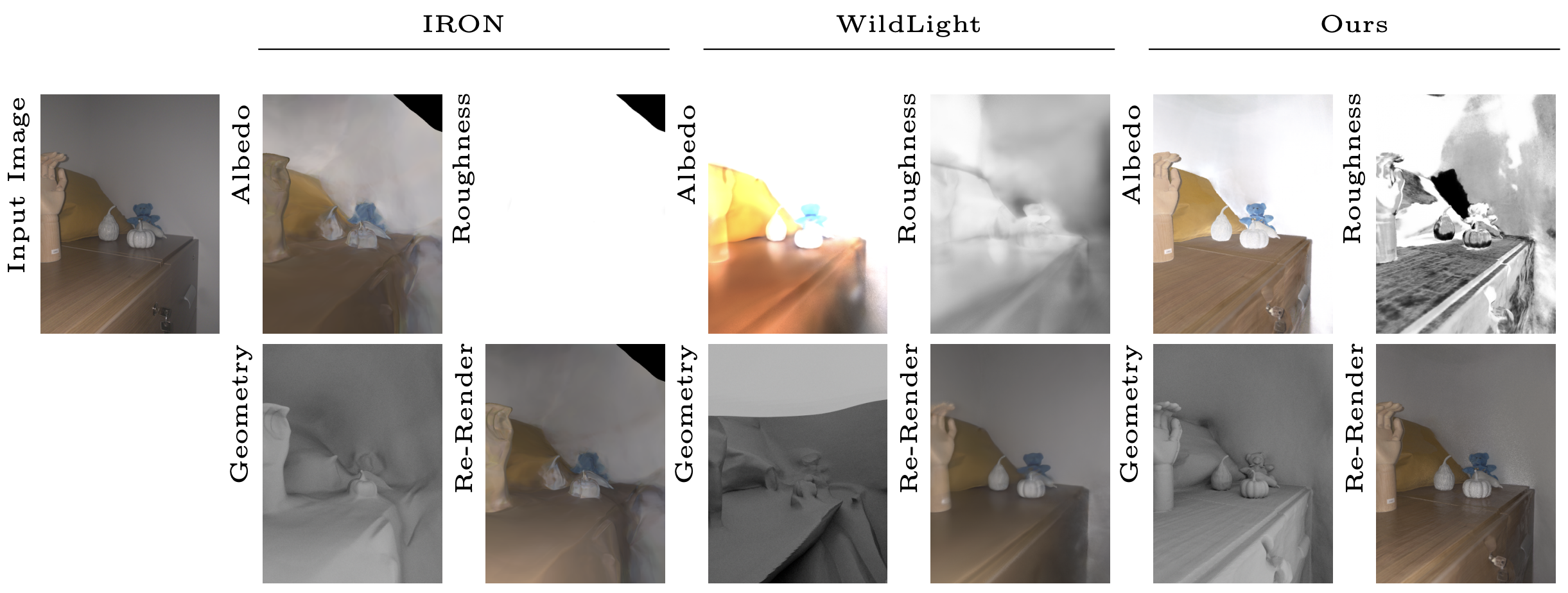

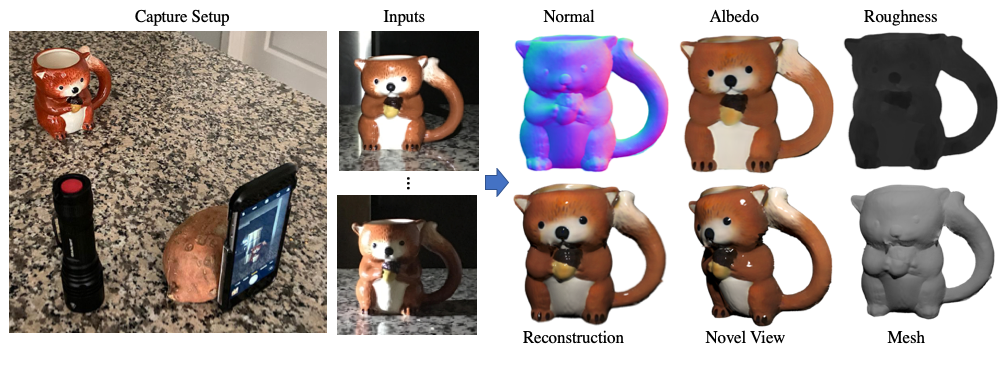

GaNI: Global and Near Field Illumination Aware Neural Inverse Rendering GaNI is a Global and Near-field Illumination-aware neural inverse rendering technique that reconstructs geometry, albedo, and roughness parameters from images of a scene captured with a co-located light and camera. |

Published

|

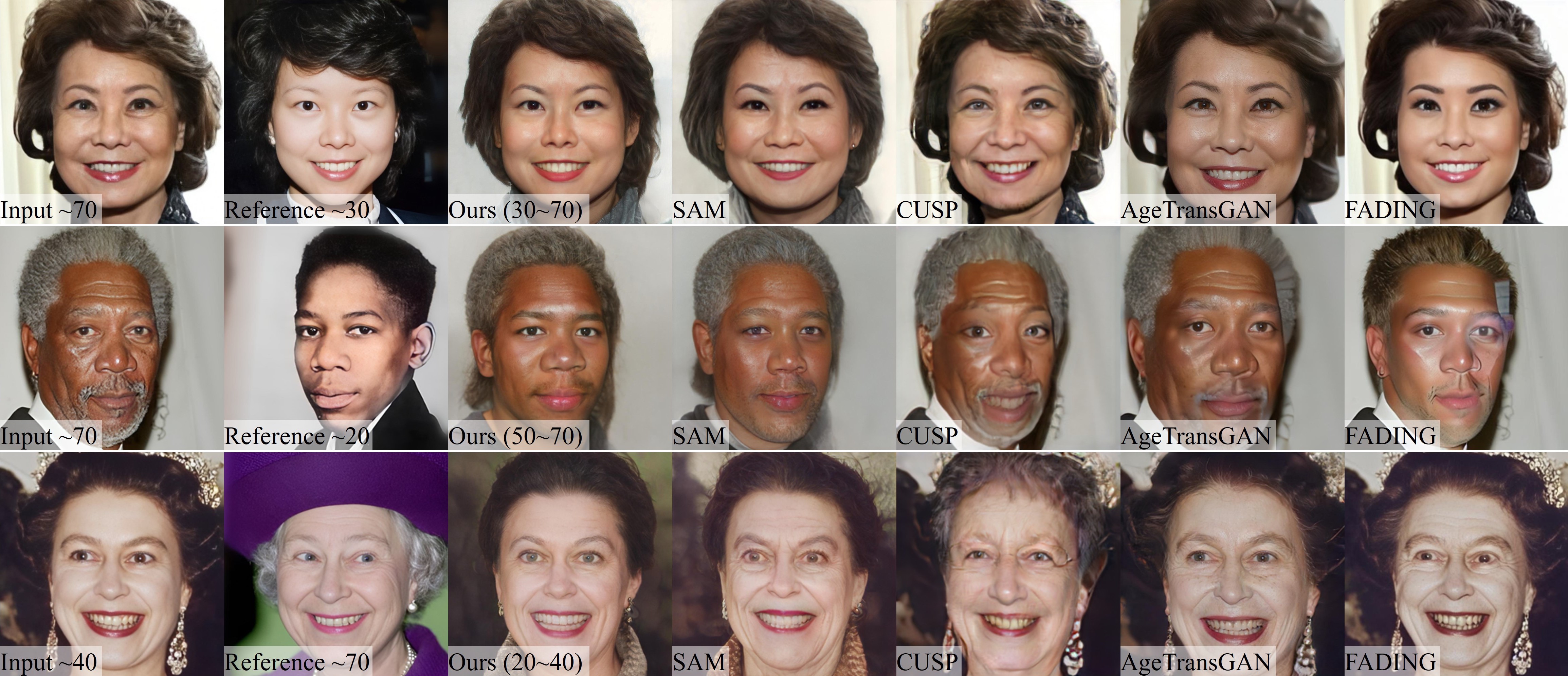

MyTimeMachine: Personalized Facial Age Transformation We personalize a pre-trained global aging prior using 50 personal selfies, allowing age regression (de-aging) and age progression (aging) with high fidelity and identity preservation. |

|

ScribbleLight: Single Image Indoor Relighting with Scribbles ScribbleLight is a generative model that supports local fine-grained control of lighting effects through scribbles that describe changes in lighting. |

|

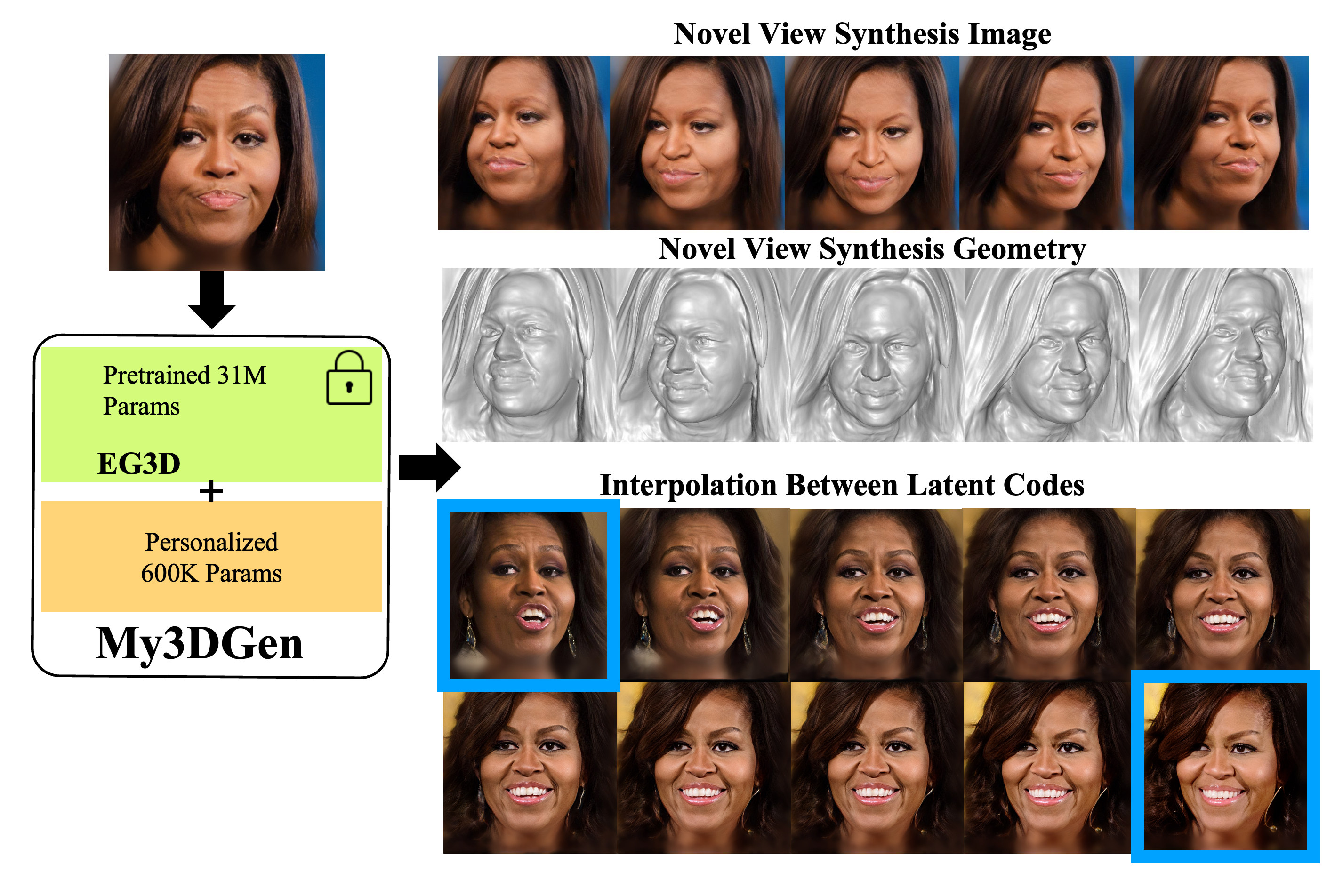

My3DGen: A Scalable Personalized 3D Generative Model We propose a parameter-efficient approach for building personalized 3D generative priors by updating only 0.6 million parameters, compared to full finetuning of 31 million parameters. Personalized 3D generative priors can reconstruct any test image and synthesize novel 3D images of an individual without any test-time optimization or finetuning. |

|

Continual Learning of Personalized Generative Face Models with Experience Replay We introduce a continual learning problem of updating personalized 2D and 3D generative face models without forgetting past representations as new photos are regularly captured. |

|

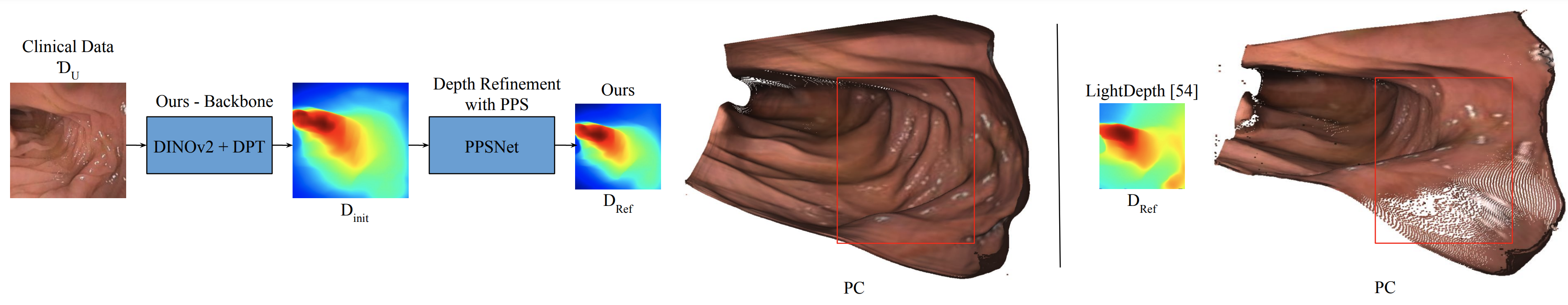

Leveraging Near-Field Lighting for Monocular Depth Estimation from Endoscopy Videos We model near-field lighting, emitted by the endoscope and reflected by the surface, as Per-Pixel Shading (PPS). We use PPS features to perform depth refinement (PPSNet) on clinical endoscopy videos with transfer learning of foundation models with self-supervision. |

|

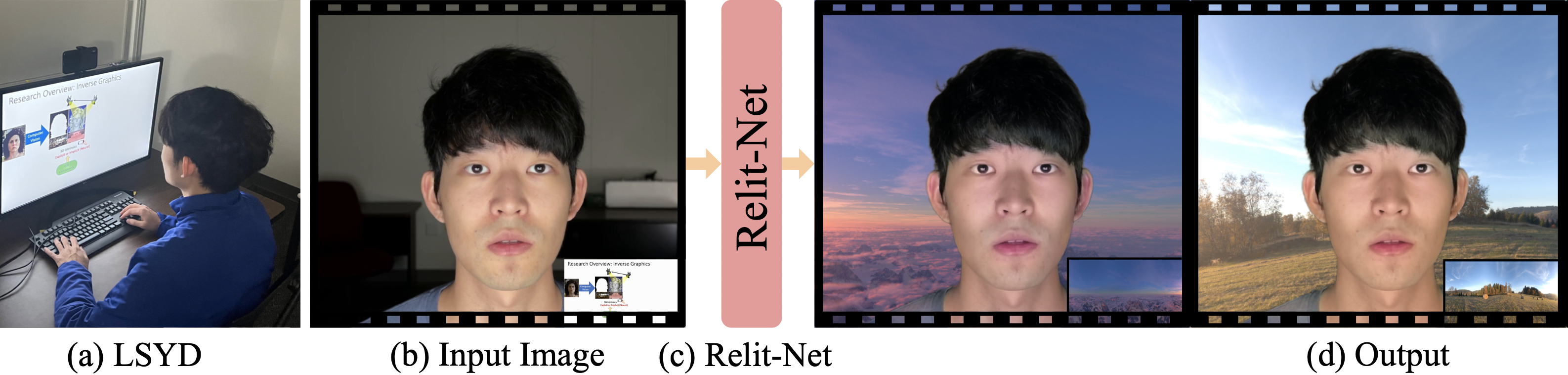

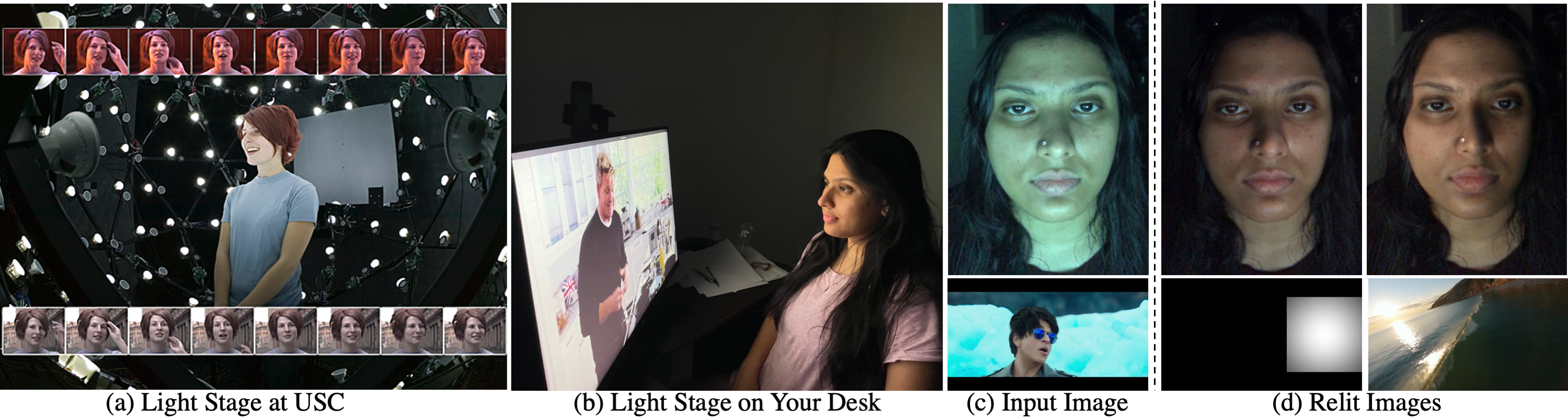

Personalized Video Relighting With an At-Home Light Stage We show how to build a high-quality face relighting model by recording a person watching YouTube videos on their monitor (an at-home Light Stage) instead of relying on expensive data capture with a Light Stage. |

|

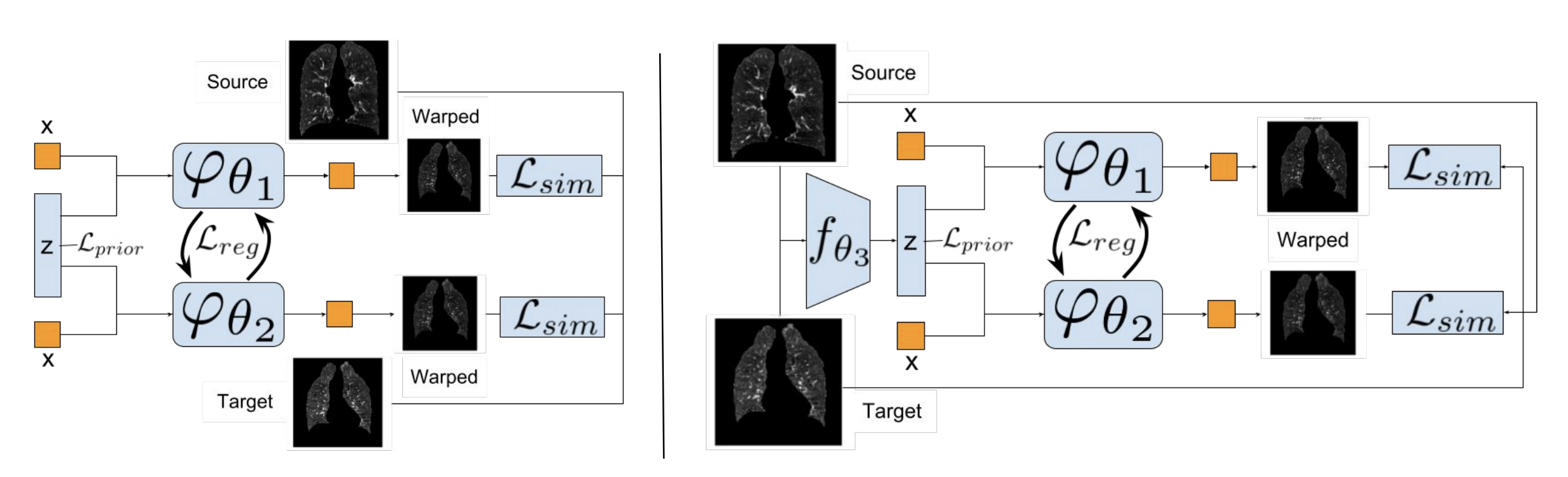

NePhi: Neural Deformation Fields for Approximately Diffeomorphic Medical Image Registration NePhi produces a neural deformation field for medical image registration with lower memory consumption than existing voxel-based deformations, making it possible to apply image registration approaches to high-resolution images. |

|

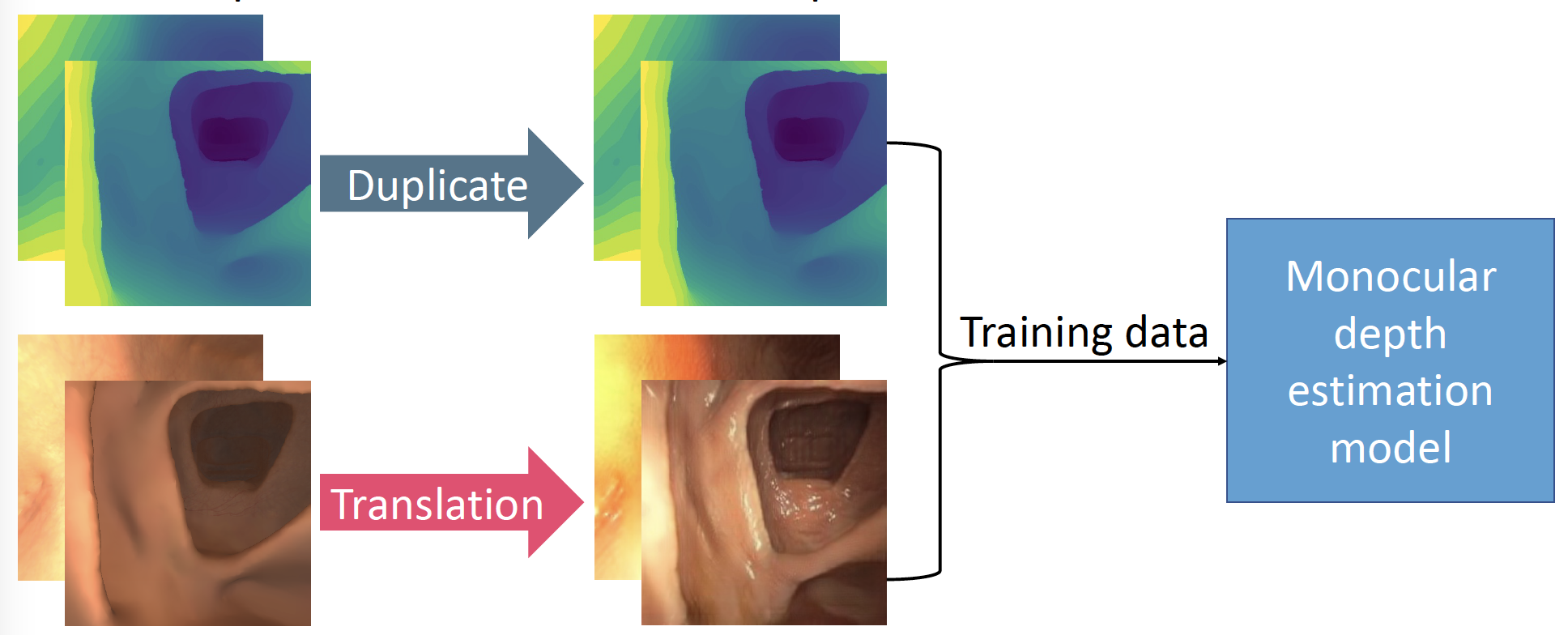

Structure-preserving Image Translation for Depth Estimation in Colonoscopy Monocular depth estimators suffer from a Sim2Real gap. We use a GAN with a structure-preserving loss for Sim2Real transfer, producing SOTA depths on clinical data. |

|

Building Secure and Engaging Video Communication by Using Monitor Illumination We use light reflected from the monitor to detect if a person in a video call is real/live (on) or deepfake (off). |

|

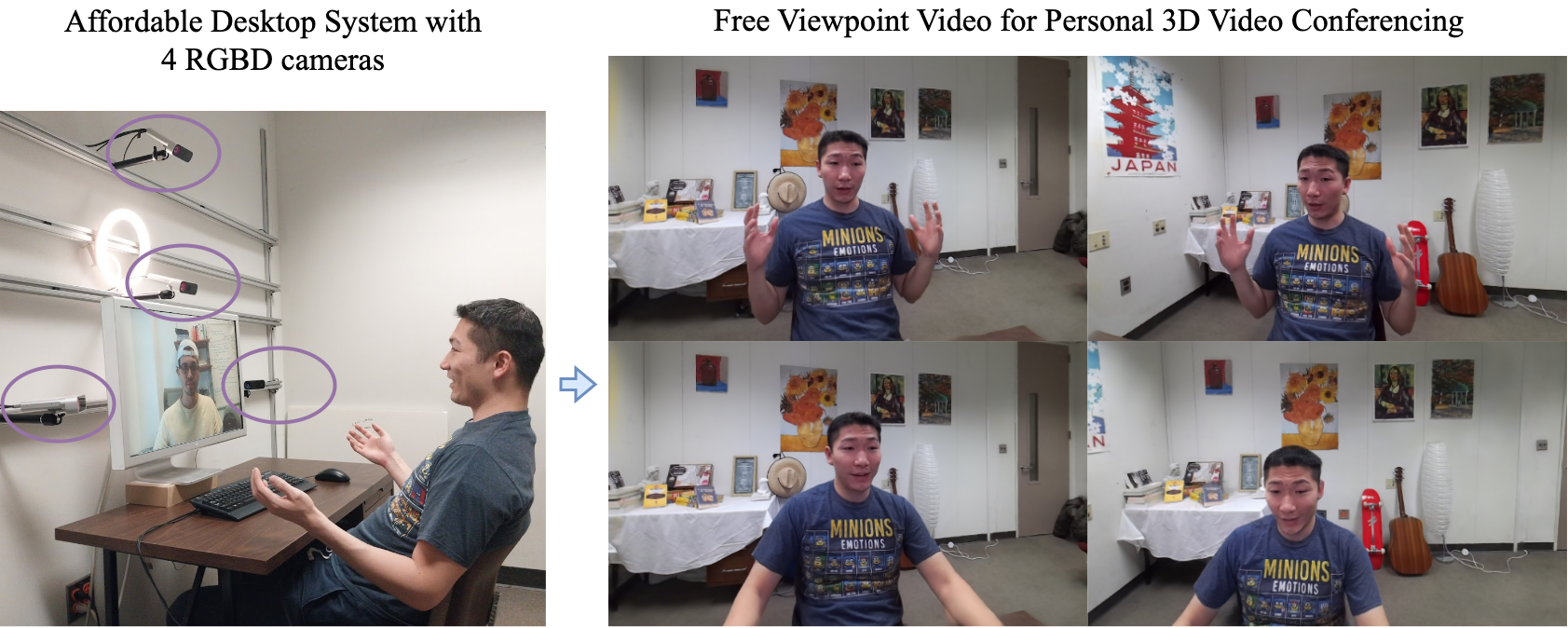

Bringing Telepresence to Every Desk We introduce a novel system that renders high-quality novel views from four RGBD cameras in a teleconferencing setup, based on a novel multiview point cloud rendering algorithm. |

|

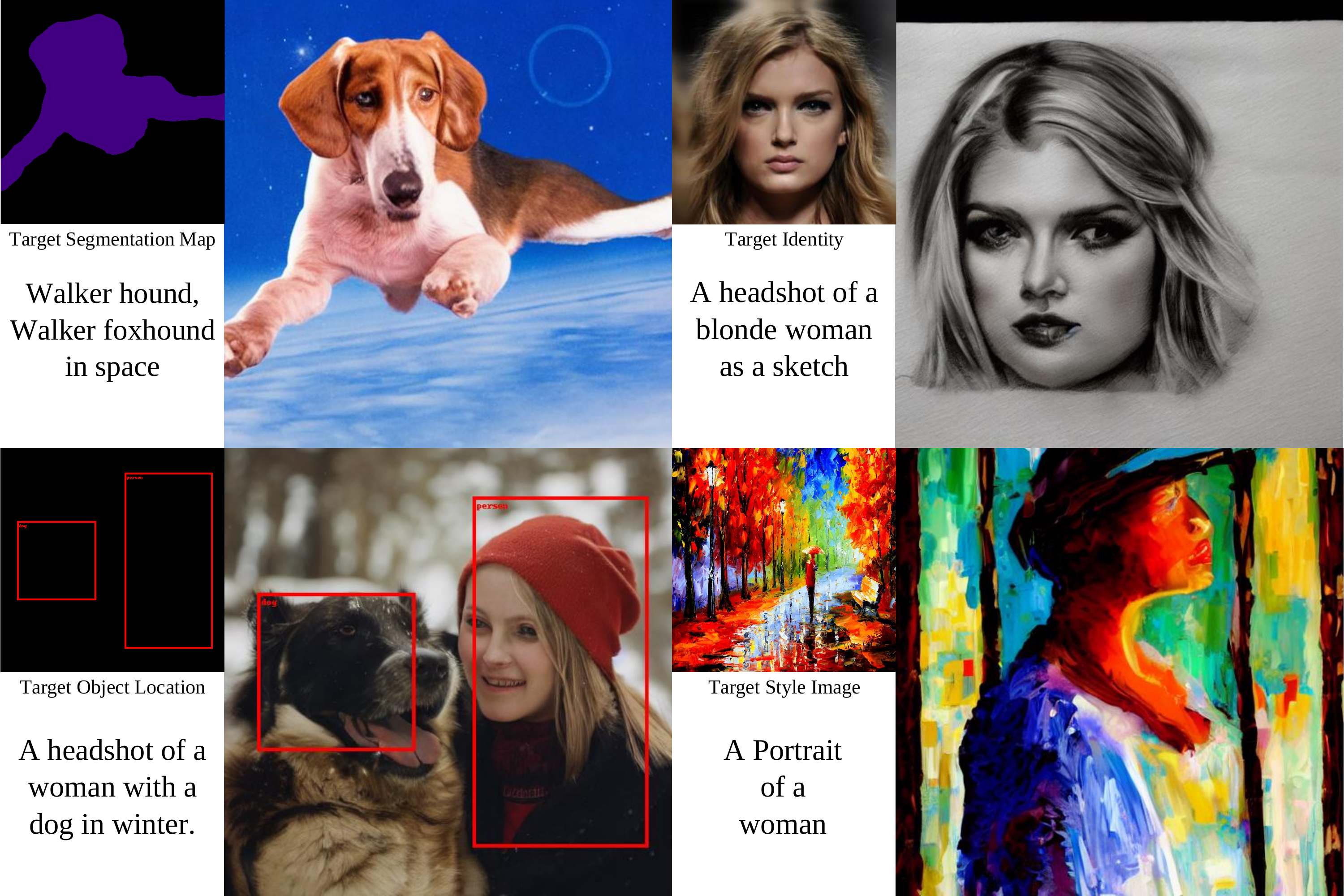

Universal Guidance for Diffusion Models Universal guidance enables controlling diffusion models with arbitrary guidance modalities without the need to retrain any use-specific components. |

|

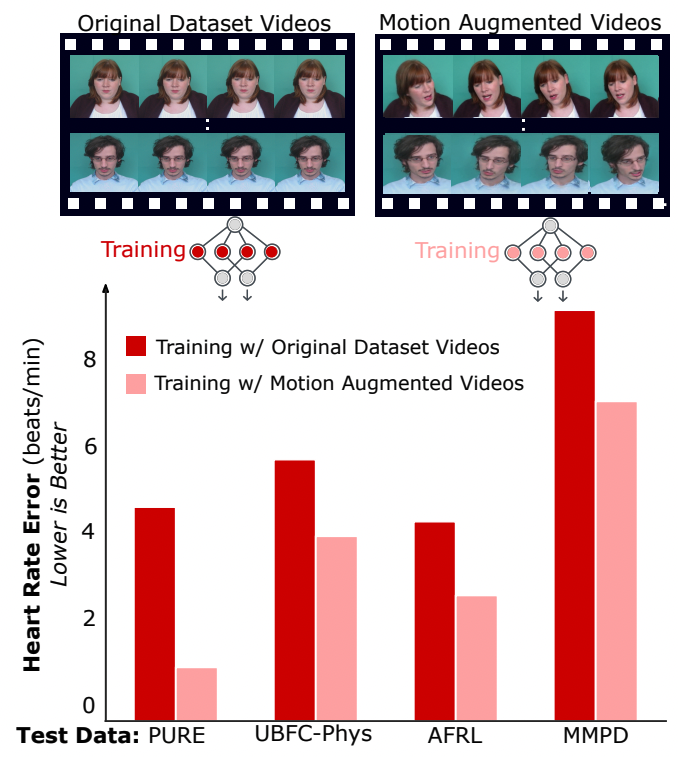

Motion Matters: Neural Motion Transfer for Better Camera Physiological Sensing Neural Motion Transfer serves as an effective data augmentation technique for PPG signal estimation from facial videos. We devise the best strategy to augment publicly available datasets with motion augmentation, improving by up to 75% over SOTA techniques on five benchmark datasets. |

|

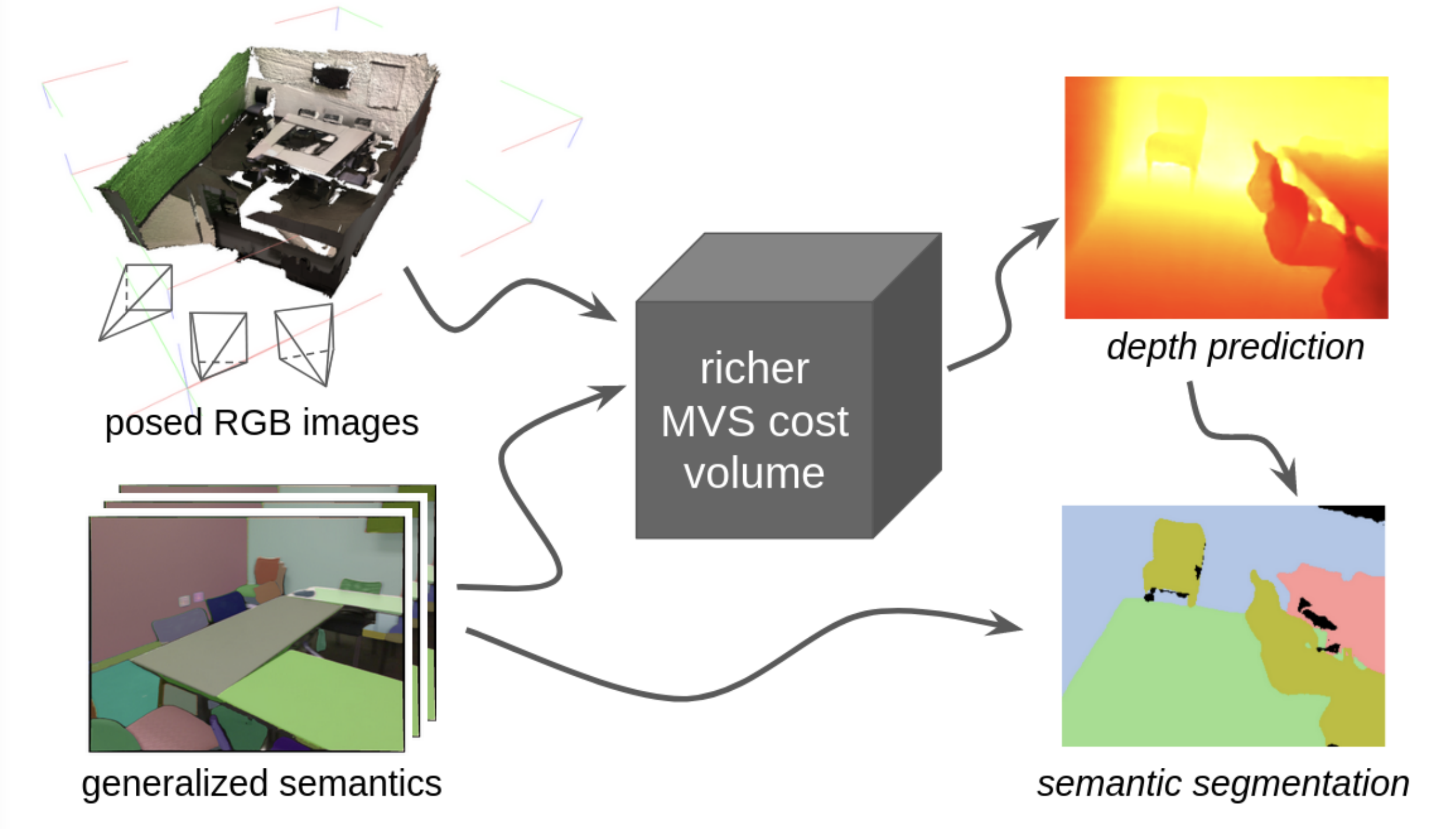

Joint Depth Prediction and Semantic Segmentation with Multi-View SAM Generalized semantic features from the Segment Anything Model (SAM) help to build a richer cost volume for MVS. In turn, the depth predicted from the cost volume serves as a rich prompt for improving semantic segmentation. |

|

|

rPPG-Toolbox: Deep Remote PPG Toolbox We present a comprehensive toolbox, rPPG-Toolbox, that contains unsupervised and supervised rPPG models with support for public benchmark datasets, data augmentation, and systematic evaluation. |

|

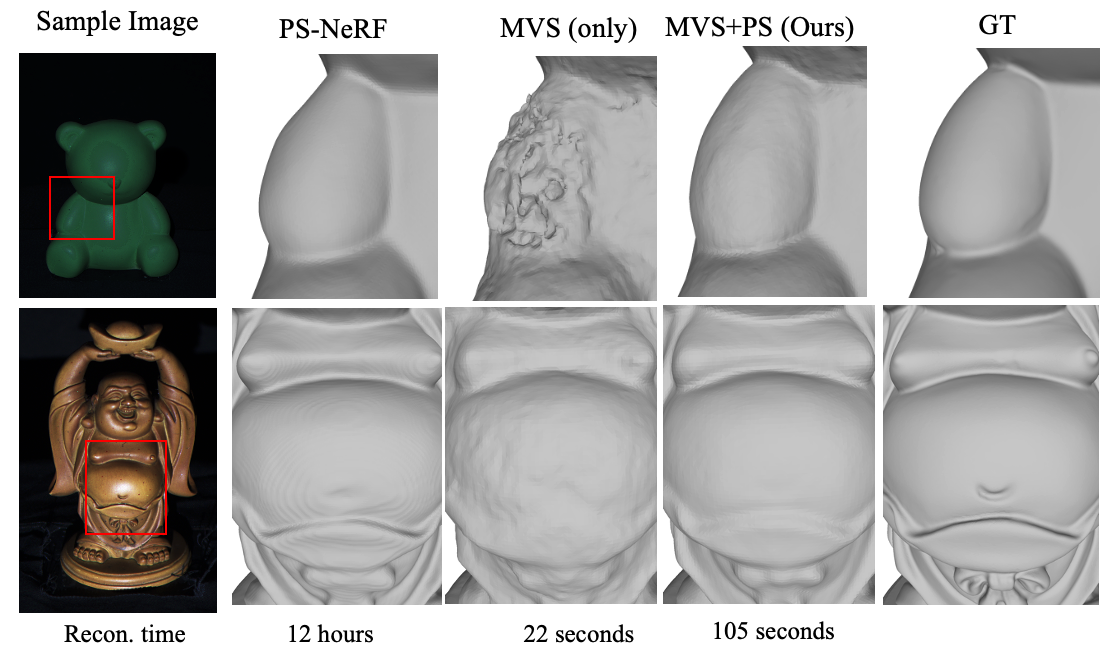

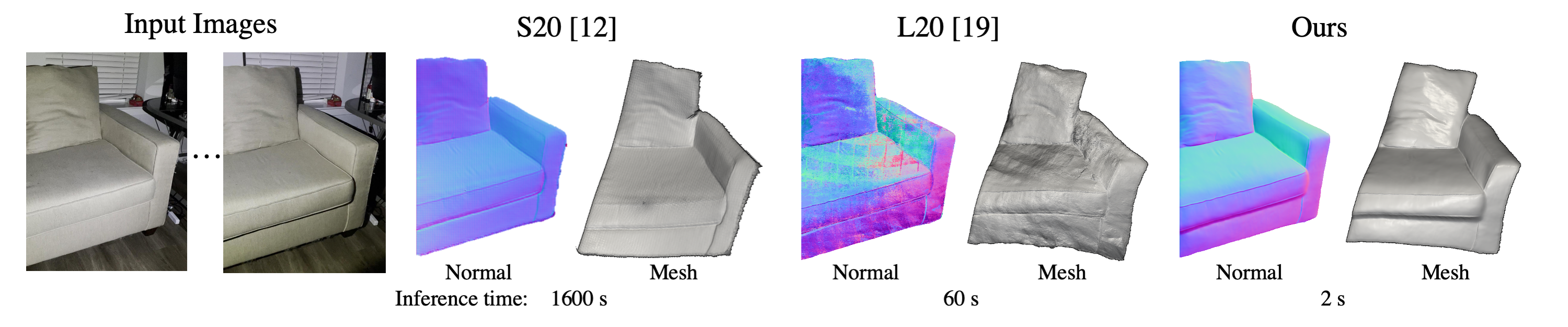

MVPSNet: Fast Generalizable Multi-view Photometric Stereo We propose a generalizable approach to multi-view photometric stereo that is significantly better than multi-view stereo alone. It produces the same reconstruction quality while being 400x faster than per-scene optimization techniques. |

|

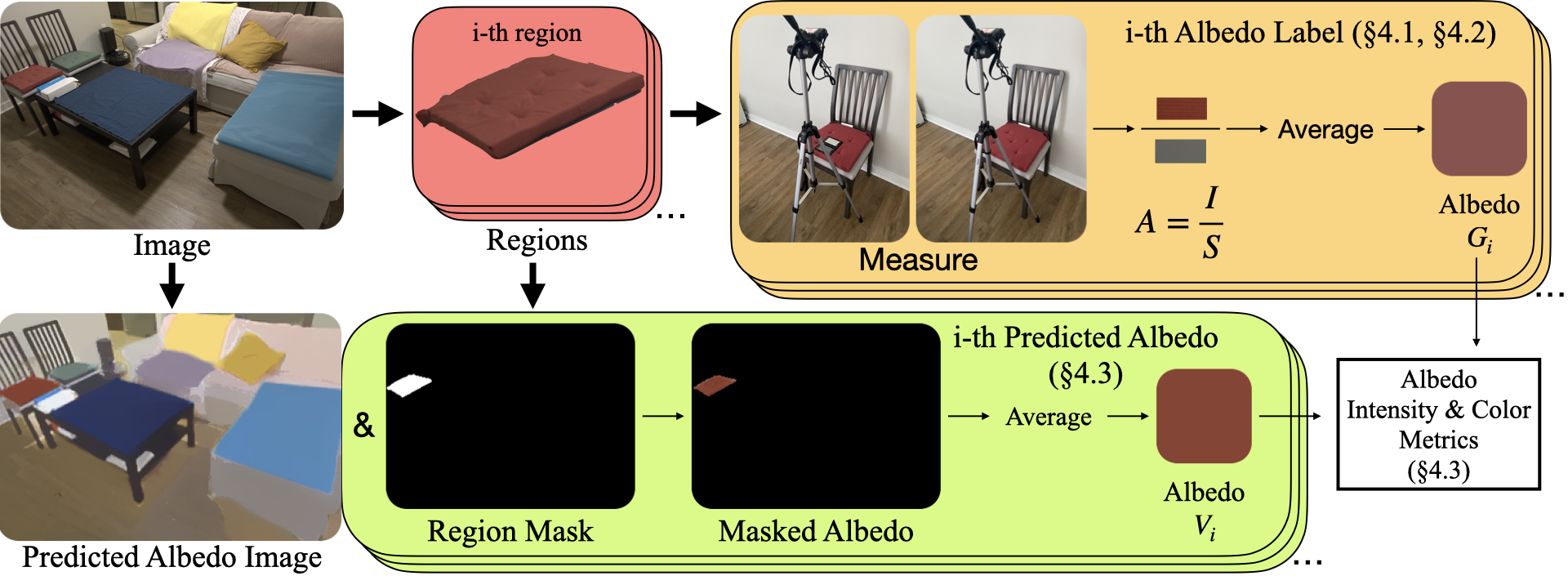

Measured Albedo in the Wild: Filling the Gap in Intrinsics Evaluation The existing benchmark (WHDR metric on IIW) for evaluating intrinsic image decomposition in the wild is often incomplete, as it relies on pair-wise relative human judgements. To comprehensively evaluate albedo, we collect a new dataset, Measured Albedo in the Wild (MAW), and propose three new metrics that complement WHDR: intensity, chromaticity and texture metrics. We show that SOTA inverse rendering and intrinsic image decomposition algorithms overfit to the WHDR metric, and that our proposed MAW benchmark evaluates these algorithms in a way that matches their visual quality. |

|

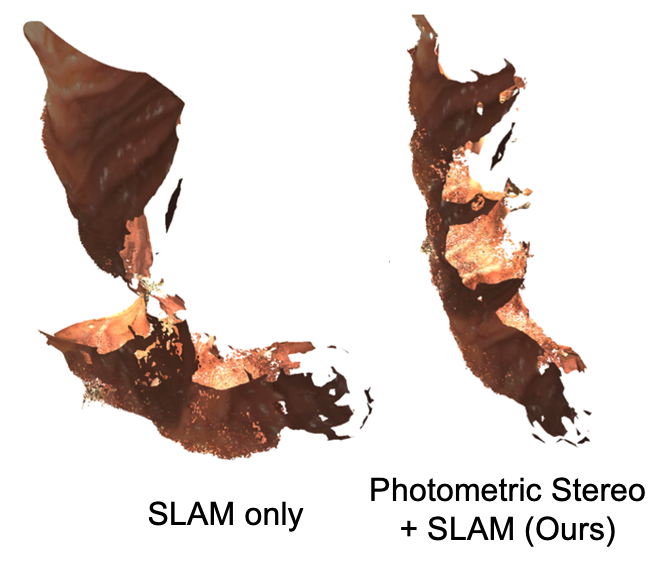

A Surface-normal Based Neural Framework for Colonoscopy Reconstruction We use SLAM together with near-field Photometric Stereo for 3D colon reconstruction from colonoscopy videos. |

|

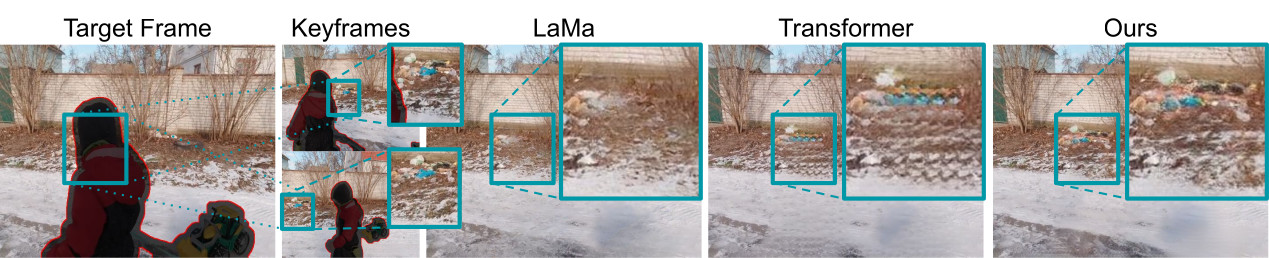

Towards Unified Keyframe Propagation Models We present a two-stream approach for video in-painting, where high-frequency features interact locally and low-frequency features interact globally via an attention mechanism. |

|

Real-Time Light-Weight Near-Field Photometric Stereo Near-field Photometric Stereo is useful for 3D imaging of large objects. We capture multiple images of an object by moving a flashlight and reconstruct the 3D mesh. Our method is significantly faster and more memory-efficient while producing better quality than SOTA methods. |

|

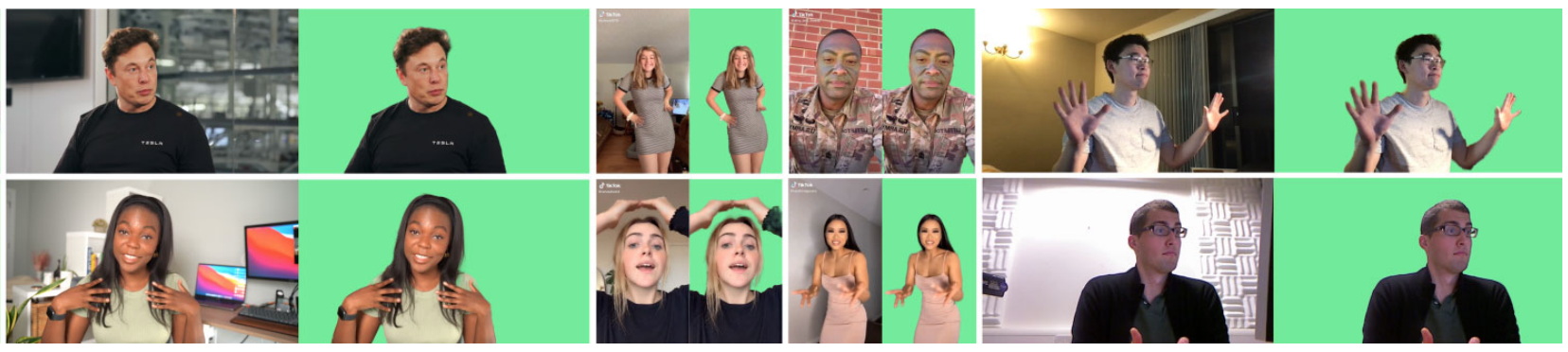

Robust High-Resolution Video Matting with Temporal Guidance Background removal, a.k.a. alpha matting, on videos by exploiting temporal information with a recurrent architecture. Does not require capturing a background image or manual annotations. |

|

A Light Stage on Every Desk We learn a personalized relighting model by capturing a person watching YouTube videos. Potential applications include relighting during a Zoom call. |

|

Shape and Material Capture at Home High-quality Photometric Stereo can be achieved with a simple flashlight. Our method recovers high-resolution geometry and reflectance by progressively refining the predictions at each scale, conditioned on the prediction at the previous scale. |

|

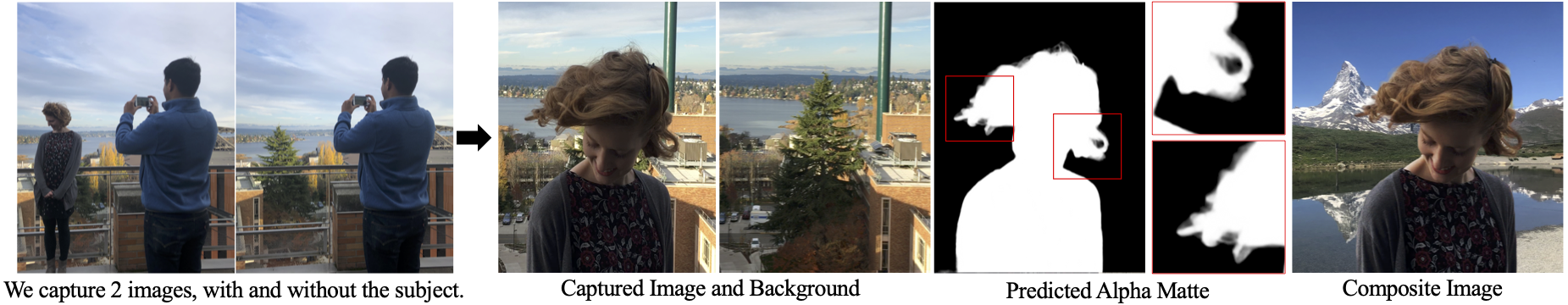

Real-Time High Resolution Background Matting Background replacement at 30fps on 4K and 60fps on HD. The alpha matte is first extracted at low resolution and then selectively refined with patches. |

|

Lifespan Age Transformation Synthesis Age transformation from 0-70. Continuous aging is modeled by assuming 10 anchor age classes with interpolation in the latent space between them. |

|

Background Matting: The World is Your Green Screen By simply capturing an additional image of the background, the alpha matte can be extracted easily without requiring extensive human annotation in the form of a trimap. |

|

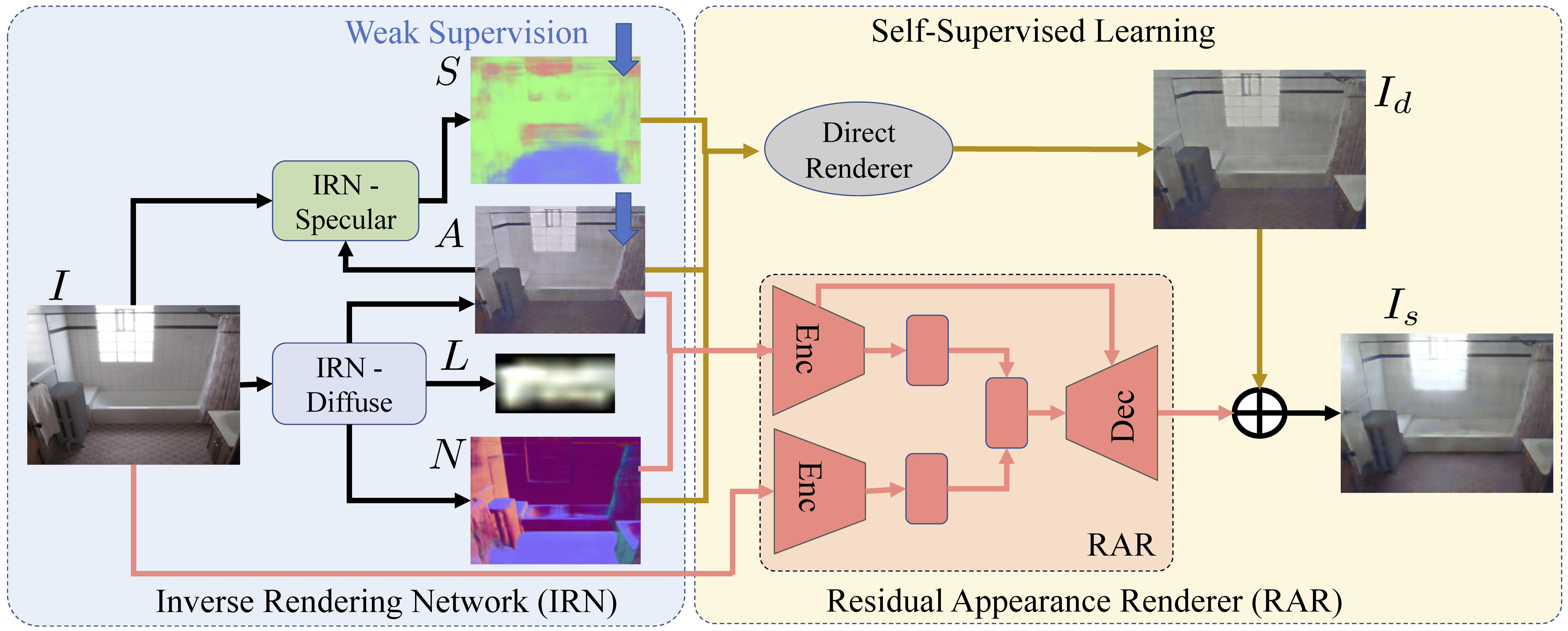

Neural Inverse Rendering of an Indoor Scene from a Single Image Self-supervision on real data is achieved with a Residual Appearance Renderer network. It can cast shadows, add inter-reflections and near-field lighting, given the normal and albedo of the scene. |

|

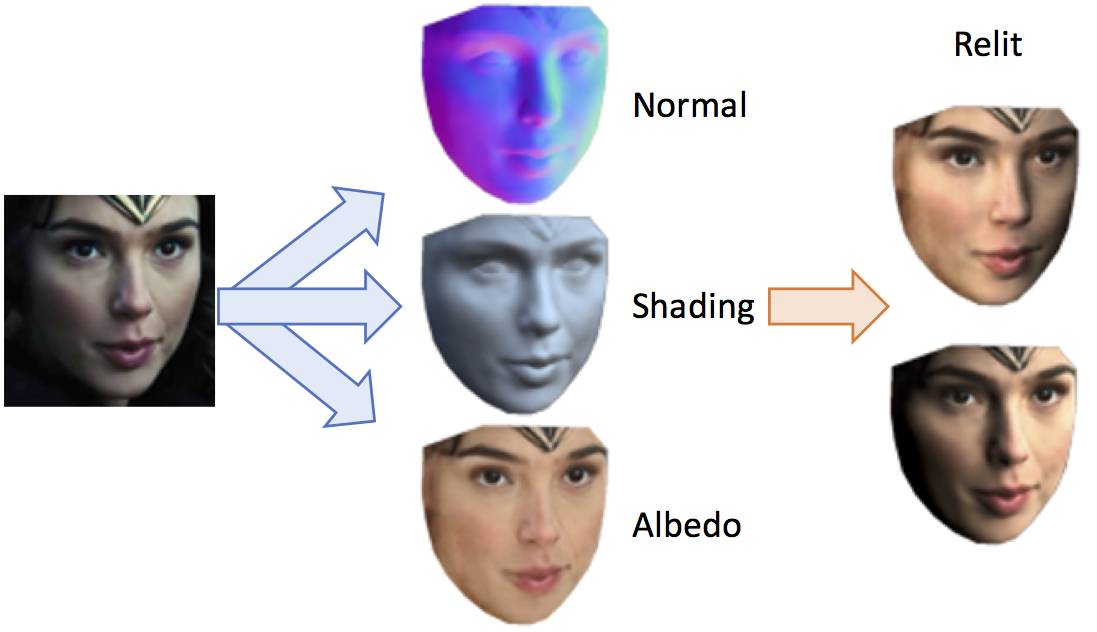

SfSNet: Learning Shape, Reflectance and Illuminance of Faces in the Wild Decomposes an unconstrained human face into surface normal, albedo and spherical harmonics lighting. Learns from synthetic 3DMM followed by self-supervised finetuning on unlabelled real images. Roni Sengupta, Daniel Lichy, Angjoo Kanazawa, Carlos D. Castillo, David Jacobs. IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI) 2020. [Paper] Introduces SfSMesh, which uses the surface normals predicted by SfSNet to reconstruct a 3D face mesh. |

|

A New Rank Constraint on Multi-view Fundamental Matrices, and its Application to Camera Location Recovery We prove that a matrix formed by stacking fundamental matrices between pairs of images has rank 6. We then introduce a non-linear optimization algorithm based on ADMM, that can better estimate the camera parameters using this rank constraint. This improves Structure-from-Motion algorithms which require initial camera estimation (bundle adjustment). |

|

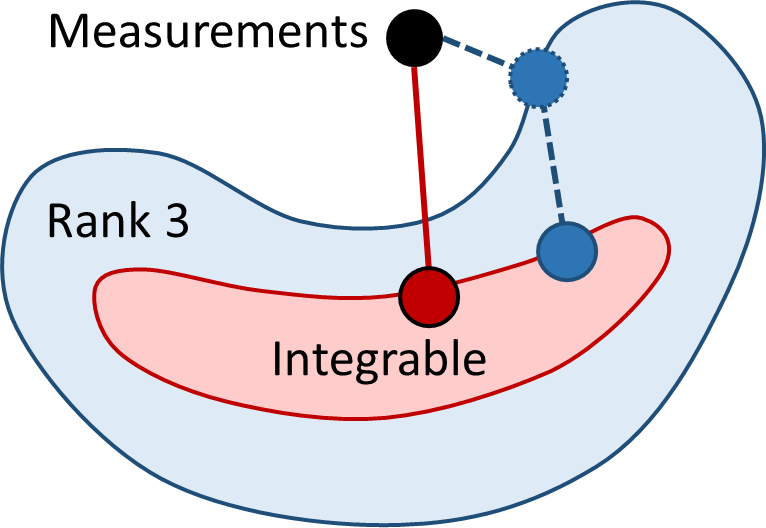

Solving Uncalibrated Photometric Stereo Using Fewer Images by Jointly Optimizing Low-rank Matrix Completion and Integrability We solve uncalibrated Photometric Stereo using as few as 4-6 images by formulating it as a rank-constrained non-linear optimization solved with ADMM. |

|

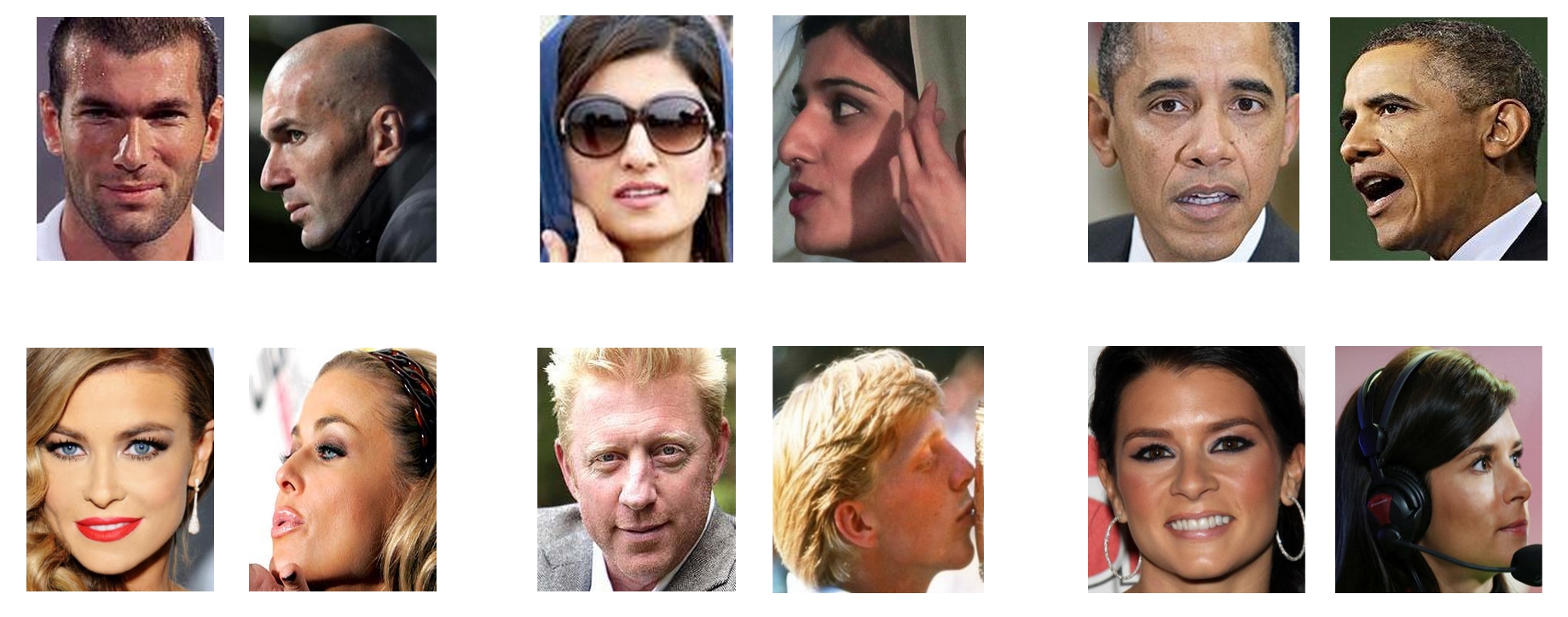

Frontal to Profile Face Verification in the Wild We introduce CFP, a dataset for frontal vs. profile face verification in the wild. We show that SOTA face verification algorithms degrade by about 10% on frontal-profile verification compared to frontal-frontal. Our dataset has been widely used to improve face verification across poses, as well as for face warping and pose synthesis with GANs.

A Frequency Domain Approach to Silhouette Based Gait Recognition |

Thesis

Constraints and Priors for Inverse Rendering from Limited Observations

Roni Sengupta

Doctoral Thesis, University of Maryland, January 2019

[pdf]