
Head Typing As a Literacy Learning Tool

Jason Repko

ABSTRACT

The goal of head typing is to provide an alternative method of entering text for individuals who are unable to do so with conventional devices such as the keyboard or mouse. The approach presented here uses the CAMSHIFT algorithm [2] to track the head movements of the user and provides feedback via a graphical user interface. Recent research has focused on the speed and accuracy of the input [3]. This system provides a flexible and inexpensive method for practicing writing that might not otherwise be available to individuals with severe and multiple mental and physical impairments. It is intended to be used as a tool for educators to promote literacy learning in the severely disabled.

INTRODUCTION

Teaching literacy skills can pose a challenge to educators when the student is unable to use conventional tools of composition due to a lack of fine motor skills accompanied by, or resulting from, a cognitive disability. Many of these students are not provided with a satisfactory literacy education because they lack the ability to write and type without substantial assistance. Furthermore, these individuals may be severely disabled or undereducated, and as a result are unable to use augmentative communication devices. Despite advancements in assistive technology, very little has been done to address the needs of this segment of the population.

Tasks such as composing a word by selecting letters from a display could be facilitated by head or gaze tracking. With the help of an instructor, students who are unable to use a keyboard or conventional writing instruments can be taught the alphabet as well as word and sentence composition. A tool designed for these individuals needs to be flexible enough to account for their lack of fine motor control and for uncontrolled movements that may result from the disability. A number of systems, both commercial and non-commercial, track a user's head, torso, or eye movement and use this information to guide selection on a display [3]. Determining selection based on dwell time is often an issue in these systems. Most of them are designed for individuals who may be mobility impaired but have normal cognitive abilities, so cognitive disabilities are often not taken into consideration when these design issues are addressed. The goal of this system is to provide a flexible head-typer that accommodates the needs of students with cognitive disabilities as well as mobility impairments. Consequently, the user interface is driven by large motor skills such as head and neck movements. The selection mechanism is tolerant of spastic movements and requires minimal muscular coordination. Selection is also facilitated by clear visual feedback and by manual keyboard operation that can be performed by the teacher.

Because of the small number of users, this tool was designed to be inexpensive as compared to specialized head trackers. The flexible head-typer is designed to work with low resolution commercially available web cameras used on low-end desktop computers.

HEAD TYPING

The two basic components of the system are a head tracker and a display of letters and punctuation.

Head Tracker

The head tracker was implemented using the Continuously Adaptive Mean Shift algorithm (CAMSHIFT) [2]. CAMSHIFT is a color vision algorithm that tracks the head based on flesh-colored pixels. It was chosen because it is fast and tolerant of low-resolution webcams, so the system can be used with low-end PCs and webcams. The tracker is also tolerant of image noise and hand movements. In addition, the CAMSHIFT tracker can find the face again after the user occludes the face or moves his or her head out of the camera's view. Because the end users have cognitive disabilities, these scenarios need to be handled.

The meanshift algorithm assigns probabilities to flesh colored image data, working over an HSV color scale. CAMSHIFT is a modification of the meanshift algorithm that dynamically adapts to the changing color distribution. For a detailed discussion of the CAMSHIFT algorithm see [1].
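The core mean shift step can be illustrated with a minimal sketch. It assumes the flesh-color probabilities have already been computed and are supplied as a plain 2D list; the function name `mean_shift` and the window format `(x, y, w, h)` are hypothetical and are not taken from the tracker used in this system. The sketch repeatedly moves a fixed-size search window to the centroid of the probability mass beneath it. CAMSHIFT as described in [1] additionally resizes the window as it tracks, which is omitted here.

```python
def mean_shift(prob, window, max_iter=20):
    """Move a fixed-size window to the centroid of the probability mass.

    prob   -- 2D list (rows of columns) of flesh-color probabilities
    window -- (x, y, w, h) with (x, y) the top-left corner (hypothetical format)
    """
    x, y, w, h = window
    rows, cols = len(prob), len(prob[0])
    for _ in range(max_iter):
        m00 = m10 = m01 = 0.0  # zeroth and first image moments
        for r in range(max(0, y), min(rows, y + h)):
            for c in range(max(0, x), min(cols, x + w)):
                p = prob[r][c]
                m00 += p
                m10 += c * p
                m01 += r * p
        if m00 == 0:
            break  # no probability mass under the window; stay put
        cx, cy = m10 / m00, m01 / m00
        # Re-center the window on the centroid.
        new_x = int(round(cx - (w - 1) / 2))
        new_y = int(round(cy - (h - 1) / 2))
        if new_x == x and new_y == y:
            break  # converged
        x, y = new_x, new_y
    return x, y, w, h
```

Starting from a window that only partly overlaps a bright region, the window drifts over a few iterations until it is centered on that region.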

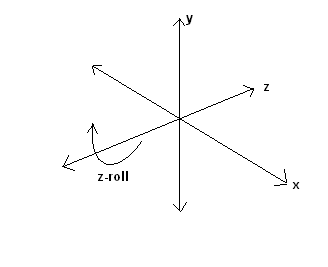

Figure 1: Movement in these directions can be tracked using the CAMSHIFT tracker.

The CAMSHIFT implementation used in this system is the CAMSHIFT tracker design by Francois [2], which is based on Intel's OpenCV library. The degrees of freedom tracked by CAMSHIFT are shown in Figure 1. In this system, the only tracked movement is along the x-axis.

User Interface

The user interface was designed to be tolerant of uncoordinated movement. The system is intended to be used by a student under the supervision of a teacher. The teacher can direct the student to select letters, spell words, or create sentences. If the student makes an incorrect selection, the teacher can override it using the keyboard.

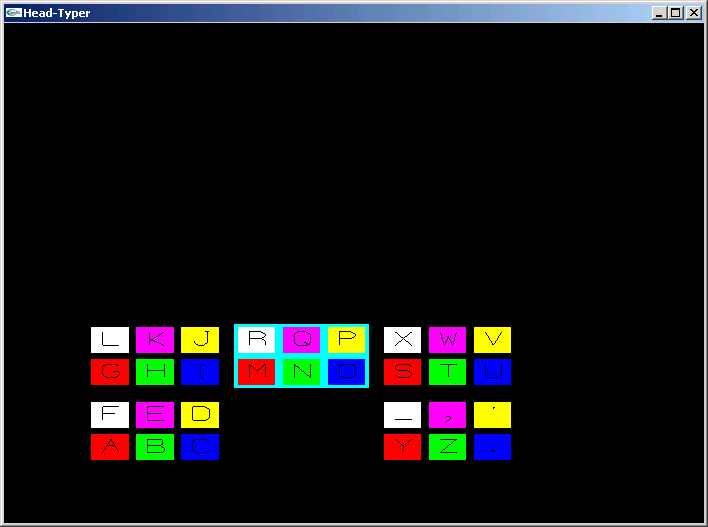

Figure 2: (1) User centers his/her head within the window. (2) Tracking begins on a keypress.

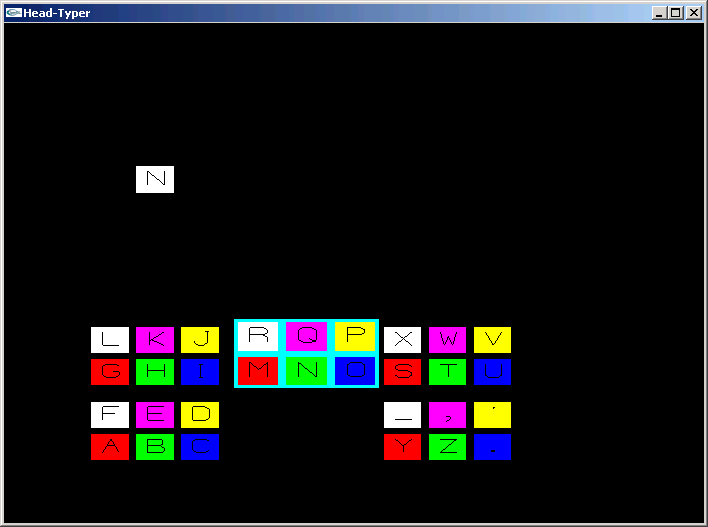

To initialize the system, the student's head must be centered in a white box (Figure 2). Tracking is then started, and the student is presented with a board of letters and punctuation (Figure 3). The letters and punctuation are organized into six groups of five elements each. The highlighted group is indicated by a selection box that appears around the border of the group container. The user changes which group is highlighted by tilting his or her head to the left or right. Alternatively, any motion that moves the vertical axis of the tracked head away from the vertical position, such as tilting one's torso, will change the highlighted group. The selection indicator moves through the groups clockwise for a right tilt and counter-clockwise for a left tilt. Selection is made hierarchically by first selecting one of the six groups and then choosing one of the five letters within that group.
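The hierarchical selection described above can be sketched as a small state machine. This is an illustration only: the symbol layout, the event names (`"left"`, `"right"`, `"select"`), the function names, and the choice to return to the first group after a letter is typed are all assumptions, not details of the actual system.

```python
import string

# Hypothetical layout: 26 letters plus four punctuation marks,
# split into six groups of five elements each.
SYMBOLS = list(string.ascii_lowercase) + [" ", ".", ",", "?"]
GROUPS = [SYMBOLS[i:i + 5] for i in range(0, 30, 5)]


def step(index, tilt, count):
    """Advance clockwise on a right tilt, counter-clockwise on a left tilt."""
    if tilt == "right":
        return (index + 1) % count
    if tilt == "left":
        return (index - 1) % count
    return index


def type_text(events):
    """Replay a sequence of "left", "right", and "select" events.

    A "select" at the group level enters the highlighted group; a "select"
    at the letter level types the highlighted letter and (by assumption)
    returns the highlight to the first group.
    """
    text = []
    group = None  # None means the user is still choosing a group
    index = 0
    for event in events:
        if event == "select":
            if group is None:
                group, index = index, 0
            else:
                text.append(GROUPS[group][index])
                group, index = None, 0
        else:
            count = len(GROUPS) if group is None else len(GROUPS[group])
            index = step(index, event, count)
    return "".join(text)
```

With this hypothetical layout, one right tilt and a selection enter the second group (f to j), and two further right tilts followed by a selection type "h".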


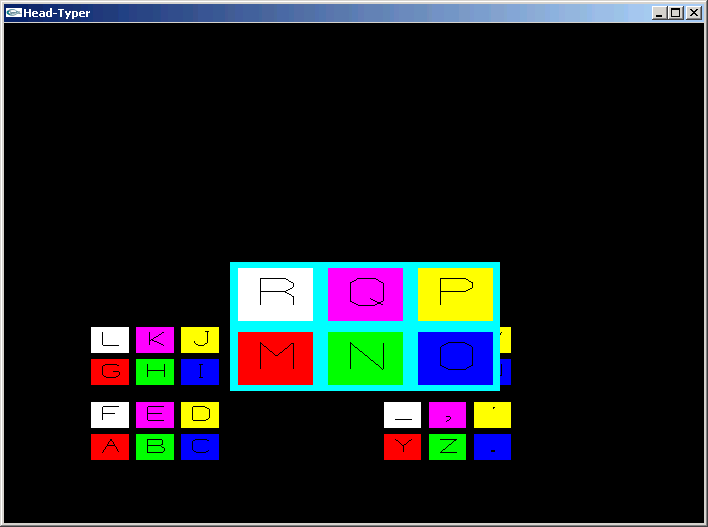

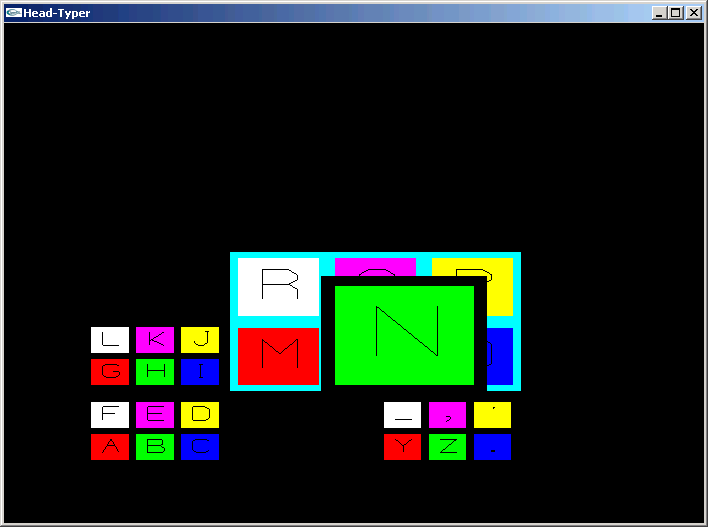

Figure 3: (1) A group of letters is highlighted. (2) The current group begins to increase in size. Once a group reaches a certain size it becomes selected, and (3) the process repeats itself for a given letter. (4) Selected letters appear in the space above the board.

Selection of a group is determined by dwell time. The user dwells on a specific group by not changing the currently highlighted group. After a fixed period of time the currently highlighted group becomes selected. Once the group is selected, the user is able to cycle through the letters within that group. The selection of a letter in the group is completed in the same manner. The remaining time before the currently highlighted group becomes a selection is indicated by the size of that group.

Majaranta et al. [3] have shown that motion can be used to guide the user's attention to the current selection and is useful as a progress meter of dwell time. A similar technique is used in this system. When a group is highlighted, the group box grows until it fills the entire selection window, indicating the time remaining until the highlighted group becomes the current selection. The user can deselect the highlighted group at any point before the group box occupies the entire selection area. Once the group is deselected, its box immediately shrinks to normal size and the next group box begins to enlarge. The selection time can be preset to a desired interval.
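A minimal sketch of dwell-based selection with a growing box as the progress meter is given below. The class name `DwellTimer`, the function `box_scale`, the default dwell time of two seconds, and the scale factors are hypothetical values chosen for illustration. The timer resets whenever the highlight moves, and the box scale is interpolated linearly from its normal size to the full selection area.

```python
class DwellTimer:
    """Fires a selection after the highlight has stayed put for dwell_time seconds."""

    def __init__(self, dwell_time=2.0):
        self.dwell_time = dwell_time  # preset selection interval (assumed default)
        self.elapsed = 0.0

    def update(self, dt, moved):
        """Advance by dt seconds; return True when the selection fires."""
        if moved:
            self.elapsed = 0.0  # deselect: progress starts over
            return False
        self.elapsed += dt
        if self.elapsed >= self.dwell_time:
            self.elapsed = 0.0
            return True
        return False

    def progress(self):
        """Fraction of the dwell time that has elapsed, from 0.0 to 1.0."""
        return min(self.elapsed / self.dwell_time, 1.0)


def box_scale(progress, normal=1.0, full=3.0):
    """Scale factor for the highlighted box: normal size at 0, full area at 1."""
    return normal + (full - normal) * progress
```

Calling `update` once per frame with the frame time and a flag for whether the highlight changed keeps the visual feedback and the selection decision driven by the same elapsed time.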

GUIDE

Source code (code.zip) - Requires Microsoft Platform SDK for Windows XP SP2 and the OpenCV libraries.

Executables (exes.zip) - Compiled for Windows XP.

Keyboard commands

F3 - Starts the tracker

d - Deletes the previously selected letter

1 - Decreases dwell time (selection becomes faster)

2 - Increases dwell time (selection becomes slower)

p - Pause

b - Toggles between bi-directional and single-directional input. The default is bi-directional. If the single-directional mode is chosen then the change in selection will only take place if the user moves to his/her left.
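The effect of these commands on the typer's settings can be sketched as follows. The sketch is not the distributed source code: the names `TyperState`, `handle_key`, and `accepts_tilt`, the step size for dwell-time changes, the minimum dwell time, and the treatment of `p` as a toggle are assumptions. F3 is omitted because starting the tracker depends on the camera.

```python
from dataclasses import dataclass, field

DWELL_STEP = 0.25  # hypothetical step, in seconds, for keys 1 and 2
MIN_DWELL = 0.25   # hypothetical lower bound on the dwell time


@dataclass
class TyperState:
    dwell_time: float = 2.0
    bidirectional: bool = True  # the default mode is bi-directional
    paused: bool = False
    text: list = field(default_factory=list)


def handle_key(state, key):
    """Apply one keyboard command to the state and return it."""
    if key == "d":
        if state.text:
            state.text.pop()  # delete the previously selected letter
    elif key == "1":
        state.dwell_time = max(MIN_DWELL, state.dwell_time - DWELL_STEP)
    elif key == "2":
        state.dwell_time += DWELL_STEP
    elif key == "p":
        state.paused = not state.paused  # assumed to toggle
    elif key == "b":
        state.bidirectional = not state.bidirectional
    return state


def accepts_tilt(state, tilt):
    """In single-directional mode only leftward movement changes the selection."""
    if state.paused:
        return False
    return state.bidirectional or tilt == "left"
```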

REFERENCES

[1] Bradski, G. R. Computer Vision Face Tracking For Use in a Perceptual User Interface. Intel Technology Journal. 1998 Q2 p. 15.

[2] Francois, A. CAMSHIFT Tracker Design Experiments with Intel OpenCV and SAI, IRIS Technical Report IRIS-04-423, University of Southern California, Los Angeles, July 2004.

[3] Majaranta, P., MacKenzie, I. S., & Raiha, K.-J. Using motion to guide the focus of gaze during eye typing. 12th European Conference on Eye Movements (ECEM12), Dundee, Scotland, August 2003.