Median filter-based hole filling strategy for RGBD images

This project was originally done as a BTech project under the supervision of Prof. Shanmuganathan Raman. I would also like to acknowledge the help and efforts of Adit Ravi (IITM) in writing a paper describing this method.

1.1 Abstract

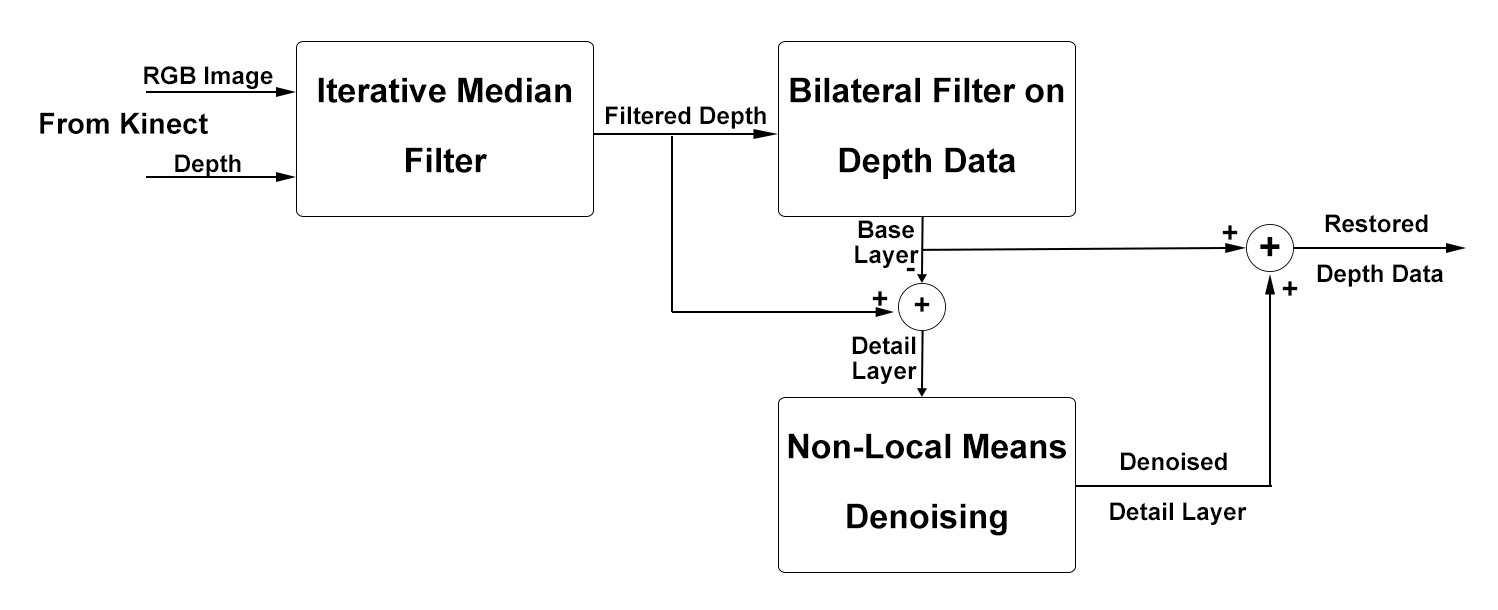

In this project, we present a novel iterative median filter-based strategy to improve the quality of the depth maps provided by sensors such as Microsoft Kinect. The depth map is improved in two respects: by suppressing random noise and by filling the holes present in the maps. The holes are filled by iteratively applying a median filter-based strategy that also takes the RGB components into account. Color similarity is measured as the absolute difference between the neighbourhood pixels and the median value. The hole-filled depth map is then refined by applying a bilateral filter and processing the resulting detail layer separately. A non-local denoising technique is applied to the detail layer, which is finally combined with the base layer to obtain a sharp, accurate depth map. We show that the proposed approach generates high-quality depth maps, which can improve the performance of various Microsoft Kinect applications such as pose recognition and skeletal and facial tracking.
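The color-similarity measure described above can be read in more than one way. One reading, sketched below in Python, compares each neighbourhood pixel's RGB value with the per-channel median color of the window using an L1 distance (sum of absolute differences) and keeps the pixels that fall within a threshold. The function names and the threshold `tau` are hypothetical illustrations, not taken from the paper.

```python
from statistics import median

def median_color(colors):
    """Per-channel median of a list of (R, G, B) tuples."""
    return tuple(median(c[k] for c in colors) for k in range(3))

def color_distance(c1, c2):
    """L1 distance: sum of absolute per-channel differences."""
    return sum(abs(a - b) for a, b in zip(c1, c2))

def similar_to_median(colors, tau):
    """Flag each neighbourhood pixel whose color lies within tau of the median color.

    Assumption: tau is a hypothetical threshold, not a value from the paper.
    """
    ref = median_color(colors)
    return [color_distance(c, ref) <= tau for c in colors]

# Three reddish pixels and two bluish ones in a 5-pixel neighbourhood.
window = [(200, 30, 20), (190, 35, 25), (205, 28, 22), (20, 40, 180), (25, 45, 190)]
print(similar_to_median(window, tau=30))  # [True, True, True, False, False]
```

Because the median color follows the majority of the window, the two bluish pixels are flagged as dissimilar and would be excluded when the depth median is taken.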

1.2 General overview

The figure below summarizes the steps of the method. The median filter-based hole filling step is the key novel step and is what produces accurate depth maps. The post-processing step, which splits the filtered depth map into a base layer and a detail layer, then suppresses the remaining noise.

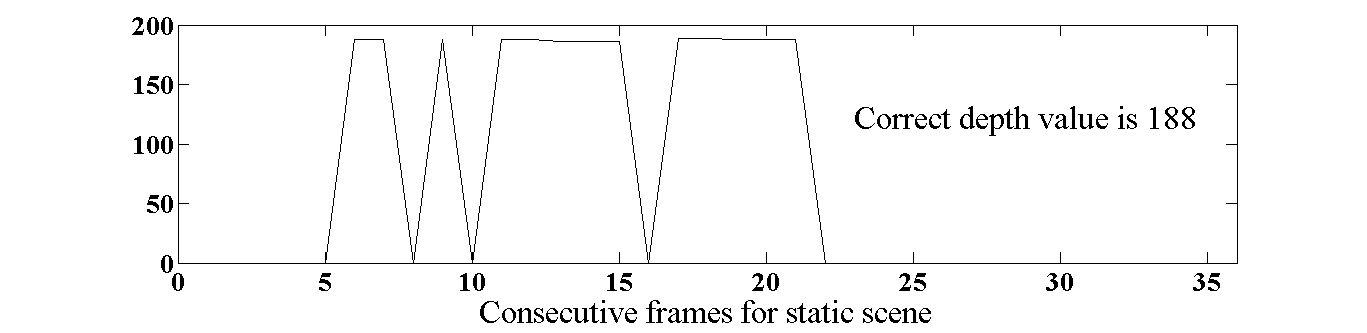
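The base/detail post-processing can be illustrated with a small Python sketch. It computes the base layer with a plain bilateral filter, takes the detail layer as the residual (depth minus base), cleans the detail, and adds it back to the base. The non-local denoising used in the project is replaced here by simple soft thresholding (`shrink`) purely as a hypothetical stand-in, and all parameter values are illustrative rather than the paper's.

```python
import math

def bilateral_filter(depth, radius=1, sigma_s=1.0, sigma_r=10.0):
    """Edge-preserving smoothing of a 2-D depth map given as a list of lists."""
    h, w = len(depth), len(depth[0])
    base = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            num = den = 0.0
            for y in range(max(0, i - radius), min(h, i + radius + 1)):
                for x in range(max(0, j - radius), min(w, j + radius + 1)):
                    # Spatial weight: falls off with pixel distance.
                    ws = math.exp(-((y - i) ** 2 + (x - j) ** 2) / (2 * sigma_s ** 2))
                    # Range weight: falls off with depth difference, so edges survive.
                    wr = math.exp(-((depth[y][x] - depth[i][j]) ** 2) / (2 * sigma_r ** 2))
                    num += ws * wr * depth[y][x]
                    den += ws * wr
            base[i][j] = num / den  # den > 0: the center pixel always has weight 1
    return base

def shrink(v, t):
    """Soft-threshold v by t. Hypothetical stand-in, NOT the non-local denoiser."""
    return math.copysign(max(abs(v) - t, 0.0), v)

def refine_depth(depth, t=2.0, **bilateral_kw):
    """Split into base + detail, denoise the detail, and recombine."""
    base = bilateral_filter(depth, **bilateral_kw)
    detail = [[d - b for d, b in zip(drow, brow)] for drow, brow in zip(depth, base)]
    detail_clean = [[shrink(v, t) for v in row] for row in detail]
    refined = [[b + c for b, c in zip(brow, crow)] for brow, crow in zip(base, detail_clean)]
    return refined, base, detail

# A depth step edge: the bilateral base layer keeps the 100 -> 200 jump sharp.
step = [[100, 100, 200, 200] for _ in range(3)]
refined, base, detail = refine_depth(step)
```

Splitting off the detail layer lets the denoiser act on small residual fluctuations only, while large depth discontinuities stay in the base layer and are not blurred.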

1.3 Iterative hole filling step

This step uses a modified version of the median filter to incorporate the cues provided by the RGB image. It uses the RGB image as a guide to restrict the region around the center pixel to similarly colored pixels, and then takes the median of their depth values. In essence, the idea is close to the joint bilateral filter, but it uses a median filter instead of a bilateral filter; the median filter is very effective against shot noise and temporal inconsistencies.

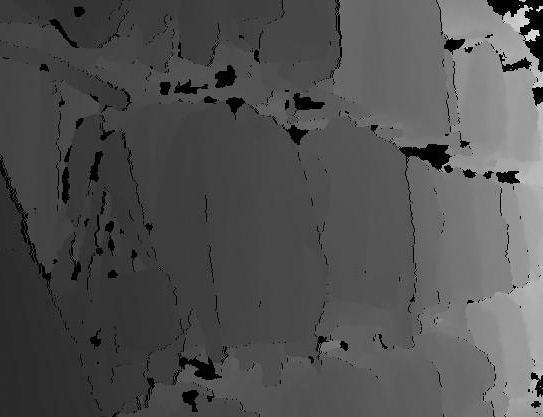

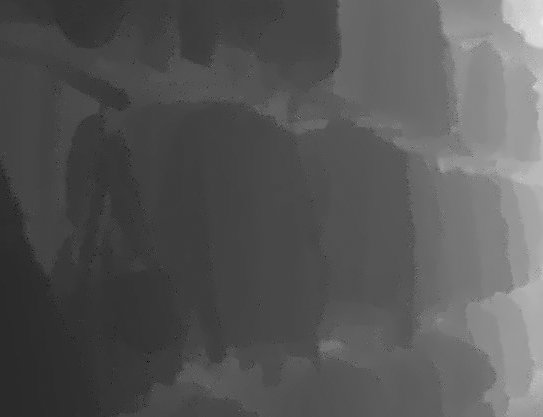
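The iterative hole filling step can be sketched in Python as follows. This is a minimal illustration under assumptions that are not from the paper: holes are encoded as depth 0 (a common convention for missing Kinect depth), a neighbour counts as similar when the L1 distance between its RGB value and the hole pixel's RGB value is at most a hypothetical threshold `tau`, and all updates of one pass are applied together before the next pass. Holes deep inside a missing region have no valid neighbours at first, so they are filled in later iterations as the filled area grows inward.

```python
from statistics import median

HOLE = 0  # assumption: missing depth is stored as 0

def color_distance(c1, c2):
    """L1 distance between two (R, G, B) tuples."""
    return sum(abs(a - b) for a, b in zip(c1, c2))

def fill_holes(depth, rgb, radius=1, tau=30, max_iters=50):
    """Iteratively fill holes with the median depth of similarly colored neighbours.

    radius, tau and max_iters are hypothetical parameters, not the paper's values.
    """
    h, w = len(depth), len(depth[0])
    d = [row[:] for row in depth]  # work on a copy; the input is left untouched
    for _ in range(max_iters):
        updates = {}
        for i in range(h):
            for j in range(w):
                if d[i][j] != HOLE:
                    continue
                # RGB guidance: keep valid neighbours whose color is close to the hole pixel's.
                candidates = [
                    d[y][x]
                    for y in range(max(0, i - radius), min(h, i + radius + 1))
                    for x in range(max(0, j - radius), min(w, j + radius + 1))
                    if d[y][x] != HOLE and color_distance(rgb[y][x], rgb[i][j]) <= tau
                ]
                if candidates:
                    updates[(i, j)] = median(candidates)
        if not updates:  # nothing more can be filled
            break
        for (i, j), value in updates.items():  # apply the whole pass at once
            d[i][j] = value
    return d

RED, BLUE = (200, 30, 20), (20, 40, 180)
rgb = [[RED, RED, BLUE, BLUE] for _ in range(3)]
depth = [[100, 0, 200, 200],
         [100, 100, 0, 200],
         [100, 0, 0, 200]]
# Each hole takes the depth of its own color region: 100 on the red side, 200 on the blue side.
filled = fill_holes(depth, rgb)
```

Applying all updates of a pass at once keeps the result independent of the scan order; updating in place would fill holes faster, but values filled early in a pass would then influence later holes in the same pass.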

1.4 Results

This project was submitted as a BTech project at IITGN, and a paper describing it was also written, using the NYU RGBD dataset. Adit Ravi, my collaborator, helped us create our own dataset by capturing RGBD images. He also contributed substantially to writing and revising the paper and its pseudocode. The paper was published at NCC'15.