Major Projects

Domain Transform Solver

CVPR 2019 code and paper

Rolling Shutter and Radial Distortion are Features for High Frame-Rate Multi-Camera Tracking

CVPR 2018

[paper] [supp] [poster]

Rolling Shutter Tracker

Best paper award at ISMAR 2016 (ISMAR-TVCG paper)

[talk PDF] [talk pptx] [paper]

This project is motivated by the latency requirements of augmented reality (AR) and virtual reality (VR) systems. An AR/VR system has three subsystems:

- Tracking

- Rendering

- Display

In this project we target the tracking subsystem. Our goal is to create a high-frequency, camera-based tracker.

Physics capture: Estimation of the coefficient of restitution for multiple surfaces

This project was done as a continuation of this. The major difference is that it uses only computer vision techniques. It was also done for Dr. Jan-Michael Frahm's Recent Advances in Image Analysis course.

Case of the bouncing ball

Here we consider a proof-of-concept scenario in which a tennis ball is thrown at a table. The ball bounces multiple times on the table as well as on the ground. This interaction of the ball with the world is recorded using a Microsoft Kinect 1.0. The trajectory of the ball is estimated, and a physics model is used to estimate the coefficient of restitution with respect to the table and the ground.
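The physics model is not spelled out here, so the sketch below uses the textbook drop-test relation instead: ignoring air drag, a ball that reaches apex height h above a surface rebounds to e²h, so e = sqrt(h_{k+1}/h_k) for consecutive apexes after bounces on the same surface. The function names, the synthetic trajectory generator and the `surface` offset (for example, the table height) are illustrative assumptions. Real Kinect trajectories are noisy and would need smoothing or parabola fitting before peak picking.

```python
import math
from typing import List, Sequence


def simulate_bounces(h0: float, e: float, g: float = 9.81,
                     dt: float = 1e-4, t_end: float = 2.5) -> List[float]:
    """Synthetic (hypothetical) height samples: ball dropped from rest at h0 onto z = 0."""
    z, v, samples = h0, 0.0, []
    for _ in range(int(t_end / dt)):
        samples.append(z)
        v -= g * dt          # semi-implicit Euler: update velocity first
        z += v * dt
        if z < 0.0:          # crossed the surface: reflect and scale speed by e
            z, v = -z * e, -v * e
    return samples


def apex_heights(z: Sequence[float]) -> List[float]:
    """Local maxima of a sampled height trajectory (one per flight between bounces)."""
    return [z[i] for i in range(1, len(z) - 1) if z[i - 1] < z[i] >= z[i + 1]]


def restitution_from_apexes(peaks: Sequence[float], surface: float = 0.0) -> float:
    """Mean of sqrt((h_{k+1} - surface) / (h_k - surface)) over consecutive apexes.

    Assumes every bounce in `peaks` happened on the same surface, no air drag.
    """
    ratios = [math.sqrt((b - surface) / (a - surface))
              for a, b in zip(peaks, peaks[1:])]
    return sum(ratios) / len(ratios)


peaks = apex_heights(simulate_bounces(h0=1.0, e=0.8))
print(restitution_from_apexes(peaks))  # close to 0.8
```

For the table-and-ground scenario, the apexes would first be split by which surface the ball hit, and the function would be applied to each group with the matching `surface` height.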

Estimation of physical properties of real-world objects

This project was done in collaboration with Rohan Chabra under the supervision of Prof. Ming Lin.

Demo

Estimating the physical properties of objects can be useful in augmented and virtual reality, including the development of advanced AR applications. These properties can be assigned to objects that were reconstructed using computer vision techniques, so that the objects become interactive in the reconstructed scene and behave in a physically correct way. Another application of this research is in robotics, where our system can predict the collisions that would result from a given motion. The system can communicate these predictions to a robot to help it understand its environment and plan its motion accordingly.

Median filter based hole filling strategy for RGBD images

[paper] This project was originally done as a BTech project under the supervision of Prof. Shanmuganathan Raman. I would also like to acknowledge the help and efforts of Adit Ravi (IITM) in writing a paper describing this method.

In this project, we present a novel iterative median-filter-based strategy to improve the quality of depth maps provided by sensors such as the Microsoft Kinect. The depth map is improved in two respects: random noise is reduced, and holes in the map are filled. The holes are filled by iteratively applying a median-filter-based strategy that also takes the RGB components into account; color similarity is measured as the absolute difference between the neighbourhood pixels and the median value. The hole-filled depth map is further improved by applying a bilateral filter and processing the detail layer separately: a non-local denoising technique is applied to the detail layer, which is finally combined with the base layer to obtain a sharp, accurate depth map. We show that the proposed approach generates high-quality depth maps, which can improve the performance of various Microsoft Kinect applications such as pose recognition and skeletal and facial tracking.
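The hole-filling step can be illustrated with a small sketch. It is one plausible reading, not the paper's exact rule. Holes are assumed to be encoded as depth 0. Each hole pixel takes the median depth of its valid neighbours whose colour lies within a hypothetical L1 tolerance `color_tol` of the hole pixel's own colour, which simplifies the median-based similarity described above. Updates are applied after each full sweep, so fills grow inward one ring per iteration. The bilateral-filter and non-local detail-layer stages are not shown, and `fill_holes_iterative_median` is an illustrative name.

```python
from statistics import median
from typing import Dict, List, Tuple

RGB = Tuple[int, int, int]


def fill_holes_iterative_median(depth: List[List[float]], rgb: List[List[RGB]],
                                radius: int = 1, color_tol: int = 30,
                                max_iters: int = 50) -> List[List[float]]:
    """Iteratively fill zero-depth pixels with a colour-guided neighbourhood median."""
    h, w = len(depth), len(depth[0])
    d = [row[:] for row in depth]                     # leave the input untouched
    for _ in range(max_iters):
        updates: Dict[Tuple[int, int], float] = {}
        for y in range(h):
            for x in range(w):
                if d[y][x] != 0:
                    continue                          # only hole pixels are filled
                cp = rgb[y][x]
                cands = []
                for dy in range(-radius, radius + 1):
                    for dx in range(-radius, radius + 1):
                        yy, xx = y + dy, x + dx
                        if 0 <= yy < h and 0 <= xx < w and d[yy][xx] != 0:
                            # keep neighbours whose colour is close to the hole pixel's
                            diff = sum(abs(a - b) for a, b in zip(cp, rgb[yy][xx]))
                            if diff <= color_tol:
                                cands.append(d[yy][xx])
                if cands:
                    updates[(y, x)] = median(cands)
        if not updates:                               # nothing more can be filled
            break
        for (y, x), value in updates.items():         # apply after the full sweep
            d[y][x] = value
    return d
```

Because only similarly coloured neighbours vote, a hole on an object boundary takes the depth of the object it visually belongs to rather than a blend of both sides.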


This project was submitted as a BTech project at IITGN, and a paper was also written using the NYU RGBD dataset. Adit Ravi, my collaborator, helped us create our own dataset by capturing RGBD images. He also contributed substantially to writing and revising the paper contents and the pseudocode. The paper was published at NCC'15.

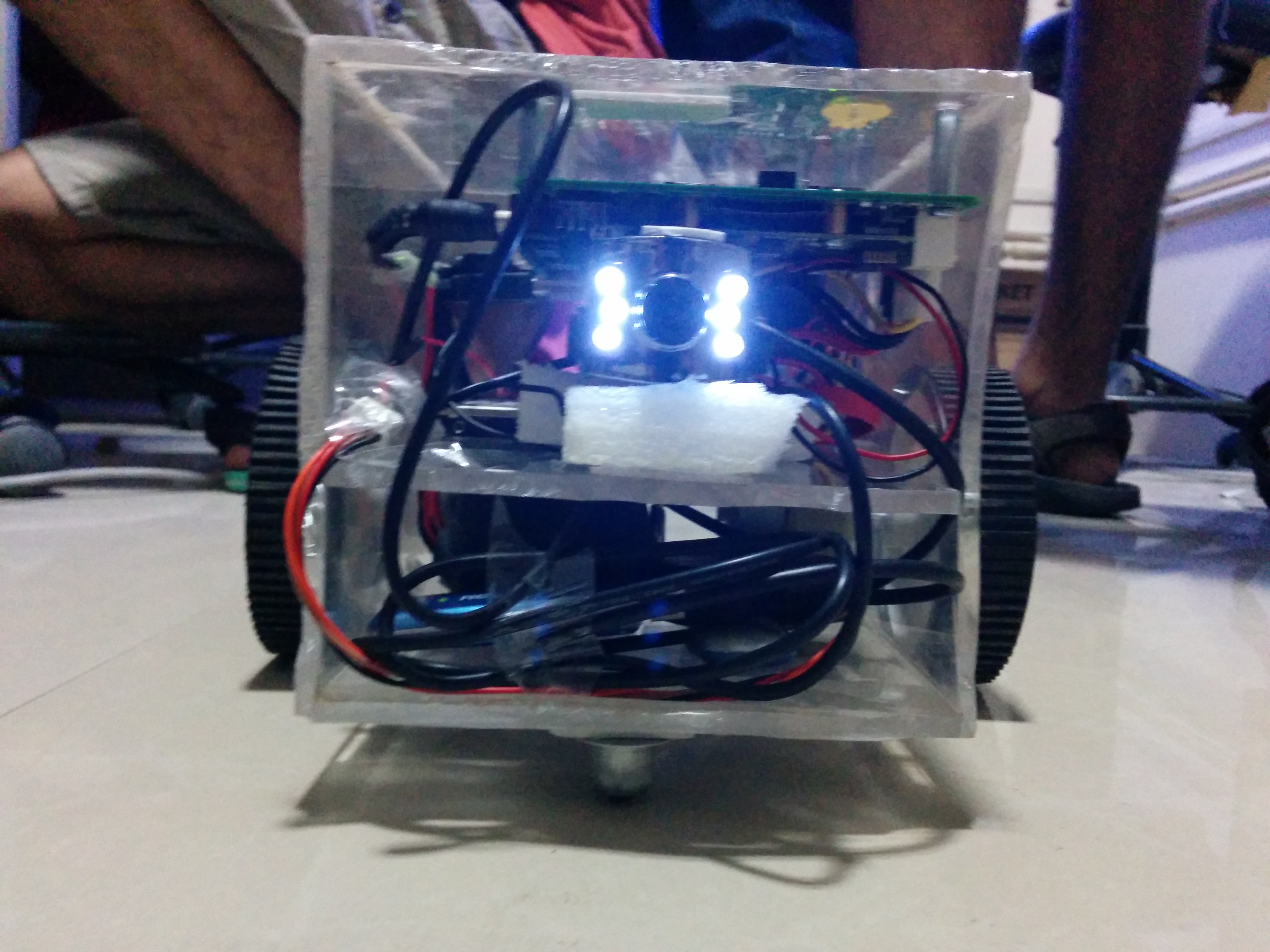

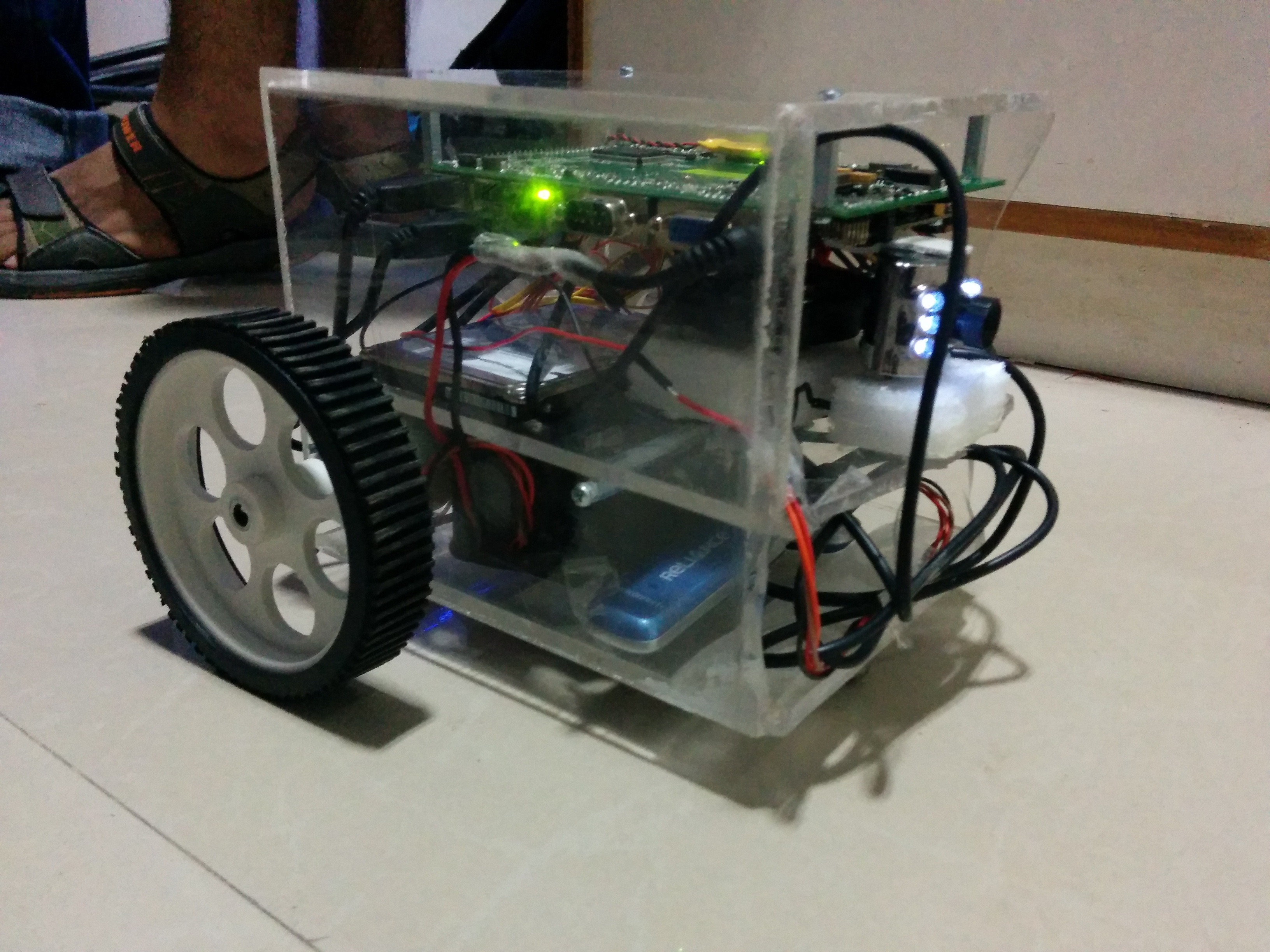

Fire rescue robot based on Intel Atom E6xx

This project was done under the guidance of Prof. Joycee Mekie and Prof. Gaonkar. I was part of a team of six people and was responsible for image processing algorithms.

Other team members: Smit Soni, Shashank Tyagi, Nitesh Udhani, Manognya Parimi and Yash Goyal.

I would also like to acknowledge Nitheesh KL and others from Intel for providing valuable programs that helped kick-start the project.

The goal of the project was to build a remote-controlled fire rescue robot using the Intel Atom E6xx processor. The robot carried a camera and a wireless modem, which were used to control it remotely from a real-time video feed. The robot also detected fire in the scene and warned the (human) controller. The project used the TCP/IP protocol and OpenCV to implement the remote link and the vision processing, and used custom functions to control the motors through the general-purpose I/O (GPIO) pins on the Intel Atom board.
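TCP delivers a byte stream with no message boundaries, so a link that carries video frames and control commands needs some form of framing. The project's actual wire format is not documented. The sketch below assumes a hypothetical 4-byte big-endian length prefix in front of each payload. `send_frame` and `recv_frame` are illustrative names.

```python
import socket
import struct

# Hypothetical framing: 4-byte unsigned big-endian length, then the payload bytes.
HEADER = struct.Struct("!I")


def send_frame(sock: socket.socket, payload: bytes) -> None:
    """Send one length-prefixed message (e.g. an encoded video frame or a command)."""
    sock.sendall(HEADER.pack(len(payload)) + payload)


def _recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes; recv() may return fewer bytes than requested."""
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("socket closed in the middle of a message")
        buf.extend(chunk)
    return bytes(buf)


def recv_frame(sock: socket.socket) -> bytes:
    """Receive one length-prefixed message."""
    (length,) = HEADER.unpack(_recv_exact(sock, HEADER.size))
    return _recv_exact(sock, length)


# Local demo over a connected socket pair instead of the robot's network link.
robot, station = socket.socketpair()
send_frame(robot, b"<jpeg bytes>")
print(recv_frame(station))
```

On a real TCP connection, low-latency links commonly also set `socket.TCP_NODELAY` to disable Nagle's algorithm. Frames could be compressed before sending, for example with OpenCV's `cv2.imencode(".jpg", frame)`, but that choice is an assumption, not something the project describes.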


My primary responsibility was to deploy the fire-detection algorithm and get the video feed working. Another challenge was doing all this in real time, so that there was no lag between the control signals and the video feed.
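The fire-detection algorithm itself is not described. As a stand-in, the sketch below uses a common colour-rule heuristic. A pixel is flagged as fire-coloured when its red channel is bright and R ≥ G > B. A warning is raised when the fraction of flagged pixels in a frame reaches a threshold. `r_thresh` and `min_fraction` are hypothetical values that have not been tuned for the robot's camera.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0..255


def is_fire_pixel(p: Pixel, r_thresh: int = 190) -> bool:
    """Colour rule: bright red channel and red >= green > blue."""
    r, g, b = p
    return r > r_thresh and r >= g > b


def fire_fraction(frame: List[List[Pixel]]) -> float:
    """Fraction of pixels in the frame that pass the colour rule."""
    total = hits = 0
    for row in frame:
        for p in row:
            total += 1
            hits += is_fire_pixel(p)
    return hits / total if total else 0.0


def should_warn(frame: List[List[Pixel]], min_fraction: float = 0.01) -> bool:
    """Raise a warning for the operator when enough of the frame looks like fire."""
    return fire_fraction(frame) >= min_fraction


frame = [[(250, 160, 40), (30, 30, 30)],
         [(30, 30, 30), (30, 30, 30)]]
print(fire_fraction(frame), should_warn(frame))  # 0.25 True
```

OpenCV delivers frames in BGR channel order, so the channels would need to be swapped (for example with `cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)`) before applying this rule. For real-time use, the per-pixel loop would normally be vectorised with NumPy.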

As this was the first project at IITGN to use the Intel Atom E6xx (and one of the few in the world), it also served as a platform for building more complex robots and drones for later batches at IITGN.