Ryan Schubert

Computer Science Graduate Student

UNC-Chapel Hill

Email: res[AT]cs.unc.edu

Curriculum Vitae


Ryan Schubert is a graduate student in Computer Science at UNC-Chapel Hill. He is currently pursuing his Ph.D. under the supervision of Greg Welch (University of Central Florida) and Henry Fuchs (UNC-CH). He received his M.S. in Computer Science from UNC-Chapel Hill in 2008 and his B.S. in Computer Science from the University of Virginia in 2006.


Manipulating perceptual cues (such as shading and contours) and managing misperceptions caused by distortions on spatially augmented physical-virtual objects, to give them the appearance of a geometry different from that of the underlying physical surface. Specifically, this could be used to achieve synthetic animatronics: the ability to represent a sequence of physical poses making up an animation, deformation, or other geometric movement (e.g., a head nod) on a single, static physical display surface. This is done by emphasizing shape cues for the content we want users to perceive, while attempting to mask the incorrect cues that arise from the geometric discrepancy between the virtual content and the display surface when viewed from different locations. Various parts of the process can be optimized, depending on what we control and what we know about the specific use case. For example, we can optimize both the shape of the display surface and the imagery displayed on it, and we can leverage known or expected viewing positions or regions, or the expected animations we want to represent.
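The geometric discrepancy described above can be illustrated with a small sketch (not the method used in this research). It assumes a flat physical surface and a virtual shape that is a sphere sitting behind it. Imagery at each physical point is rendered with the appearance of the virtual point seen through it from one design viewpoint, so the cue is exactly right from that viewpoint and misplaced, by a measurable angle, from anywhere else. The scene values and the function names `ray_sphere`, `virtual_point_behind`, and `angular_error_deg` are hypothetical.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def ray_sphere(origin: Vec3, direction: Vec3, center: Vec3, radius: float) -> Optional[float]:
    """Smallest t >= 0 where origin + t * direction lies on the sphere, or None."""
    oc = sub(origin, center)
    a = dot(direction, direction)
    b = 2.0 * dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - 4.0 * a * c
    if disc < 0.0:
        return None
    root = math.sqrt(disc)
    for t in ((-b - root) / (2.0 * a), (-b + root) / (2.0 * a)):
        if t >= 0.0:
            return t
    return None


def virtual_point_behind(p: Vec3, design_eye: Vec3, center: Vec3, radius: float) -> Optional[Vec3]:
    """Virtual (sphere) point seen through physical point p from design_eye.

    Imagery at p is rendered with this point's appearance, so the illusion is
    geometrically exact only when viewed from design_eye.
    """
    d = sub(p, design_eye)
    t = ray_sphere(design_eye, d, center, radius)
    if t is None:
        return None
    return (design_eye[0] + t * d[0], design_eye[1] + t * d[1], design_eye[2] + t * d[2])


def angular_error_deg(p: Vec3, v: Vec3, eye: Vec3) -> float:
    """Angle at eye between where the cue is drawn (p) and where it belongs (v)."""
    a, b = sub(p, eye), sub(v, eye)
    cos_angle = dot(a, b) / math.sqrt(dot(a, a) * dot(b, b))
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))


# Hypothetical scene: physical surface is the plane z = 0, virtual "head" is a sphere behind it.
P = (0.3, 0.0, 0.0)
CENTER, RADIUS = (0.0, 0.0, -0.5), 0.5
DESIGN_EYE = (0.0, 0.0, 2.0)

V = virtual_point_behind(P, DESIGN_EYE, CENTER, RADIUS)
print(angular_error_deg(P, V, DESIGN_EYE))       # near zero: correct from the design viewpoint
print(angular_error_deg(P, V, (1.0, 0.0, 2.0)))  # positive: misplaced cue from another viewpoint
```

Shrinking this error over a range of expected viewpoints, by changing the surface shape, the imagery, or both, is one way to read the optimization described above.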

Schubert, R., Welch, G., Daher, S., & Raij, A. (2016). HuSIS: A Dedicated Space for Studying Human Interactions. IEEE Computer Graphics and Applications, 36(6), 26-36.

Lee, M., Kim, K., Daher, S., Raij, A., Schubert, R., Bailenson, J., & Welch, G. (2016, March). The wobbly table: Increased social presence via subtle incidental movement of a real-virtual table. In Virtual Reality (VR), 2016 IEEE (pp. 11-17). IEEE.

Schubert, R., Welch, G., Lincoln, P., Nagendran, A., Pillat, R., & Fuchs, H. (2012, October 15-17). Advances in Shader Lamps Avatars for telepresence. In 3DTV-Conference: The True Vision - Capture, Transmission and Display of 3D Video (3DTV-CON), 2012 (pp. 1-4).

Spero, R. C., Sircar, R. K., Schubert, R., Taylor, R. M., II, Wolberg, A. S., & Superfine, R. (2011, August 17). Nanoparticle Diffusion Measures Bulk Clot Permeability. Biophysical Journal, 101(4), 943-950. ISSN 0006-3495. doi:10.1016/j.bpj.2011.06.052.

Daher, S., Kim, K., Lee, M., Bruder, G., Schubert, R., Bailenson, J., & Welch, G. F. (2017, March). Can social presence be contagious? Effects of social presence priming on interaction with Virtual Humans. In 3D User Interfaces (3DUI), 2017 IEEE Symposium on (pp. 201-202). IEEE.

Kim, K., Schubert, R., & Welch, G. (2016, September). Exploring the Impact of Environmental Effects on Social Presence with a Virtual Human. In International Conference on Intelligent Virtual Agents (pp. 470-474). Springer International Publishing.

Daher, S., Kim, K., Lee, M., Raij, A., Schubert, R., Bailenson, J., & Welch, G. (2016, March). Exploring social presence transfer in real-virtual human interaction. In Virtual Reality (VR), 2016 IEEE (pp. 165-166). IEEE.

Zheng, F., Schubert, R., & Welch, G. (2013, March). A General Approach for Closed-Loop Registration in AR. To appear in Proceedings of the IEEE Virtual Reality 2013 (VR'13).

Zheng, F., Schubert, R., & Welch, G. (2012, November). A General Approach for Closed-Loop Registration in AR. Poster, to appear in Proceedings of the 11th IEEE International Symposium on Mixed and Augmented Reality (ISMAR'12).


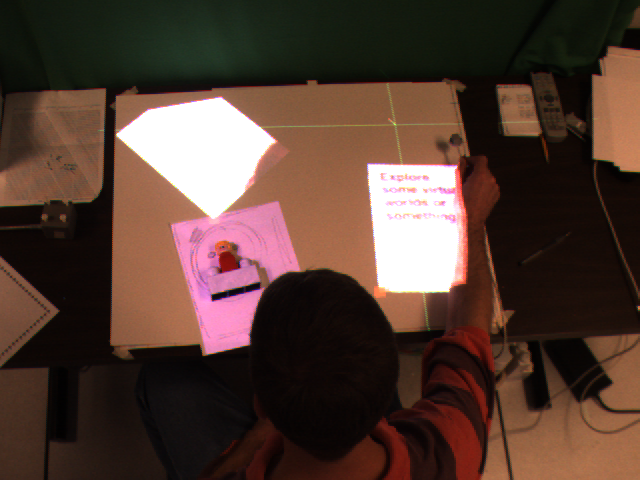

One/two-hand navigation and virtual object manipulation on a large curved desk display surface. Although not shown here, in addition to moving a "mouse pointer" on the large non-planar display by pointing, the system could also track both hands to navigate through an immersive 3D scene displayed on the surface. Common gestures, such as moving the hands closer together or farther apart to zoom out or in, were used together with a foot-operated button. See a short video showing the smooth pointing-based manipulation of windows on the curved, non-rectangular display here.
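A minimal sketch of the two-hand zoom gesture, assuming the zoom factor is the ratio of the current hand separation to the separation at the moment the foot button was pressed (the page does not say how the mapping was actually done). `TwoHandZoom` and its `update` method are hypothetical names, and hand positions are assumed to arrive from the tracker as 3D points.

```python
import math
from typing import Optional, Sequence


class TwoHandZoom:
    """Scale a 3D view by the change in distance between two tracked hands.

    The gesture is active only while a (foot) button is held; on each press
    the current hand separation becomes the new reference distance.
    """

    def __init__(self, scale: float = 1.0) -> None:
        self.scale = scale
        self._ref_dist: Optional[float] = None
        self._ref_scale = scale

    def update(self, left: Sequence[float], right: Sequence[float], button_down: bool) -> float:
        if not button_down:
            self._ref_dist = None  # gesture ended; keep the current scale
            return self.scale
        dist = math.dist(left, right)
        if self._ref_dist is None:
            # Button just pressed: remember the starting separation and scale.
            self._ref_dist = dist
            self._ref_scale = self.scale
        elif self._ref_dist > 0.0:
            # Hands farther apart -> scale grows (zoom in); closer -> zoom out.
            self.scale = self._ref_scale * dist / self._ref_dist
        return self.scale


zoom = TwoHandZoom()
zoom.update((0.0, 0.0, 0.0), (0.2, 0.0, 0.0), True)  # press: reference is 0.2 m apart
zoom.update((0.0, 0.0, 0.0), (0.4, 0.0, 0.0), True)  # hands now twice as far apart
print(round(zoom.scale, 6))  # 2.0
```

Re-anchoring the reference distance on every press lets the user "ratchet" the zoom: release the button, bring the hands back together, press again, and keep spreading them.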


Remote medical telestration with the A-desk and a finger/laser-pointer metaphor. A user sitting in front of the large, curved "analyst's desk" (A-desk) could be shown live imagery from a camera in a remote location (such as an operating room). A projector mounted near the camera in the remote location could project a virtual "laser dot" onto the physical scene where the user was currently pointing. This dot could be seen both by the user at the desk and by anyone in the remote location. In addition, the user could telestrate on the imagery using a foot-operated button, allowing them to circle, annotate, or otherwise mark up the remote physical objects.
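Placing the laser dot requires mapping the pointed-at location in the remote camera image to a projector pixel. One simple way to do this, assuming the remote scene is roughly planar and four camera/projector point correspondences were collected during a calibration step, is a 3x3 homography estimated from those four points. The page does not describe the actual calibration; the function names and calibration numbers below are made up for illustration, and a real system would more likely fit many correspondences with a robust estimator such as OpenCV's `cv2.findHomography`.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def solve_linear(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve the square system a @ x = b (Gaussian elimination, partial pivoting)."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def homography_from_4(src: Sequence[Point], dst: Sequence[Point]) -> List[List[float]]:
    """3x3 homography H (with h33 fixed to 1) mapping each src point onto its dst point."""
    a: List[List[float]] = []
    b: List[float] = []
    for (x, y), (u, v) in zip(src, dst):
        # u * (h6*x + h7*y + 1) = h0*x + h1*y + h2, rearranged to be linear in h.
        a.append([x, y, 1.0, 0.0, 0.0, 0.0, -u * x, -u * y])
        b.append(u)
        a.append([0.0, 0.0, 0.0, x, y, 1.0, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], h[6:8] + [1.0]]


def apply_homography(h: List[List[float]], p: Point) -> Point:
    """Map a point through H, including the perspective divide."""
    x, y = p
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)


# Made-up calibration: camera pixels of four projected markers -> projector pixels.
CAM = [(100.0, 100.0), (540.0, 120.0), (520.0, 400.0), (120.0, 380.0)]
PROJ = [(0.0, 0.0), (1024.0, 0.0), (1024.0, 768.0), (0.0, 768.0)]
H = homography_from_4(CAM, PROJ)

# Where the remote user points in the camera image -> where to draw the "laser dot".
dot_px = apply_homography(H, (320.0, 250.0))
print(dot_px)
```

A single homography only holds for a planar scene; for strongly non-planar remote objects, the dot would drift unless depth is taken into account.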


Video-based 3D tracking for particles in microscopy. Modern microscopes can be equipped with high-precision control of the XYZ position of the stage on which the specimen sits. By analyzing imagery from a camera attached to the microscope in real time, the Z-depth of a known object (such as a microbead embedded in a biological sample) relative to the focal plane can be estimated from the object's focus. The stage can then be driven to try to keep the object in a fixed Z plane as it moves freely within the sample in X, Y, and Z.
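The closed loop described above can be sketched as a simple proportional controller. The sketch assumes that each frame yields an estimate of the bead's offset from the focal plane (how that estimate is derived from focus is not modeled; the hypothetical `measure_offset` callback stands in for it), that moving the stage by s shifts the sample by s relative to a fixed focal plane, and that `gain` is a tuning parameter chosen for illustration. `track_bead_z` is a simulation, not the original tracking software.

```python
from typing import Callable, List


def track_bead_z(
    bead_z: List[float],
    measure_offset: Callable[[float], float],
    gain: float = 0.5,
) -> List[float]:
    """Simulate proportional stage control that keeps a bead in the focal plane.

    bead_z[k] is the bead's depth within the sample at frame k. Moving the stage
    by s shifts the sample (and the bead) by s relative to the focal plane at 0.
    Returns the true offset of the bead from the focal plane at each frame.
    """
    stage = 0.0
    offsets: List[float] = []
    for z in bead_z:
        true_offset = z + stage  # bead position relative to the focal plane
        offsets.append(true_offset)
        # Move the stage to cancel a fraction of the measured offset.
        stage -= gain * measure_offset(true_offset)
    return offsets


# A stationary bead 5 units above focus, with a perfect (identity) depth estimate.
residuals = track_bead_z([5.0] * 20, measure_offset=lambda e: e, gain=0.5)
print(residuals[:4])  # [5.0, 2.5, 1.25, 0.625]
```

With a gain of 0.5, a stationary bead's offset halves every frame; a gain below 1 trades slower convergence for less sensitivity to noisy depth estimates, while a freely moving bead leaves a residual that depends on how far it moves between frames.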


Augmented desk (project for the Exploring Virtual Worlds course at UNC). The augmented desk was a physical desk surface on which both physical and virtual documents could coexist. A virtual copy of a physical page could be made with an overhead camera, using a tracked device to specify the page corners. The virtual page was then projected onto the desk surface by a projector pointing down at it. Using the tracked device, virtual pages could be "picked up", moved and rotated, and then placed back down on the desk in a new location. See a more detailed description here.
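One way to implement "picking up" a virtual page with the tracked device, assuming the device's pose on the desk plane is available as a 2D position and heading: when the page is grabbed, store its pose relative to the device, then re-apply that offset as the device moves and rotates, so the page neither snaps to the device nor loses its rotation. `Pose2D` and `VirtualPage` are hypothetical names; the original project's implementation is not described here.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class Pose2D:
    """Position (x, y) and heading theta (radians) on the desk plane."""
    x: float
    y: float
    theta: float

    def compose(self, other: "Pose2D") -> "Pose2D":
        """Apply `other`, expressed in this pose's local frame."""
        c, s = math.cos(self.theta), math.sin(self.theta)
        return Pose2D(self.x + c * other.x - s * other.y,
                      self.y + s * other.x + c * other.y,
                      self.theta + other.theta)

    def inverse(self) -> "Pose2D":
        c, s = math.cos(self.theta), math.sin(self.theta)
        return Pose2D(-(c * self.x + s * self.y), s * self.x - c * self.y, -self.theta)


class VirtualPage:
    """A projected page that follows the tracked device while held."""

    def __init__(self, pose: Pose2D) -> None:
        self.pose = pose
        self._grab_offset: Optional[Pose2D] = None

    def pick_up(self, device: Pose2D) -> None:
        # Page pose in the device's frame, so the page keeps its relative placement.
        self._grab_offset = device.inverse().compose(self.pose)

    def follow(self, device: Pose2D) -> None:
        if self._grab_offset is not None:
            self.pose = device.compose(self._grab_offset)

    def put_down(self) -> None:
        self._grab_offset = None


page = VirtualPage(Pose2D(1.0, 0.0, 0.0))
page.pick_up(Pose2D(0.0, 0.0, 0.0))
page.follow(Pose2D(0.0, 0.0, math.pi / 2))  # rotate the device a quarter turn
print(round(page.pose.x, 6), round(page.pose.y, 6))  # 0.0 1.0
page.put_down()
```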


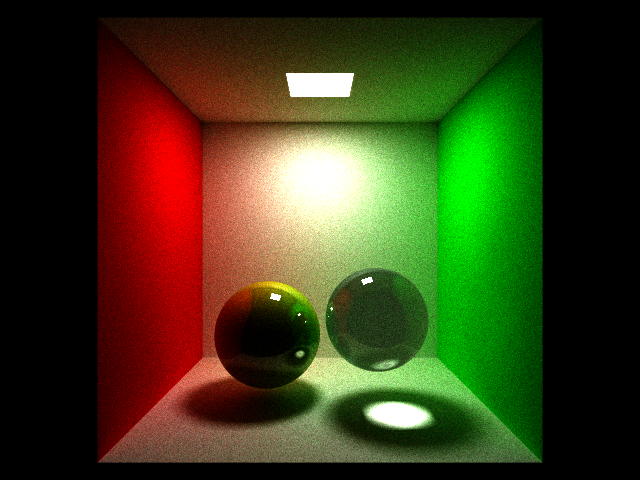

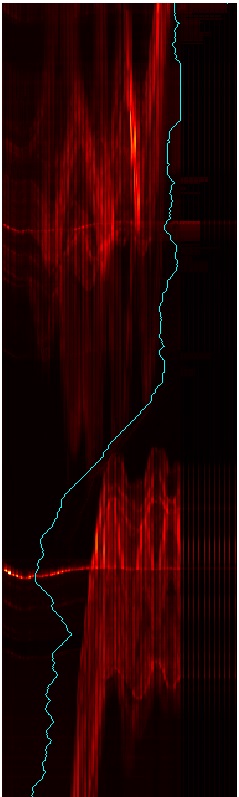

Ray tracer with path tracing (written for the Advanced Image Synthesis course at UNC). A simple ray tracer that implemented ray intersection with planes, spheres, and triangles. It included traditional stochastic path tracing, without direct lighting, to achieve (noisy!) soft shadows from area lights.
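The page does not say which ray-triangle test the ray tracer used; the Möller-Trumbore algorithm below is a common choice and is included only as a representative sketch. It solves for the ray parameter t and the barycentric coordinates (u, v) in one step, rejecting hits outside the triangle or behind the ray origin. `ray_triangle` and its `eps` tolerance are illustrative names.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def ray_triangle(orig: Vec3, direction: Vec3, v0: Vec3, v1: Vec3, v2: Vec3,
                 eps: float = 1e-9) -> Optional[float]:
    """Möller-Trumbore: distance t along the ray to the triangle, or None on a miss."""
    e1 = sub(v1, v0)
    e2 = sub(v2, v0)
    p = cross(direction, e2)
    det = dot(e1, p)
    if abs(det) < eps:  # ray is parallel to the triangle's plane
        return None
    inv_det = 1.0 / det
    s = sub(orig, v0)
    u = dot(s, p) * inv_det  # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, e1)
    v = dot(direction, q) * inv_det  # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, q) * inv_det
    return t if t > eps else None  # ignore hits behind (or at) the origin


tri = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
print(ray_triangle((0.25, 0.25, 1.0), (0.0, 0.0, -1.0), *tri))  # 1.0
```

In a path tracer without direct lighting, a path contributes light only when a random bounce happens to hit the area light, which is why soft shadows computed this way converge slowly and look noisy at low sample counts.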


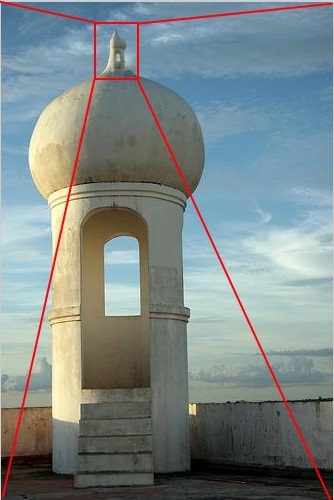

Photofractals (project for a computational photography course at UNC)


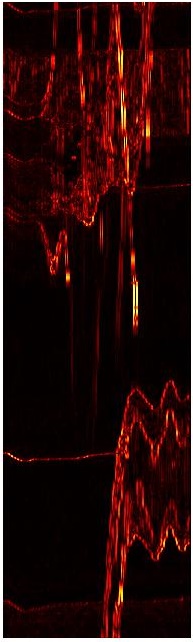

3D seam carving (project for a computer vision course at UNC)

Complex adaptive system for simulating cellular interactions (undergraduate project at UVA)