Jan-Michael Frahm

Research Assistant Professor in the 3D Computer Vision Group

Department of Computer Science

University of North Carolina at Chapel Hill

Tel: (919) 962 1703

Fax: (919) 962 1699

E-mail: jmf@cs.unc.edu


| Real-time Urban 3D Reconstruction |

| This research aims at developing a system for automatic, geo-registered, real-time 3D reconstruction from video of urban scenes. From 2005 to 2007 we developed a system that collects video streams together with GPS and inertial measurements in order to place the reconstructed models in geo-registered coordinates. It is designed using current state-of-the-art real-time modules for all processing steps and employs commodity graphics hardware and standard CPUs to achieve real-time performance. Our system extends existing algorithms to meet the robustness and variability requirements of operating outside the lab. To account for the large dynamic range of outdoor videos, the processing pipeline estimates global camera gain changes in the feature tracking stage and efficiently compensates for them in stereo estimation without impacting real-time performance. The accuracy required for many applications is achieved with a two-step stereo reconstruction process that exploits the redundancy across frames. More details can be found here. Our real-time stereo estimation code in CUDA can be found here. |
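The gain compensation described above can be illustrated with a minimal sketch. It is not the system's implementation: it assumes a purely multiplicative global gain between two consecutive frames and estimates it by least squares from the intensities of tracked features. The function names and the sample data are hypothetical.

```python
def estimate_global_gain(prev_intensities, curr_intensities):
    """Least-squares estimate of one gain g such that curr ~= g * prev.

    Assumption: the brightness change between frames is a single global
    multiplicative factor, observed at tracked feature locations.
    """
    if len(prev_intensities) != len(curr_intensities):
        raise ValueError("intensity lists must have the same length")
    numerator = sum(p * c for p, c in zip(prev_intensities, curr_intensities))
    denominator = sum(p * p for p in prev_intensities)
    if denominator == 0:
        raise ValueError("previous intensities are all zero")
    return numerator / denominator


def compensate_gain(intensities, gain):
    """Scale current-frame intensities back to the previous frame's exposure."""
    return [value / gain for value in intensities]


# Hypothetical data: intensities sampled at five tracked features.
prev = [40.0, 80.0, 120.0, 160.0, 200.0]
curr = [50.0, 100.0, 150.0, 200.0, 250.0]  # same features, brighter frame

gain = estimate_global_gain(prev, curr)
normalized = compensate_gain(curr, gain)
```

Once the gain is known, intensities from both frames can be compared directly, which is what a stereo matching cost needs when the camera's exposure changes between frames.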

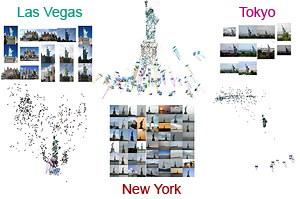

| 3D reconstruction and recognition of landmarks from photo collection |

| This research aims at the 3D modeling of landmark sites such as the Statue of Liberty based on large-scale contaminated image collections gathered from the Internet. Our system combines 2D appearance and 3D geometric constraints to efficiently extract scene summaries, build 3D models, and recognize instances of the landmark in new test images. We start by clustering images using low-dimensional global “gist” descriptors. Next, we perform geometric verification to retain only the clusters whose images share a common 3D structure. Each valid cluster is then represented by a single iconic view, and geometric relationships between iconic views are captured by an iconic scene graph. In addition to serving as a compact scene summary, this graph is used to guide structure from motion to efficiently produce 3D models of the different aspects of the landmark. The set of iconic images is also used for recognition, i.e., determining whether new test images contain the landmark. Results on three data sets consisting of tens of thousands of images demonstrate the potential of the proposed approach. |
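The verification and iconic-selection steps above can be sketched as follows. This is an illustrative simplification, not the published method: it assumes that clusters and pairwise geometric inlier counts have already been computed elsewhere (for example by a RANSAC-based two-view verification), and the thresholds `min_inliers` and `min_verified` are hypothetical values chosen only for the example.

```python
def select_iconic_views(clusters, inlier_counts, min_inliers=18, min_verified=3):
    """Return {cluster_id: iconic_image} for clusters that pass verification.

    clusters: dict mapping cluster id -> list of image ids.
    inlier_counts: dict mapping frozenset({a, b}) -> number of inlier matches
        between images a and b (assumed to be precomputed).
    """
    iconics = {}
    for cluster_id, images in clusters.items():
        best_image, best_score, best_support = None, -1, 0
        for image in images:
            counts = [
                inlier_counts.get(frozenset((image, other)), 0)
                for other in images
                if other != image
            ]
            # Only pairs with enough inliers count as geometrically verified.
            verified = [c for c in counts if c >= min_inliers]
            score = sum(verified)
            if score > best_score:
                best_image, best_score, best_support = image, score, len(verified)
        # Keep the cluster only if the iconic view plus its verified partners
        # form a large enough consistent group.
        if best_image is not None and best_support + 1 >= min_verified:
            iconics[cluster_id] = best_image
    return iconics


# Hypothetical example: one consistent cluster and one contaminated cluster.
clusters = {"front": ["a", "b", "c"], "noise": ["x", "y", "z"]}
inlier_counts = {
    frozenset(("a", "b")): 40,
    frozenset(("a", "c")): 35,
    frozenset(("b", "c")): 20,
    frozenset(("x", "y")): 5,
}
iconics = select_iconic_views(clusters, inlier_counts)
```

In this sketch the contaminated cluster is discarded because none of its image pairs reaches the inlier threshold, while the consistent cluster is summarized by the image with the strongest verified support.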

| Camera motion estimation from uncalibrated videos |

| Tracking and calibration for multi-camera systems |

| GPU accelerated 2D tracking and matching |

| Marker-less Augmented Reality |

| The goal of augmented reality is to insert virtual objects into real scenes. We developed a system for high-quality marker-less augmented reality with realistic direct illumination of the virtual objects. The lights of the scene are localized and used for direct illumination of the virtual object placed in the scene. Our method leaves the real scene unmodified, which overcomes a limitation of many systems that require markers or additional equipment in the scene to reconstruct the illumination. For more details look here. |

| Differential Camera Tracking |

| Camera-based tracking methods typically rely on a constant scene appearance over all viewpoints. This fundamental assumption is violated in complex environments containing reflections and semi-transparent surfaces. We developed a passive optical camera tracking method that works in these very complex environments, including scenes with curved mirrors and semi-transparent surfaces. More details can be found here. |

| Synthetic Illumination of Real Objects |

| We developed a system to capture the illumination from a virtual environment map. However, the system has the drawback of a complex calibration procedure that is limited to planar screens. We propose a simple calibration procedure using a reflective calibration object that is able to deal with arbitrary screen geometries. Our calibration procedure is not limited to our application and can be used to calibrate most camera-projector systems. |

| Camera based Natural User Interaction |

| Computer vision provides a powerful tool for tracking users in interactive environments with cameras. This has the advantage of enabling natural interaction without the need to mount any sensors on the user. We developed a system that uses cameras for view control in a virtual reality system. More details can be found here. |