Hearing text read aloud is slower than reading it visually. The user listens passively, unable to skim for specific information, scan for an overview, or skip ahead as may be desired. Conventional text readers allow no scanning or skimming. They skip always by one line or one page, without allowing the user to specify a different amount. Furthermore, if the user chooses to listen passively and not skip ahead, then the only way the user can finish sooner is to speed up the speech, which makes it less comprehensible after a point. Nearly any method of speeding up reading can make it less comprehensible, but certain methods may be better than others, and different users may have different preferences.

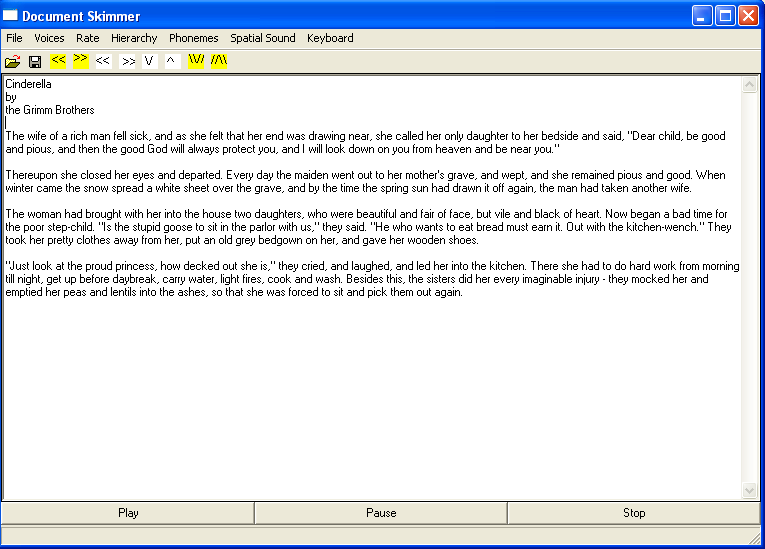

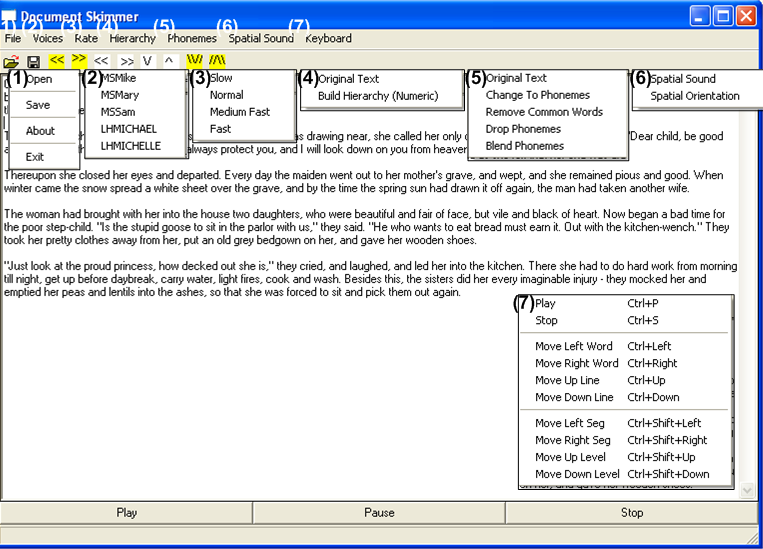

Our Document Skimmer speeds up reading and allows the user to decide how to make the tradeoff with comprehension. The user can listen actively, skipping by specified distances or scanning by specified detail amounts. He/she can skip words, lines, or segments of specified size, both forward and backwards. He/she can scan by listening to text with less detail, simultaneous speech streams, words with dropped phonemes, or words with blended phonemes.

|

Back to top |

Our program allows users to choose among multiple voices to read the text. MSMary seemed to be a favorite of people who used this software. The program also allows users to vary the rate at which text is read.

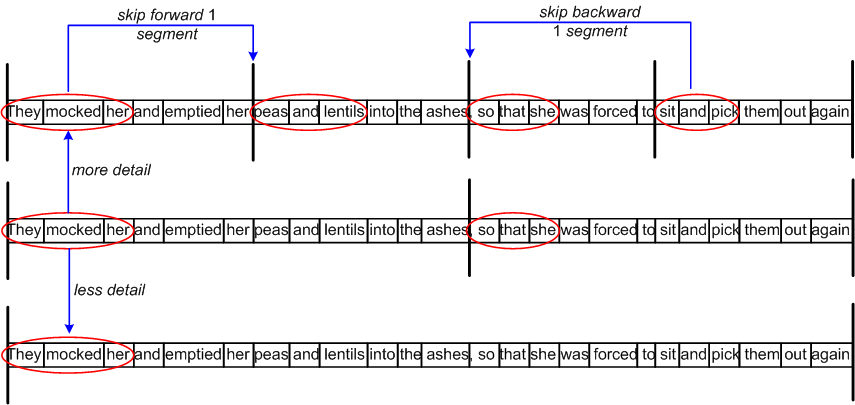

The hierarchy allows the user to hear and traverse the document at various levels of detail (LODs). The document is split into segments of size N, and for each segment only the first subsegment of size M is read (M <= N). Before hearing the text, the user specifies the values "segment size" N and "subsegment size" M. The hierarchy is then built. While hearing the text, the user can hit keys to increase or decrease the segment size in increments of N, or to jump forward or backwards in increments of M. These actions are traversing parent-child to more or less detail, and skipping sibling-sibling forwards or backwards in the text.

For example, the user may use segments of size 6 and subsegments of size 3. He/she may then traverse up the hierarchy to LODs characterized by segments of size 12, 18, 24,..., 6*floor(len(doc)/6). Within each LOD, he/she hears the first 3 words of every segment. He/she and may jump forward or backward by segments of size corresponding to the LOD.

The hierarchy simulates how people naturally skim visually, by alternately reading and skipping portions of text at will. The Speech Skimmer presents a similar hierarchy, except it processes speech instead of text, and its segments sizes are based on semantics instead of fixed. A speech stream is split into segments at estimated locations of topic change. Subsegments are a fixed number of seconds long.

People who research speech, literacy, and reading are trying to understand the processes involved when people read silently. When some people read, they subvocalize, which means they repeat each word they read silently in their head. Some researchers believe this is a common cause (or symptom) of reading disabilities, partly because it decreases reading speed. In addition, some researchers believe subvocalization decreases comprehension because the reader's attention is on individual words instead of the idea the words are creating. Because of this, we thought that hearing each word read aloud may not be a necessary prerequisite to understanding text. Our software experiments with this idea.

First, our software has a "Remove Common Words" menu option. When chosen, common articles and prepositions are removed from the text. A total of 56 words compose our list of common words. That list can be viewed here.

Our software also has a "Drop Phonemes" menu option. When chosen, phonemes with no lexical stress are removed from the text. For example, in the word "computing", essentially the first syllable would be dropped.

Finally, our software has a "Blend Phonemes" menu option. When chosen, the spaces between words which share phonemes are removed. Specifically, if either of the last two phonemes of a word are found in either of the first two phonemes of the next word, the space between the two words is removed. For example, in the phrase "What up", since the "uh" sound is heard in both words, the space between these words would be removed.

As part of our investigation into how text could be understood more quickly when it is heard, we wanted to learn how text can be understood more quickly as it is seen. We read about a variety of techniques used when people learn how to speed read. In particular, many speed reading exercises teach people to read text as chunks, instead of word-by-word. One exercise asks readers to make a circle out of their index fingers and thumbs and to try to read all of the words in that circle at once. This led us to experiment with the idea of such a circle as text is heard. Specifically, we use spatial sound to allow multiple words to be heard at once.

In our TextSkimmer the spatial sound was implemented as follows. On the menu bar there is a Spatial Sound menu that consists of two choices, Spatial Sound and Spatial Orientation. If you choose Spatial Sound in this menu a box will pop up asking you for number of sources you would like to have. We decided to support only 1, 2, 3, or 4 sources.

|

Back to top |

|

Back to top |

In order to evaluate our software, we conducted a pilot study, approved by the University of North Carolina's Internal Review Board. You can read the study proposal, the informed consent form, and the parental consent form.

Four people participated in this study. In addition, three others used our software informally and provided feedback. The protocol, including questions asked as part of this study, can be read here.

Participants were asked to perform three tasks:

In Task 1, participants were asked asked questions about some text, and were asked to find answers to these questions by navigating through the text. In general, participants found this difficult to do. Although in some cases the correct answers were located, this generally took more than a minute to do. Some participants skipped forward and backward lines, and often skipped forward and backward words, but the hierarchy was rarely used.

In Task 2, participants heard a story read. Each section of the story was read in a different way. After each portion of the text was heard, three questions were asked.

In Task 3, participants heard a story read. Each section of the story was read in a different way. After each portion of the text was heard, three questions were asked.

|

Back to top |

Although our experiments began to answer some questions, it raised even more. For example:

|

Back to top |

Code for Our Document Skimmer

Code for Our Document Skimmer

Since our program is only a prototype we do not support the one link installation process. If you want to download our program and see how it works here are the things you will need to have:

|

Back to top |

|

Back to top |